About three years ago we got our first look at Nvidia's Kepler architecture that powered the GTX 680, a $500 card packing 3.54 billion transistors and 192GB/s bandwidth. It was the end of the line for the GeForce 600 series, but it wasn't the end for the Kepler architecture.

While we eagerly awaited next-gen GeForce 700 cards, Nvidia dropped the GeForce GTX Titan, wielding 7.08 billion transistors for an unwieldy price of $1,000. The Titan instantly claimed the king-of-the-hill spot, and even though the Radeon R9 290X brought similar performance for half the price six months later, Nvidia refused to budge on the Titan's MSRP.

This was every gamer's dream GPU for half a year, but its fate was sealed when the GTX 780 Ti shipped many months later (Nov/13), offering more CUDA cores at a more affordable $700.

Although the GTX Titan was great for gaming, that wasn't the sole purpose of the GPU, which was equipped with 64 double-precision cores per SMX for 1.3 teraflops of double-precision performance. Previously only found in Tesla workstations and supercomputers, this feature made the Titan ideal for students, researchers and engineers looking for consumer-level supercomputing performance.

A year after the original Titan's release, Nvidia followed up with a full 2880-core version known as the Titan Black, which boosted the card's double-precision performance to 1.7 teraflops. A month later, the GTX Titan Z put two Titan Blacks on one PCB for 2.7 teraflops of compute power, though this card never made sense at $3,000 -- triple the Titan Black's price.

Since then, the Maxwell-based GeForce 900 series has arrived, with the GTX 980's unbeatable performance vs. power ratio leading the charge as today's undisputed single-GPU king. With a modest 2048 cores and 5.2 billion transistors in a small 398mm² die, the GTX 980's GPU is 29% smaller and has 26% fewer transistors than the flagship Kepler parts.

We knew there would be more ahead for Maxwell and so here it comes. Six months after the GTX 980, Nvidia is back with the GeForce GTX Titan X, a card that's bigger and more complex than any other. However, unlike previous Titan GPUs, the new Titan X is designed exclusively for high-end gaming and as such offers compute performance similar to that of the GTX 980.

Announced at GDC, the card gives us plenty to be psyched about: headline features include 3072 CUDA cores, 12GB of GDDR5 memory running at 7Gbps, and a whopping 8 billion transistors. At its peak, the GTX Titan X will deliver 6600 GFLOPS of single-precision and 206 GFLOPS of double-precision processing power.
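The peak throughput figures can be reproduced with a quick back-of-the-envelope calculation. The sketch below assumes each CUDA core retires one fused multiply-add (two floating-point operations) per clock, and it uses the 1075MHz typical boost clock and the 1/32 double-precision rate quoted elsewhere in this review. The helper name `peak_gflops` is ours, purely for illustration.

```python
def peak_gflops(cuda_cores: int, clock_mhz: float, flops_per_core_per_clock: int = 2) -> float:
    """Theoretical peak throughput in GFLOPS.

    Assumes every core issues one FMA (counted as 2 FLOPs) each clock,
    which is an upper bound rather than a measured figure.
    """
    return cuda_cores * flops_per_core_per_clock * clock_mhz / 1000.0


CUDA_CORES = 3072        # Titan X core count from the review
BOOST_CLOCK_MHZ = 1075   # typical boost clock from the review
DP_RATIO = 1 / 32        # double-precision rate relative to single precision

single_precision = peak_gflops(CUDA_CORES, BOOST_CLOCK_MHZ)
double_precision = single_precision * DP_RATIO

print(f"single precision: {single_precision:.1f} GFLOPS")
print(f"double precision: {double_precision:.1f} GFLOPS")
```

Under those assumptions the result lands at roughly 6605 GFLOPS single precision and 206 GFLOPS double precision, in line with the rounded numbers Nvidia quotes.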

Nvidia held back pricing information until the last minute, revealing it during the opening keynote at its GPU Technology Conference -- unsurprisingly, the Titan X will be $999. But rather than getting bogged down in how stupid that was, let's focus on the fact that we get to show you how the GTX Titan X performs and that it's a hard launch with availability expected today.

Titan X's GM200 GPU in Detail

The GeForce Titan X is a processing powerhouse. The GM200 chip carries six graphics processing clusters and 24 streaming multiprocessors with a total of 3072 CUDA cores (single precision).

As noted earlier, the Titan X features a core configuration that consists of 3072 SPUs which take care of pixel/vertex/geometry shading duties, while texture filtering is performed by 192 texture units. With a base clock frequency of 1000MHz, the texture filtering rate is 192 Gigatexels/sec, which is over 33% higher than the GTX 980. The Titan X also ships with 3MB of L2 cache and 96 ROPs.
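The texture filtering rate is simply the number of texture units multiplied by the core clock. Here is a minimal sketch of that arithmetic. The GTX 980 figures (128 texture units at a 1126MHz base clock) are not stated in this review and are our assumption from that card's reference specification. `texel_rate_gtexels` is a hypothetical helper name.

```python
def texel_rate_gtexels(texture_units: int, clock_mhz: float) -> float:
    """Peak bilinear texel fill rate in Gigatexels/sec (one texel per unit per clock)."""
    return texture_units * clock_mhz / 1000.0


# Titan X: 192 texture units at the 1000MHz base clock (from the review).
titan_x_rate = texel_rate_gtexels(192, 1000)

# GTX 980: ASSUMED reference figures, not taken from this review.
gtx_980_rate = texel_rate_gtexels(128, 1126)

advantage_pct = (titan_x_rate / gtx_980_rate - 1) * 100

print(f"Titan X: {titan_x_rate:.1f} GT/s")
print(f"GTX 980: {gtx_980_rate:.1f} GT/s")
print(f"Titan X advantage: {advantage_pct:.1f}%")
```

With those inputs the Titan X comes out a little over 33% ahead, which matches the claim above.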

The memory subsystem of the GTX Titan X consists of six 64-bit memory controllers (384-bit in total) with 12GB of GDDR5 memory. The 384-bit memory interface and 7GHz effective memory clock deliver a peak memory bandwidth of 336.5GB/sec, 50% higher than the GTX 980.
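Peak memory bandwidth follows directly from bus width and per-pin data rate. The sketch below uses the 7.01Gbps effective rate mentioned in our overclocking notes. The GTX 980's 256-bit bus at 7Gbps is an assumption taken from that card's reference specification rather than from this review, and `memory_bandwidth_gbs` is an illustrative helper name.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes moved per transfer times transfers per second."""
    return bus_width_bits / 8 * data_rate_gbps


# Titan X: six 64-bit controllers, 7.01Gbps effective data rate.
titan_x_bw = memory_bandwidth_gbs(6 * 64, 7.01)

# GTX 980: ASSUMED 256-bit bus at 7Gbps (not stated in this review).
gtx_980_bw = memory_bandwidth_gbs(256, 7.0)

print(f"Titan X: {titan_x_bw:.1f} GB/s")
print(f"GTX 980: {gtx_980_bw:.1f} GB/s")
print(f"ratio: {titan_x_bw / gtx_980_bw:.2f}x")
```

The ratio works out to about 1.5x, as you would expect from a bus that is 50% wider running at essentially the same data rate.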

And with its massive 12GB of GDDR5 memory, gamers can play the latest DX12 games on the Titan X at 4K resolutions without worrying about running short on graphics memory.

Nvidia says that the Titan X is built using the full implementation of GM200. The display/video engines are unchanged from the GM204 GPU used in the GTX 980. Also like the GTX 980, overall double-precision instruction throughput is 1/32 the rate of single-precision instruction throughput.

As mentioned, the base clock speed of the GTX Titan X is 1000MHz, though it does feature a typical Boost Clock speed of 1075MHz. The Boost Clock speed is based on the average Titan X card running a wide variety of games and applications. Note that the actual Boost Clock will vary from game to game depending on actual system conditions.

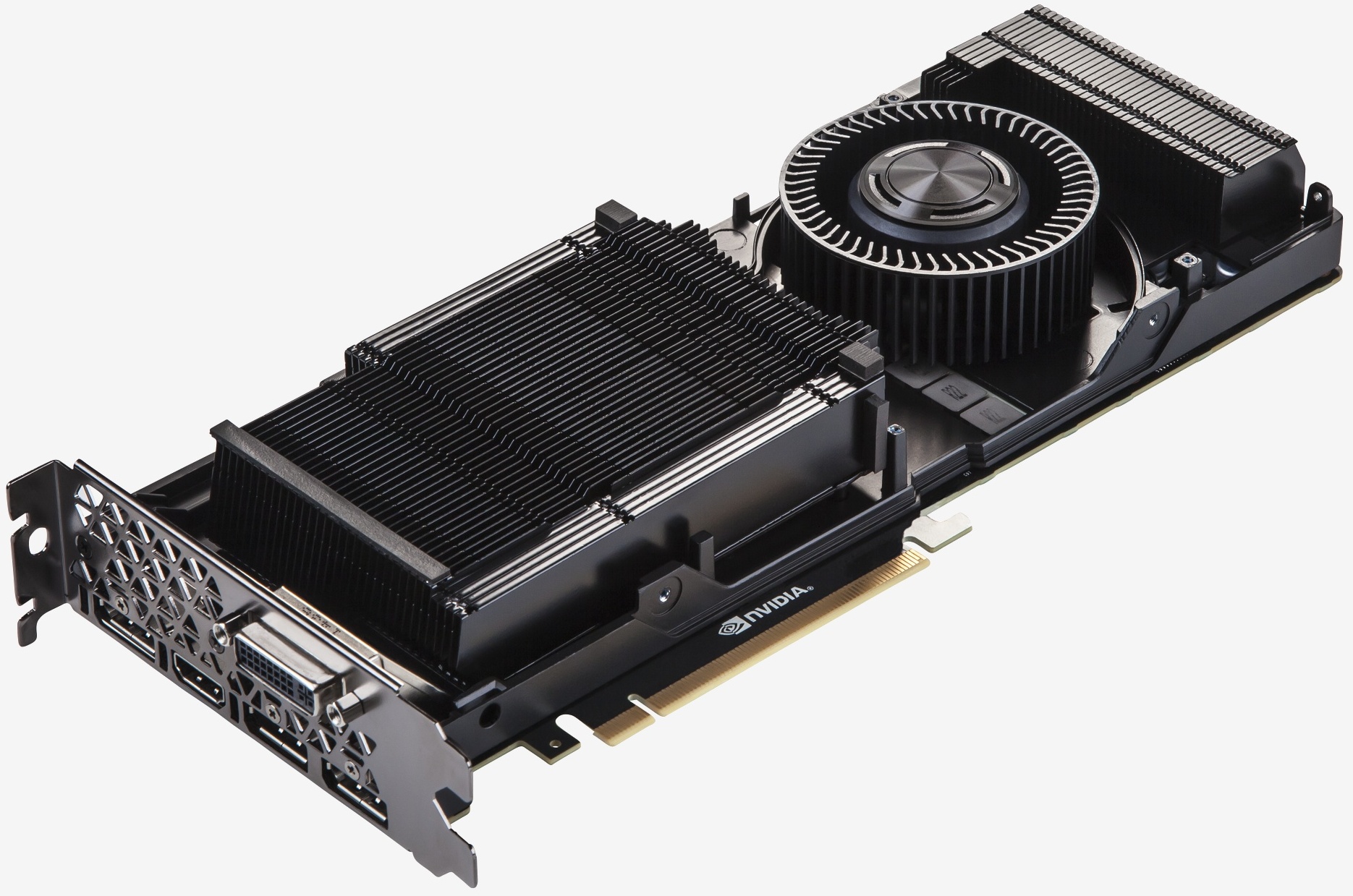

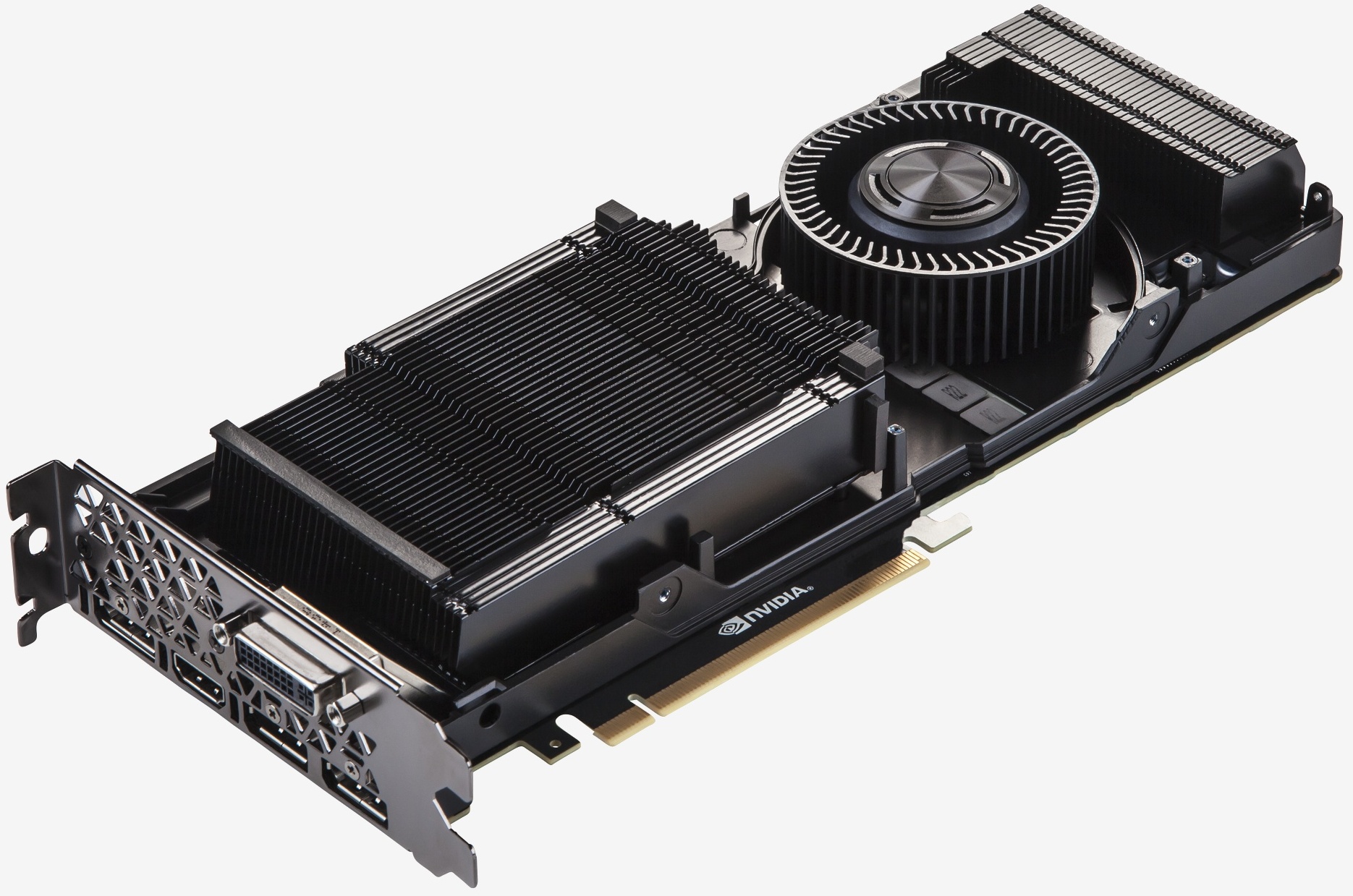

Setting performance aside for a moment, one of the Titan X's other noteworthy features is its stunning board design. As was the case with previous Titan cards, the Titan X has an aluminum cover. The metal casing gives the board a premium look and feel, while the card's unique black cover sets it apart from predecessors -- this is the Darth Vader of Titans.

A copper vapor chamber is used to cool the Titan X's GM200 GPU. This vapor chamber is combined with a large, dual-slot aluminum heatsink to dissipate heat from the chip. A blower-style fan then exhausts the hot air through the back of the graphics card and out of the PC’s chassis. The fan is designed to run very quietly, even under load when the card is overclocked.

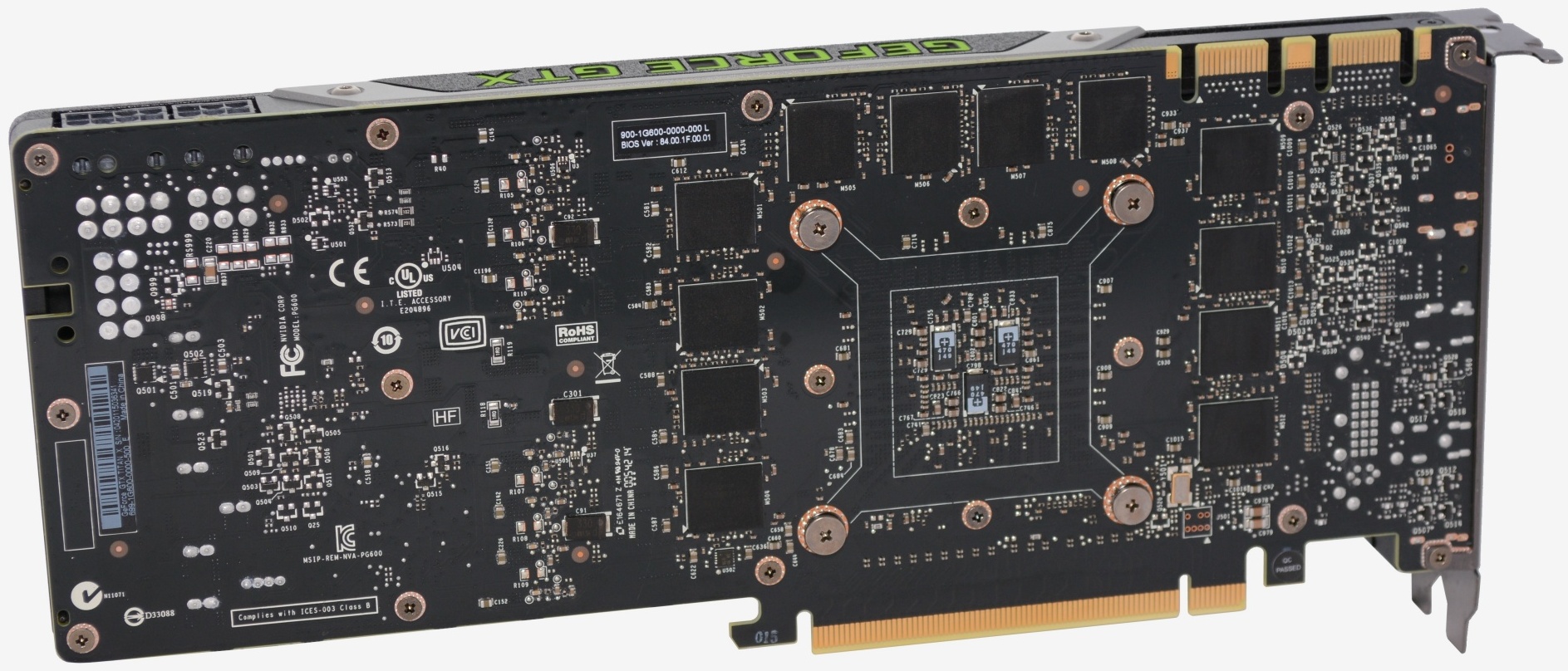

If you recall, the GTX 980 reference board design included a backplate on the underside of the card with a section that could be removed in order to improve airflow when multiple GTX 980 cards are placed directly adjacent to each other (as with 3- and 4-way SLI, for example). In order to provide maximum airflow to the Titan X's cooler in these situations, Nvidia does not include a backplate on the Titan X reference board.

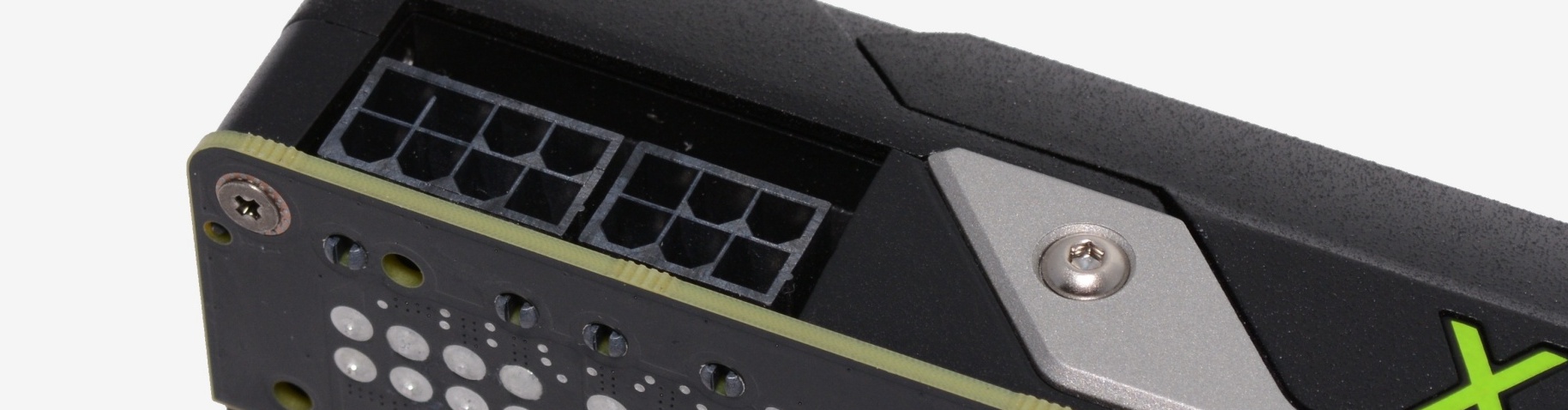

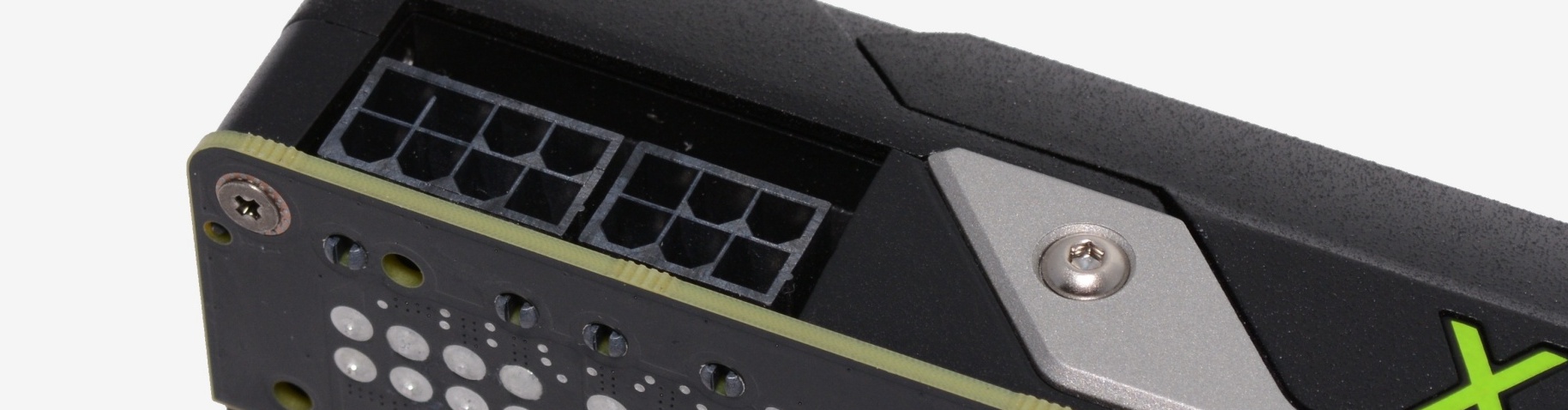

The Titan X reference board measures 10.5" long. Display outputs include one dual-link DVI output, one HDMI 2.0 output and three DisplayPort connectors. One 8-pin PCIe power connector and one 6-pin PCIe power connector are required for operation.

Speaking of power connectors, the Titan X has a TDP rating of 250 watts and Nvidia calls for a 600W power supply when running just a single card. That TDP is a little over 50% higher than the GTX 980's, though it is still 14% lower than that of the Radeon R9 290X.

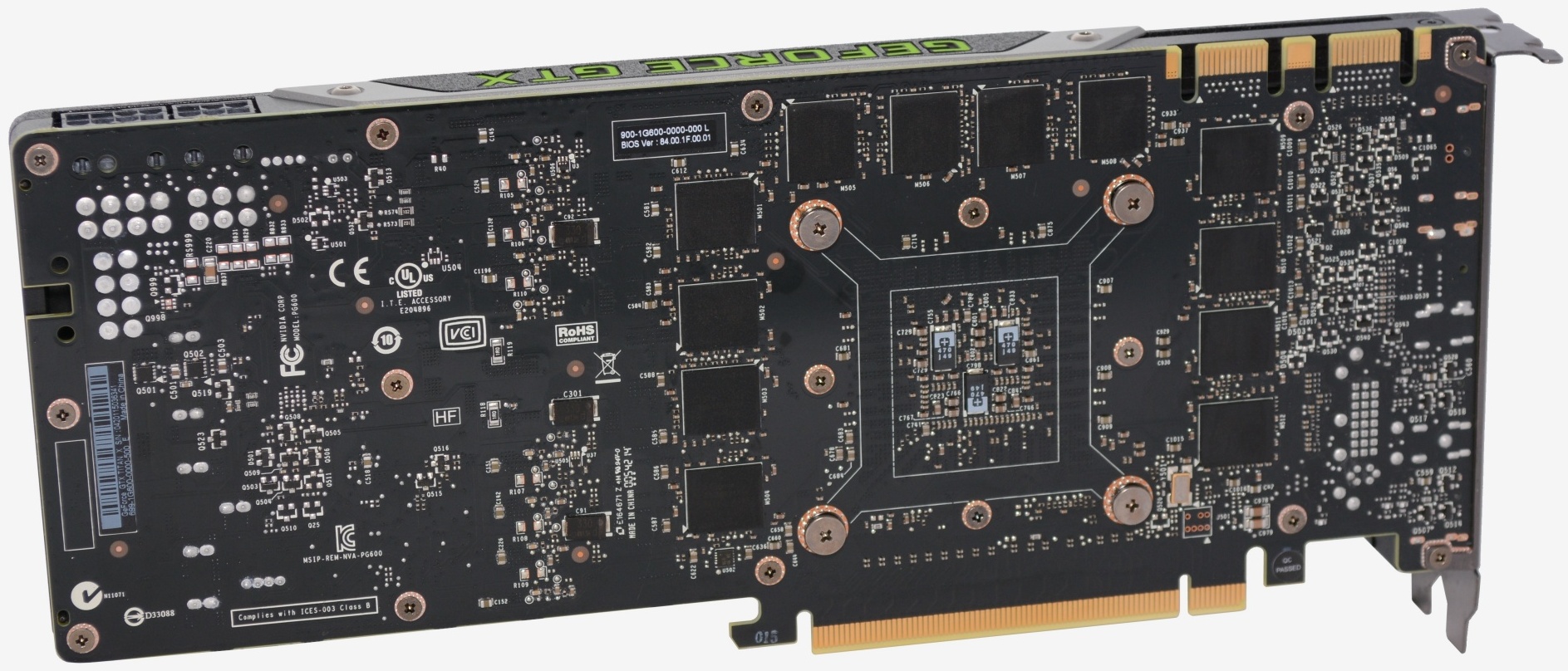

Nvidia says that, being a gaming enthusiast's graphics card, the Titan X has been designed for overclocking and implements a six-phase power supply with overvoltaging capability. An additional two-phase power supply is dedicated to the board's GDDR5 memory.

This 6+2 phase design supplies Titan X with more than enough power, even when the board is overclocked. The Titan X reference board design supplies the GPU with 275 watts of power at the maximum power target setting of 110%.
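As a sanity check, the 275W figure is just the 250W TDP scaled by the 110% power target. The sketch below also compares it with what the slot and the two auxiliary connectors can nominally supply. The 75W (slot), 75W (6-pin) and 150W (8-pin) limits are our assumption from the PCI Express power specifications and are not figures from this review.

```python
def board_power_limit_w(tdp_w: float, power_target_pct: float) -> float:
    """Board power limit in watts at a given power target percentage."""
    return tdp_w * power_target_pct / 100.0


TDP_W = 250             # Titan X TDP from the review
MAX_POWER_TARGET = 110  # maximum power target setting from the review

# ASSUMED nominal PCIe limits (slot, 6-pin, 8-pin); not from the review.
PCIE_SLOT_W, SIX_PIN_W, EIGHT_PIN_W = 75, 75, 150

limit_w = board_power_limit_w(TDP_W, MAX_POWER_TARGET)
connector_budget_w = PCIE_SLOT_W + SIX_PIN_W + EIGHT_PIN_W

print(f"power limit at {MAX_POWER_TARGET}%: {limit_w:.0f}W")
print(f"nominal connector budget: {connector_budget_w}W")
print(f"headroom: {connector_budget_w - limit_w:.0f}W")
```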

Nvidia has used polarized capacitors (POSCAPs) and molded inductors to minimize unwanted board noise. To further improve the Titan X's overclocking potential, Nvidia has improved airflow to these board components so they run cooler compared to previous high-end GK110 products, including the original GTX Titan.

Moreover, Nvidia says it pushed the Titan X to speeds of 1.4GHz using nothing more than the supplied air-cooler during its own testing, so we're obviously interested in testing that.

Testing Methodology

All GPU configurations have been tested at 2560x1600 and 3840x2160 (4K UHD), and for this review we will be discussing the results from both resolutions.

All graphics cards have been tested with core and memory clock speeds set to AMD's and Nvidia's reference specifications.

Test System Specs

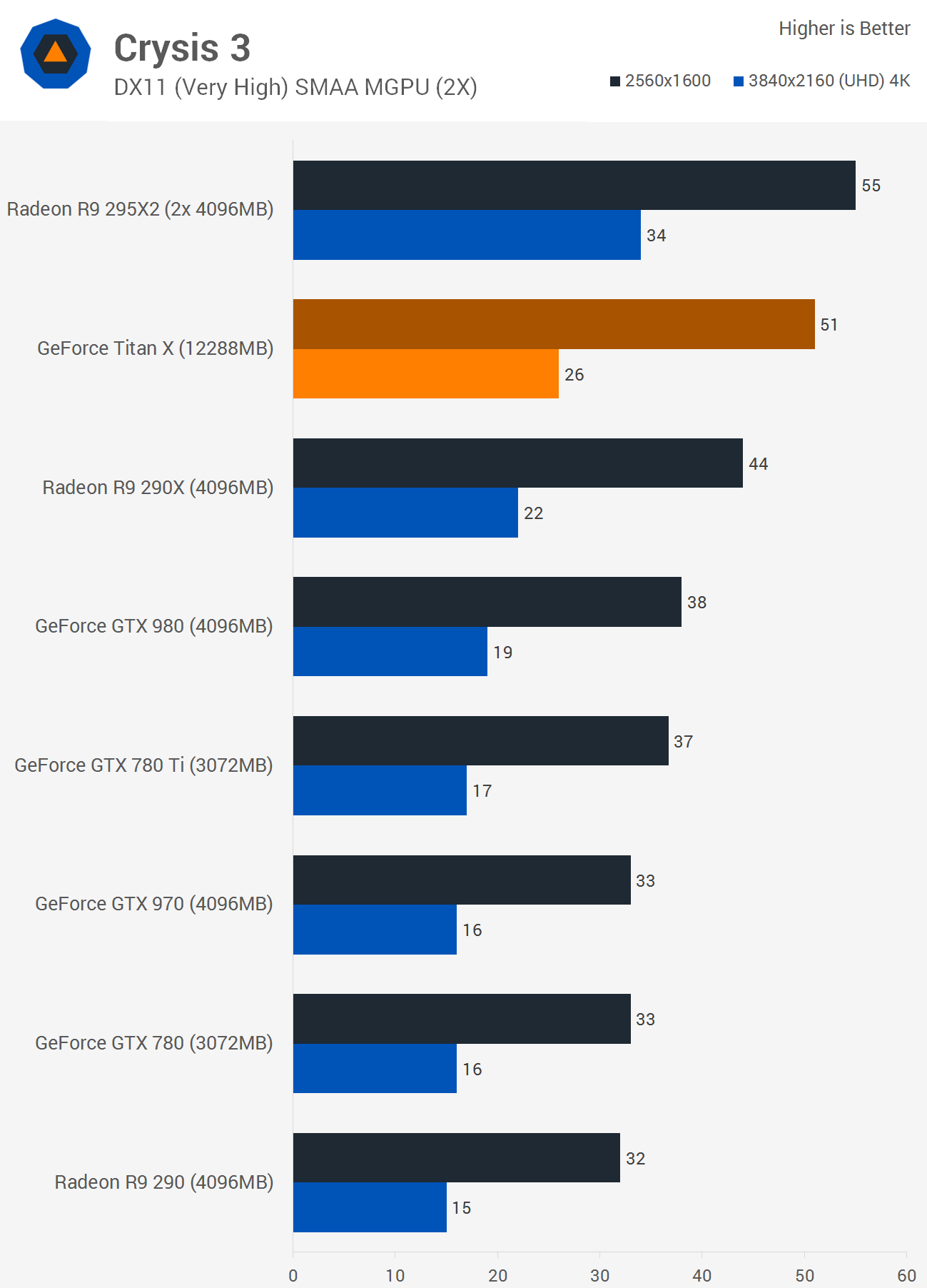

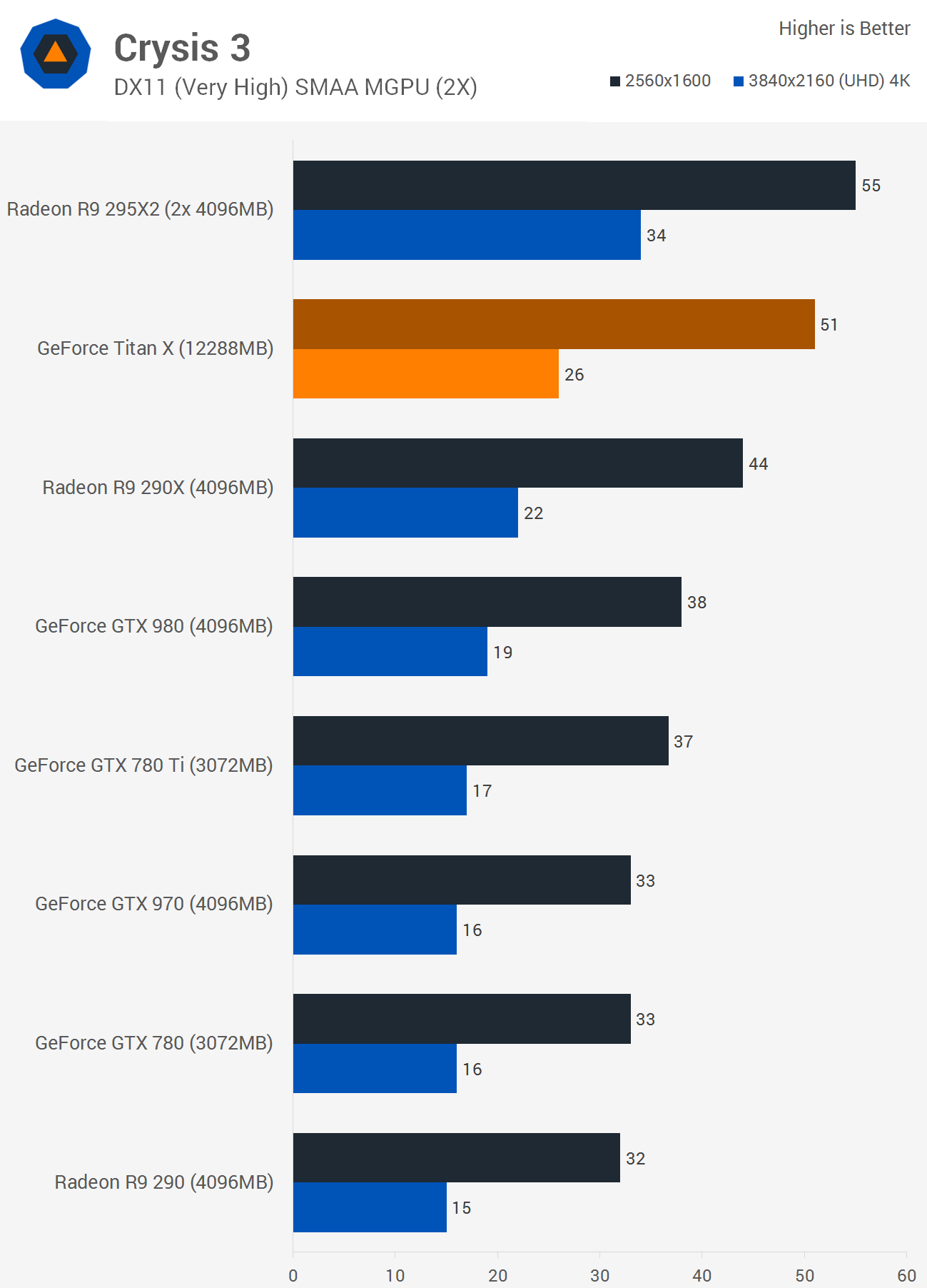

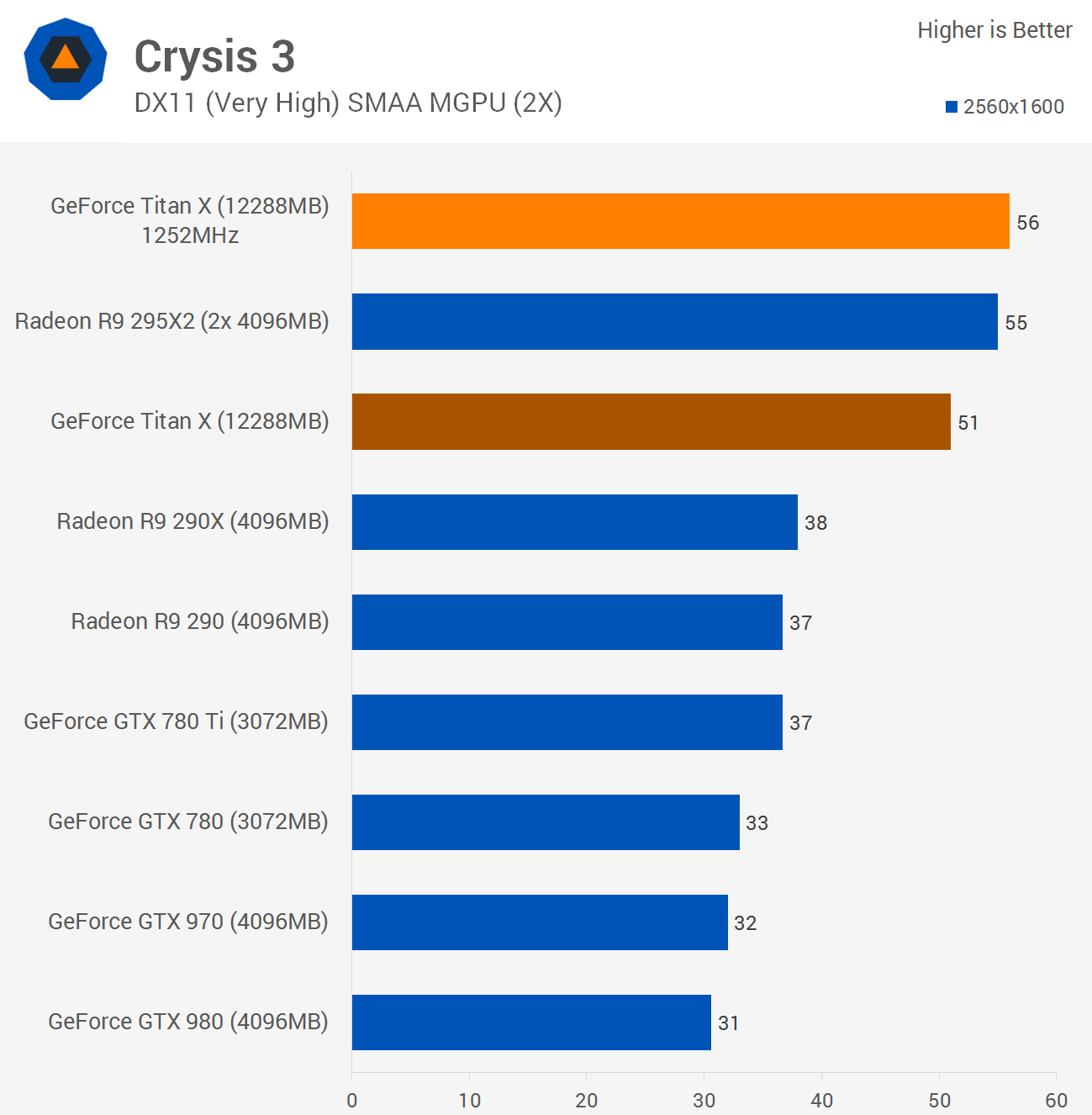

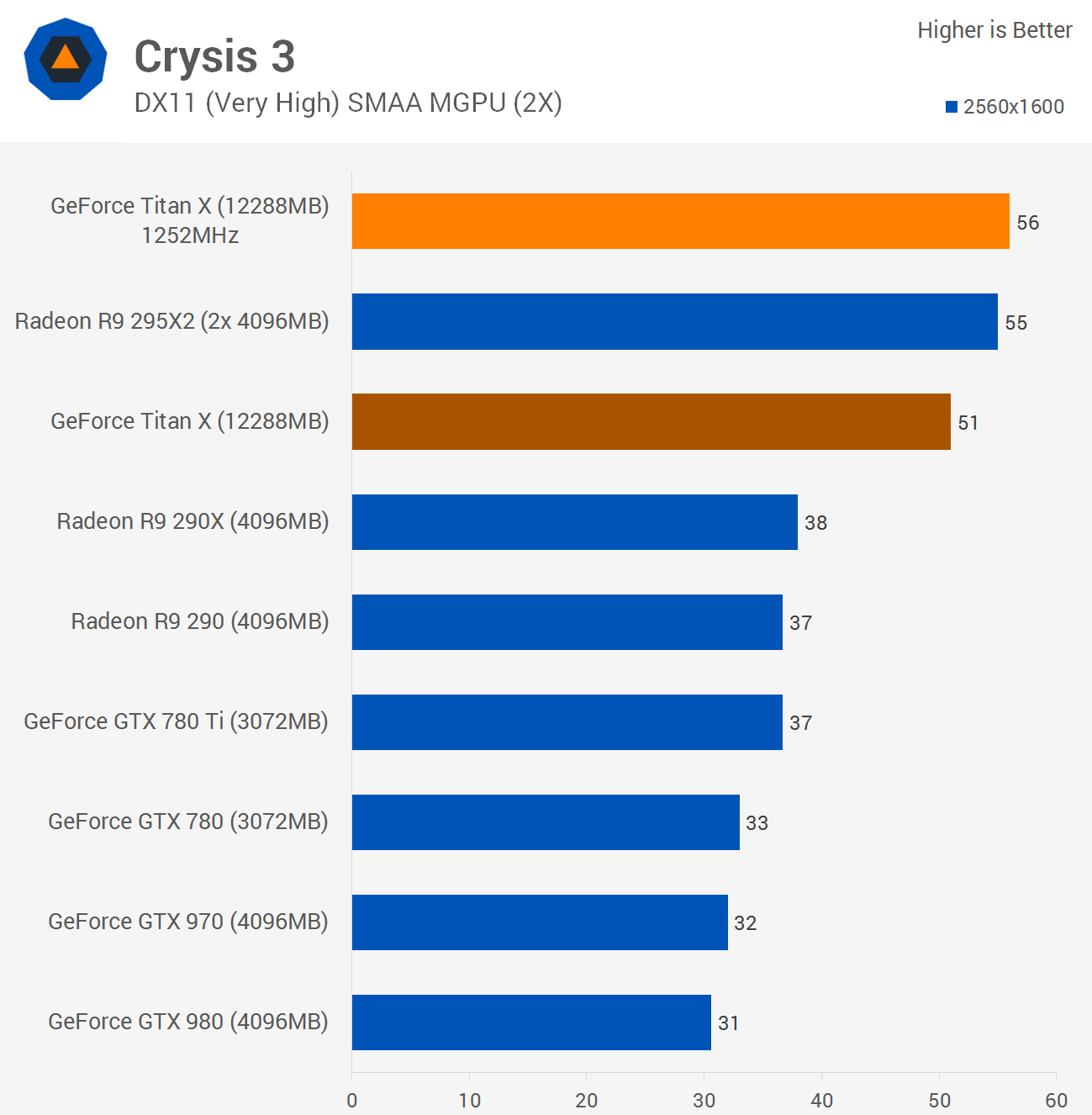

When gaming at 2560x1600 in Crysis 3 the GTX Titan X rendered an impressive 51fps on average, 34% faster than the GTX 980 and 15% faster than the R9 290X, while it was just 7% slower than the R9 295X2.

Jumping to 4K reduced the average frame rate to just 26fps, though if we were to disable anti-aliasing we would likely see playable performance. Even so, with 26fps the GTX Titan X was 37% faster than the GTX 980 and 18% faster than the R9 290X while also being 24% slower than the R9 295X2.
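For readers who prefer absolute numbers, the percentages can be turned back into approximate frame rates for the other cards. This is only a rough sketch: it assumes "N% slower than B" means the Titan X delivered (100 - N)% of B's frame rate, and because the quoted figures are rounded, the results are estimates rather than measured values. The charts remain the authoritative source. `implied_fps` is an illustrative helper name.

```python
def implied_fps(titan_x_fps: float, pct_delta: float) -> float:
    """Estimate another card's fps from the Titan X result.

    pct_delta is how much faster (+) or slower (-) the Titan X was,
    in percent, relative to that card.
    """
    return titan_x_fps / (1 + pct_delta / 100.0)


TITAN_X_CRYSIS3_1600P = 51  # fps at 2560x1600, from the review

estimates = {
    "GTX 980": implied_fps(TITAN_X_CRYSIS3_1600P, 34),    # Titan X 34% faster
    "R9 290X": implied_fps(TITAN_X_CRYSIS3_1600P, 15),    # Titan X 15% faster
    "R9 295X2": implied_fps(TITAN_X_CRYSIS3_1600P, -7),   # Titan X 7% slower
}

for card, fps in estimates.items():
    print(f"{card}: ~{fps:.1f}fps (estimate)")
```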

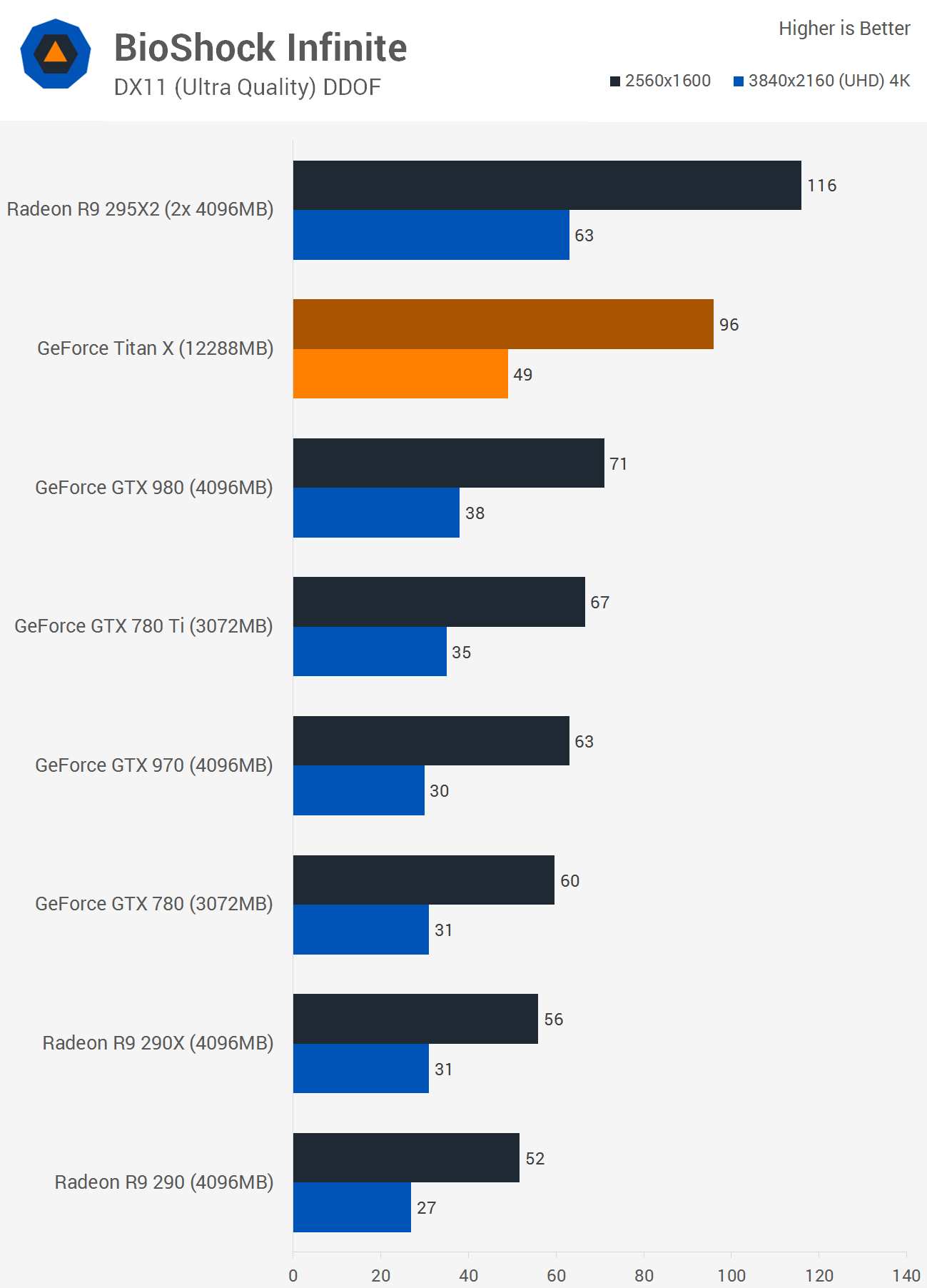

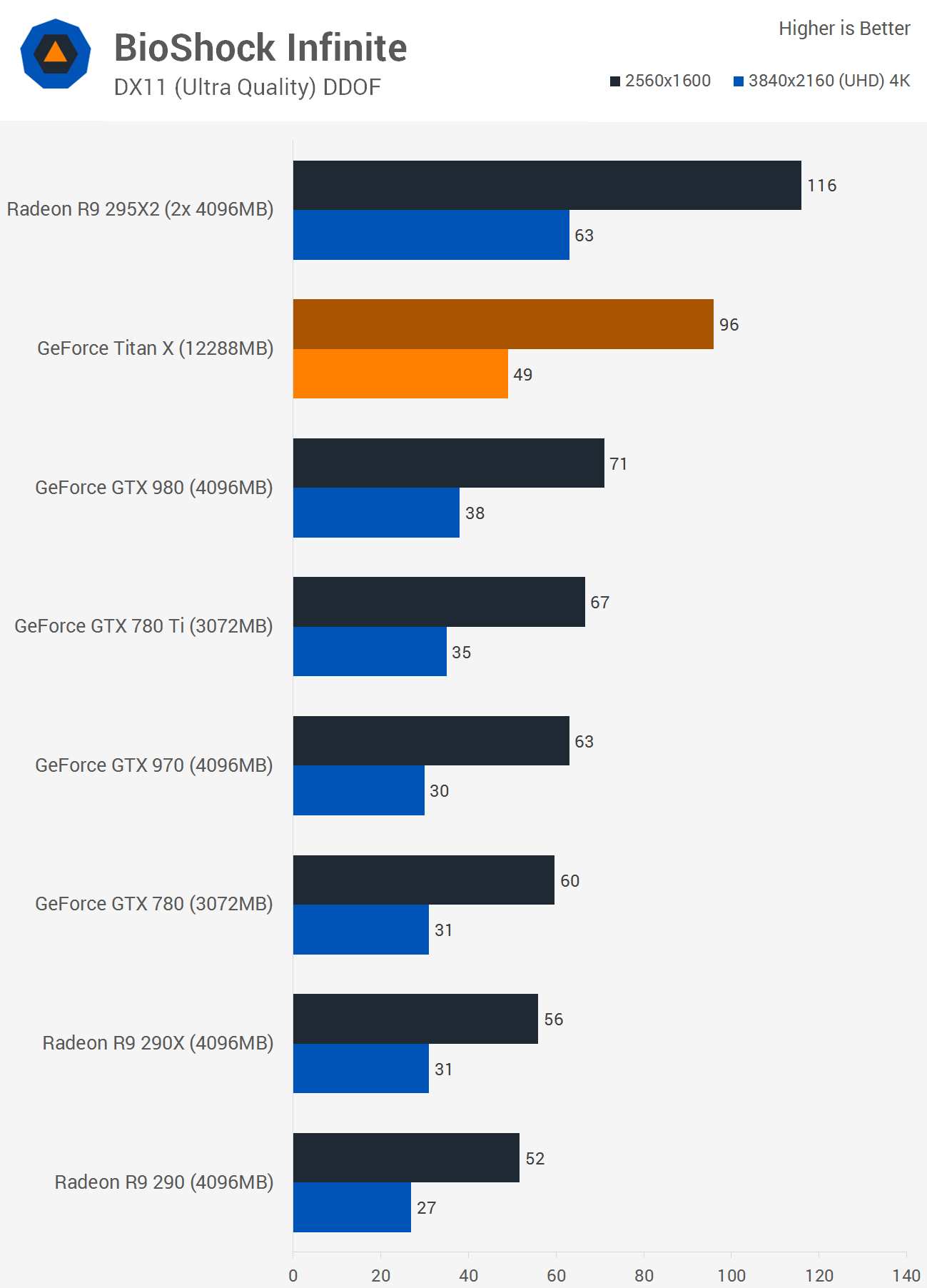

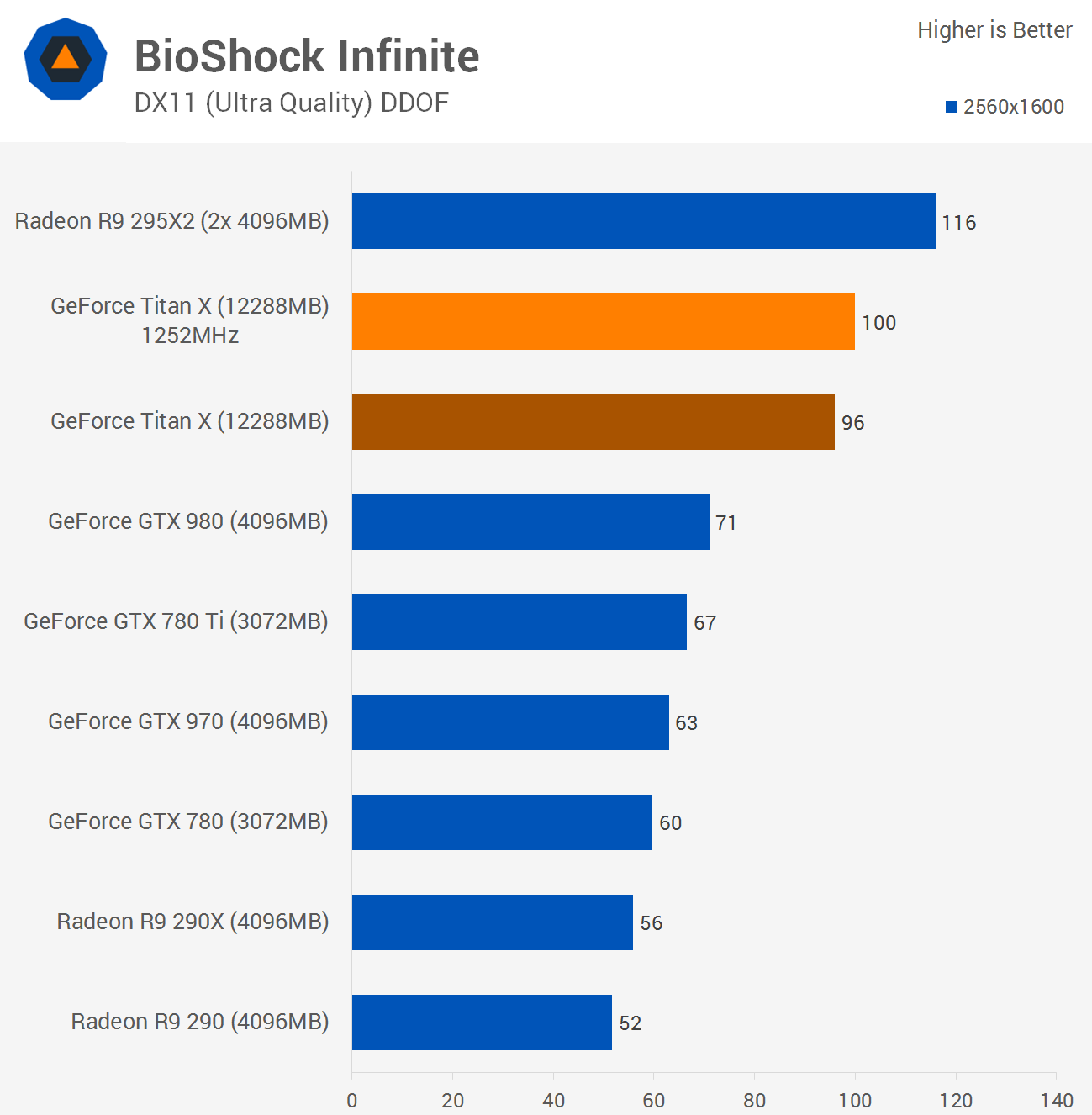

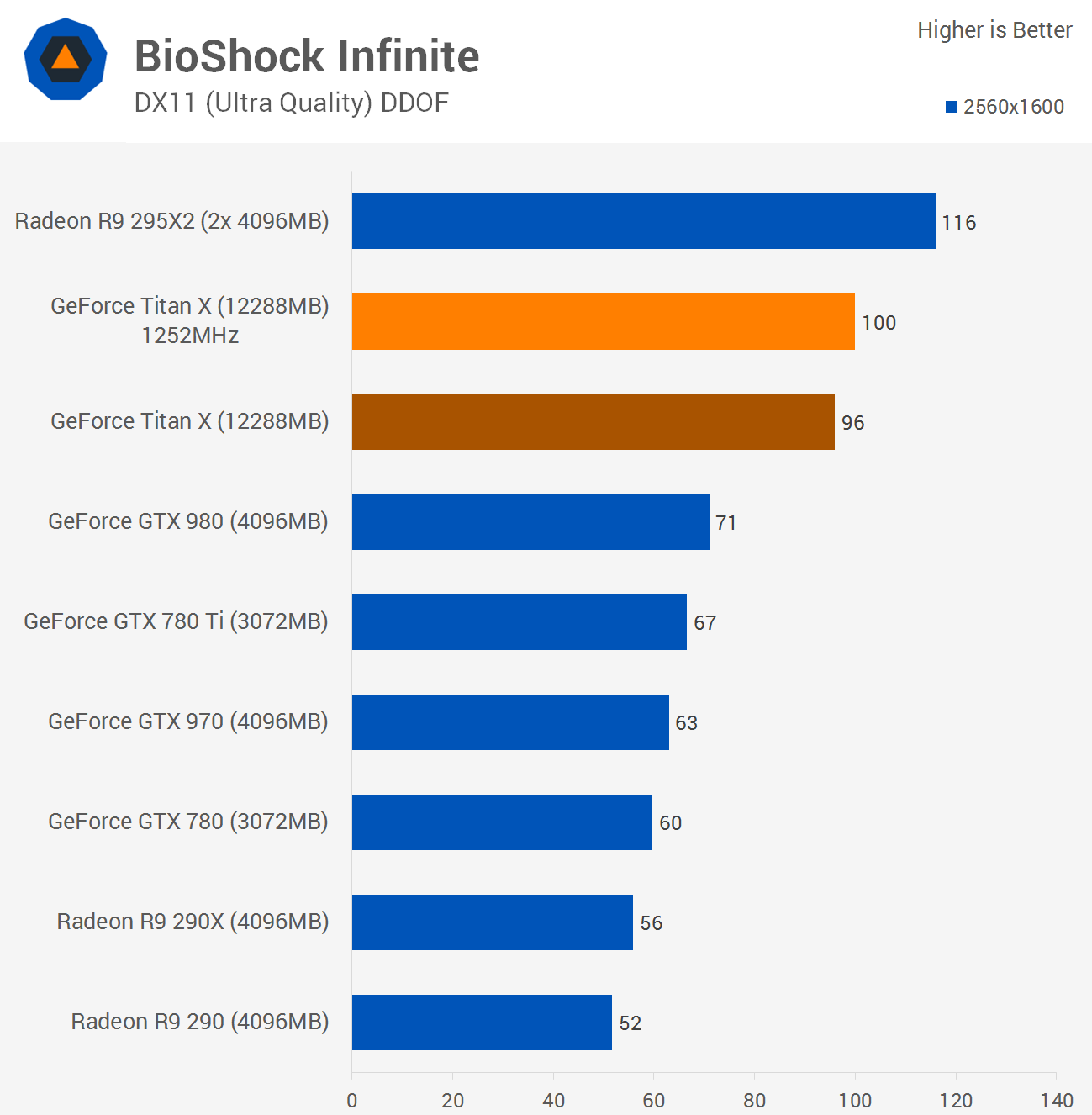

The GTX Titan X has no trouble with BioShock Infinite at 2560x1600 using the ultra-quality preset, delivering a highly playable 96fps, 35% faster than the GTX 980 and a whopping 71% faster than the R9 290X. Despite crushing the R9 290X, the GTX Titan X was still 17% slower than the R9 295X2.

Even at 4K the Titan X is able to deliver playable performance with its 49fps being 29% faster than the GTX 980, 58% faster than the R9 290X and 22% slower than the R9 295X2.

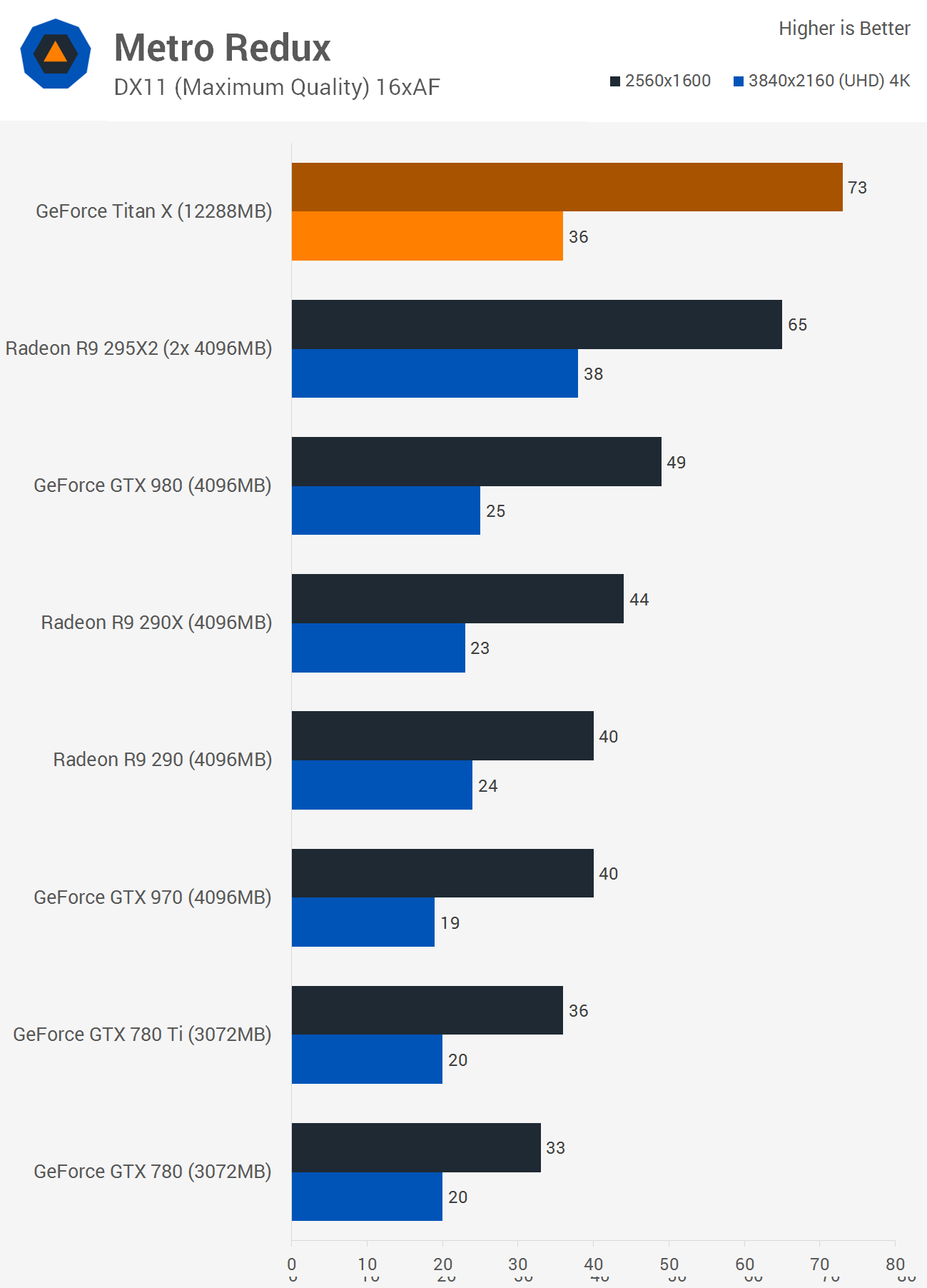

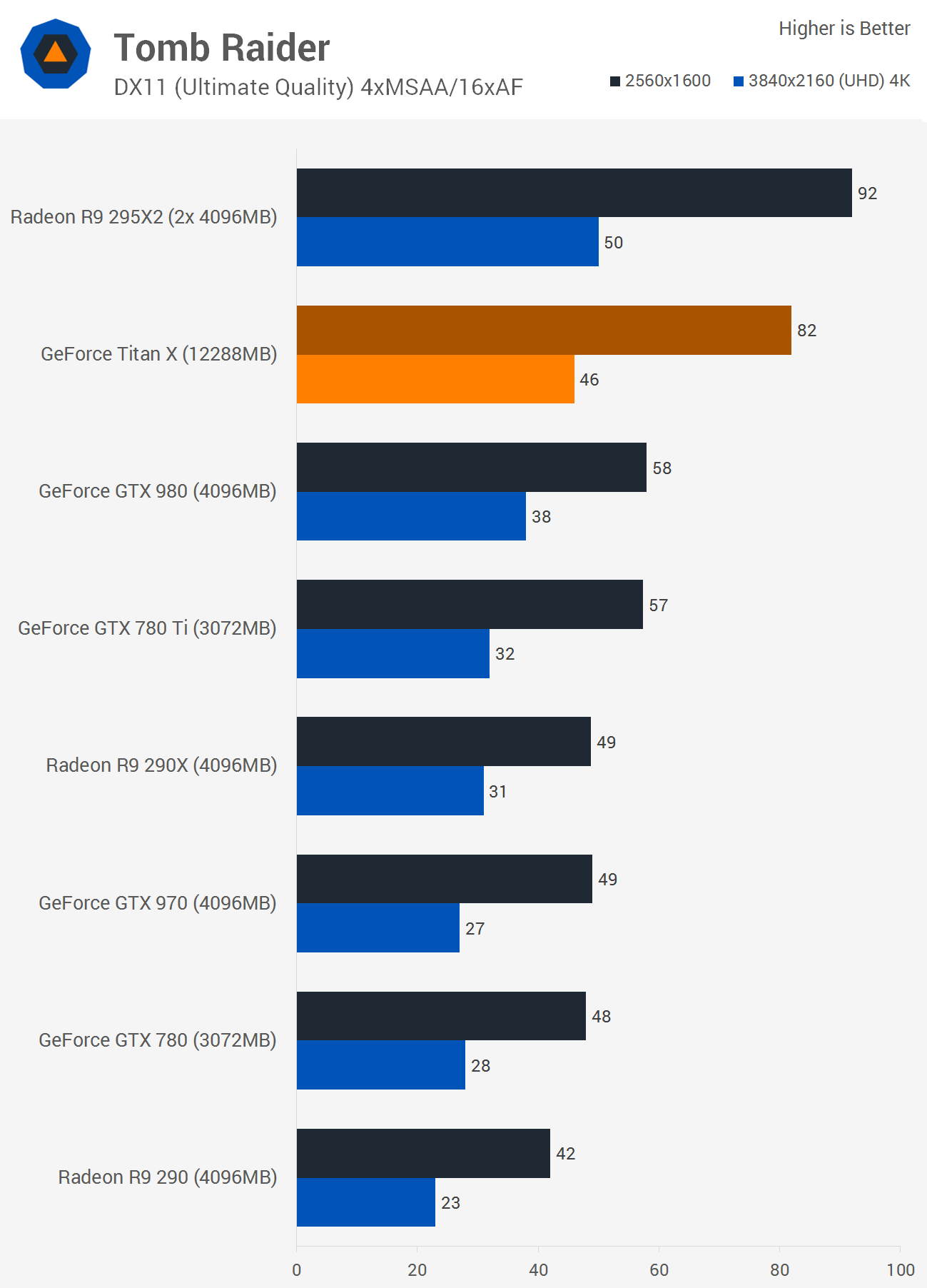

Benchmarks: Metro Redux, Tomb Raider

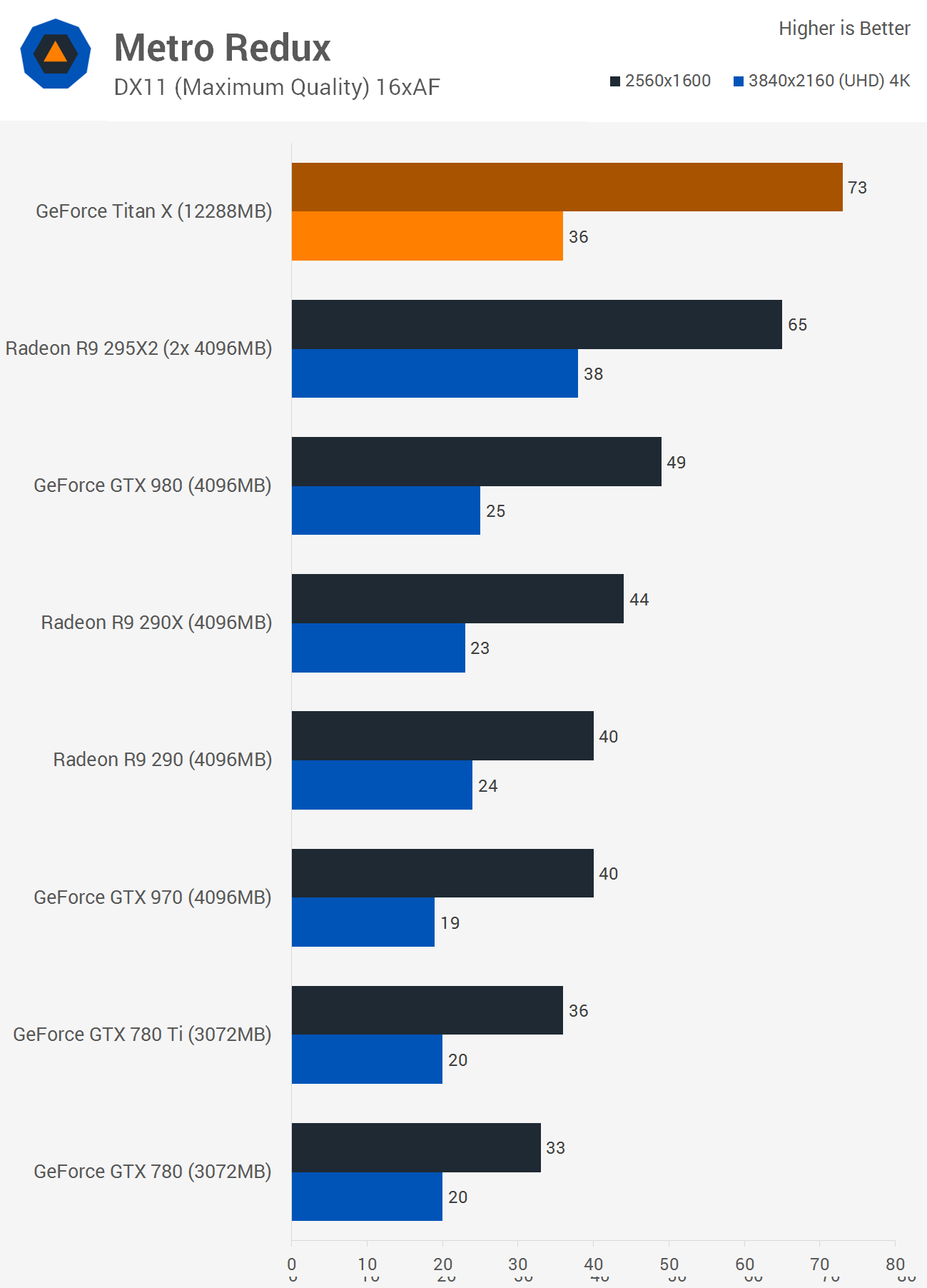

The GTX Titan X looks to be a beast when testing with Metro Redux as the R9 295X2 scales poorly at 2560x1600, allowing the Titan X to be 12% faster than AMD's dual-GPU solution in addition to topping the GTX 980 and R9 290X.

Testing at 4K showed that the GTX Titan X could deliver playable performance, if only just. With 36fps it was 44% faster than the GTX 980, 50% faster than the R9 290X and just 5% slower than the R9 295X2.

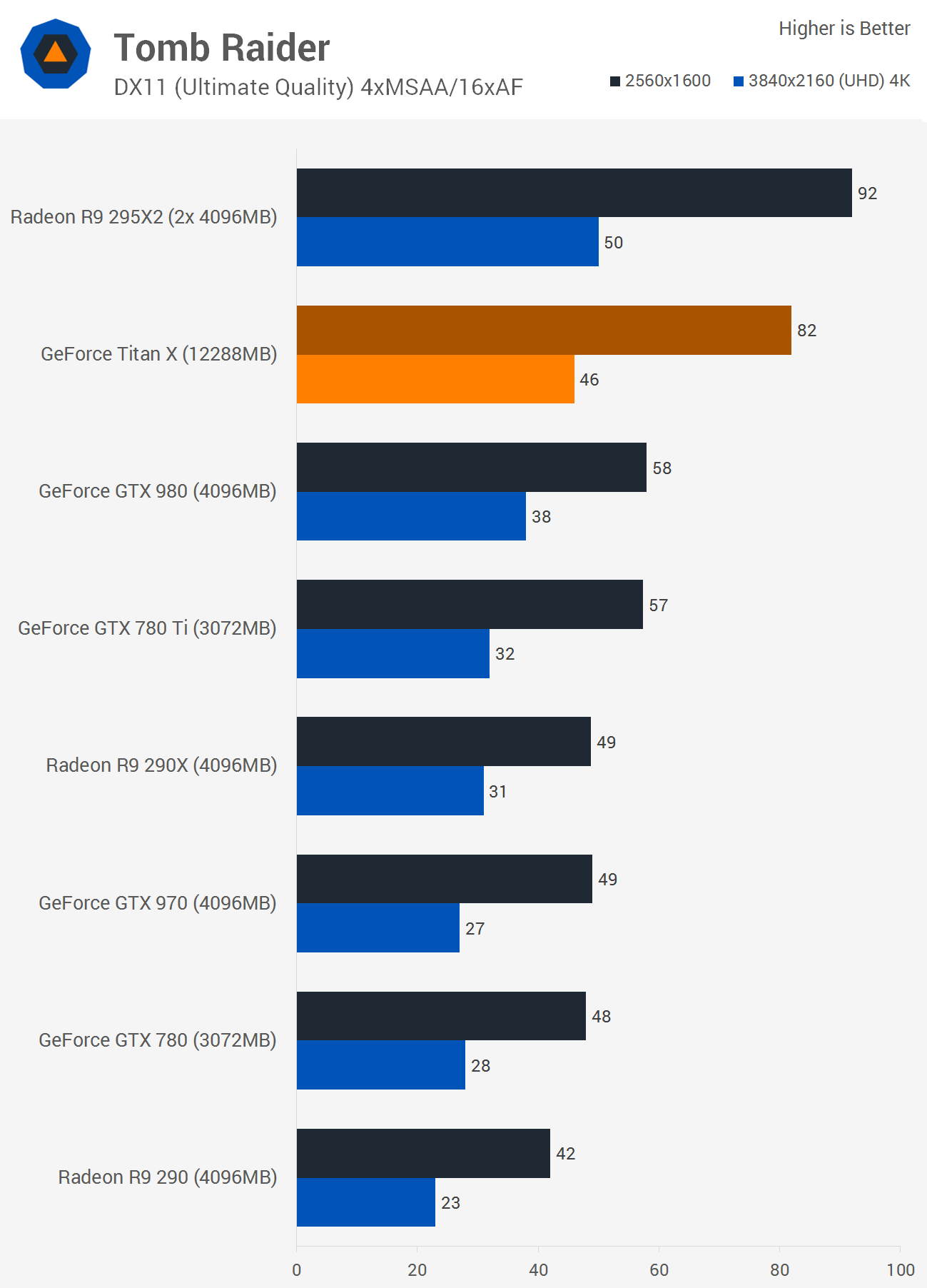

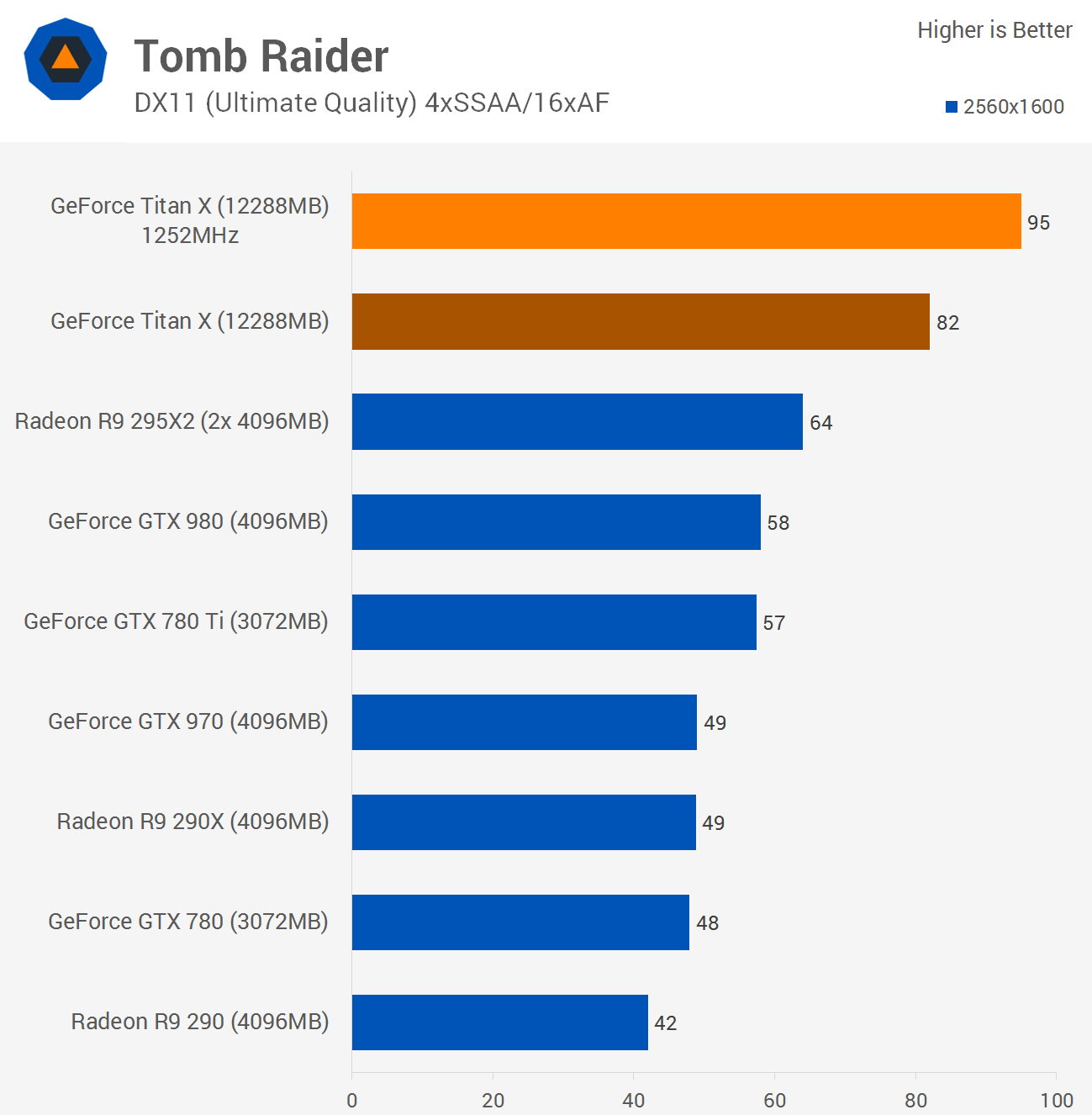

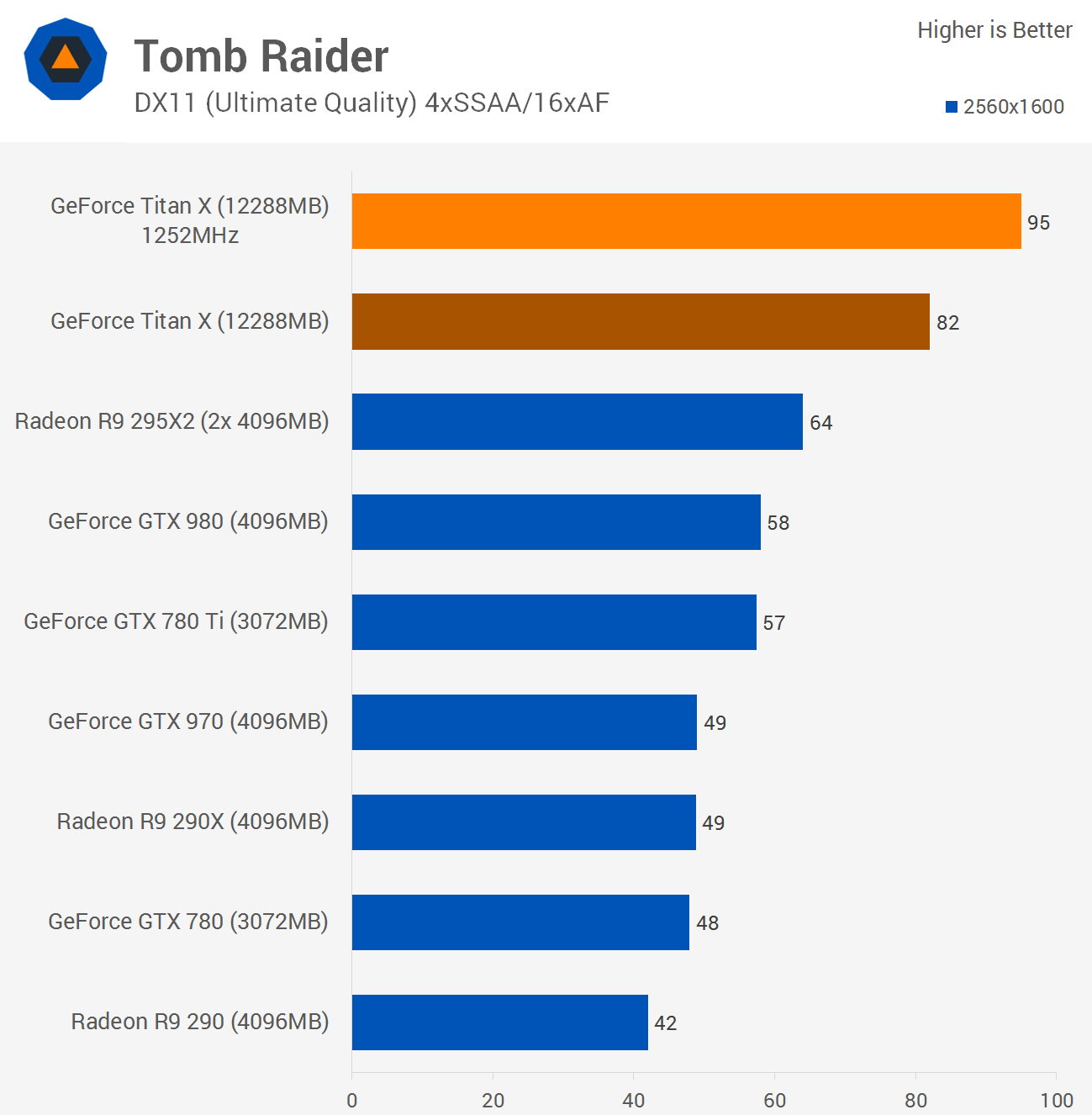

In Tomb Raider, the Titan X again dominated at 2560x1600 with 82fps, 28% faster than the R9 295X2 and 41% faster than the GTX 980. At 4K, the Titan X was 21% faster than the GTX 980, but only 18% faster than the R9 290X and 8% slower than the R9 295X2.

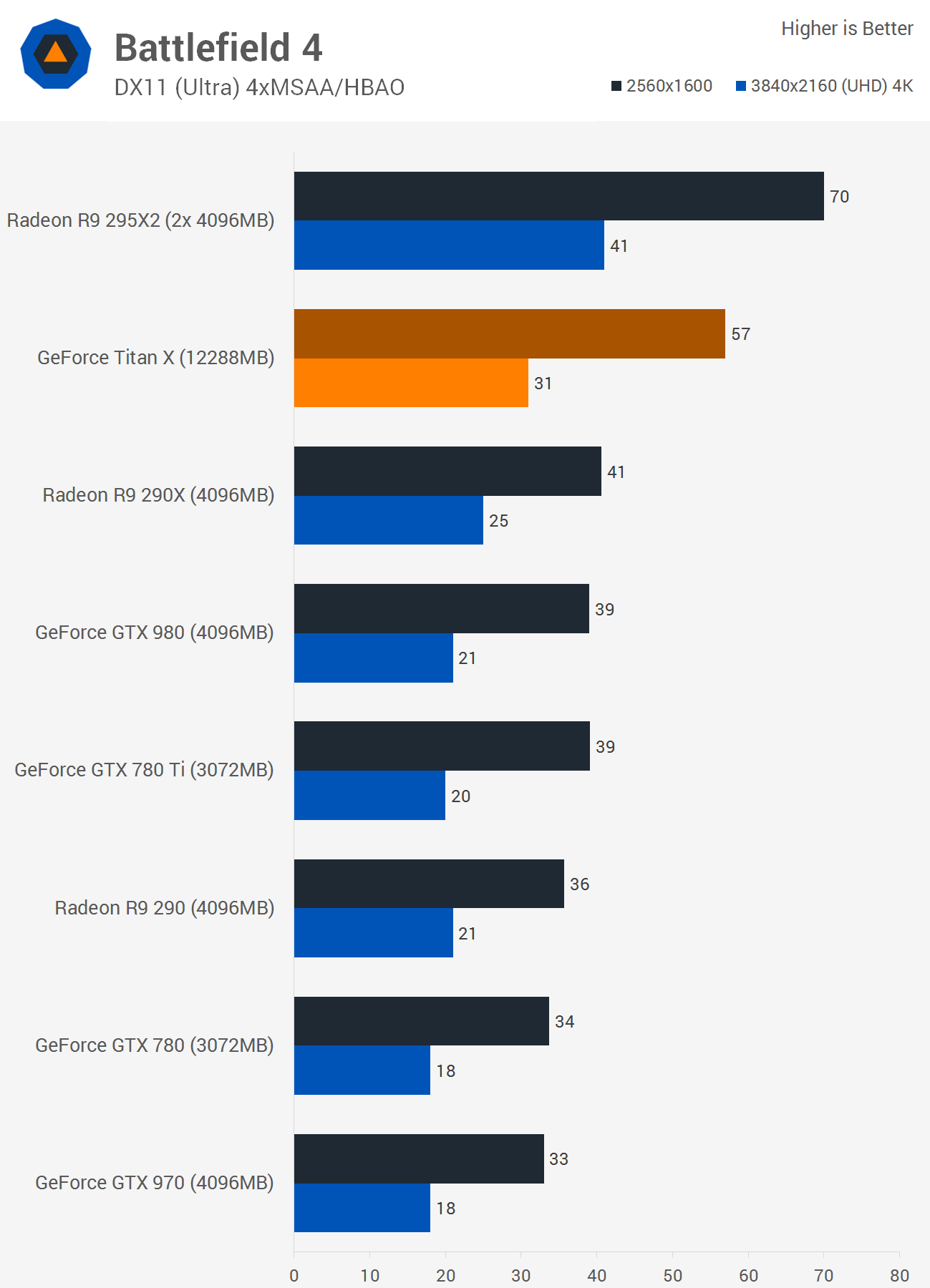

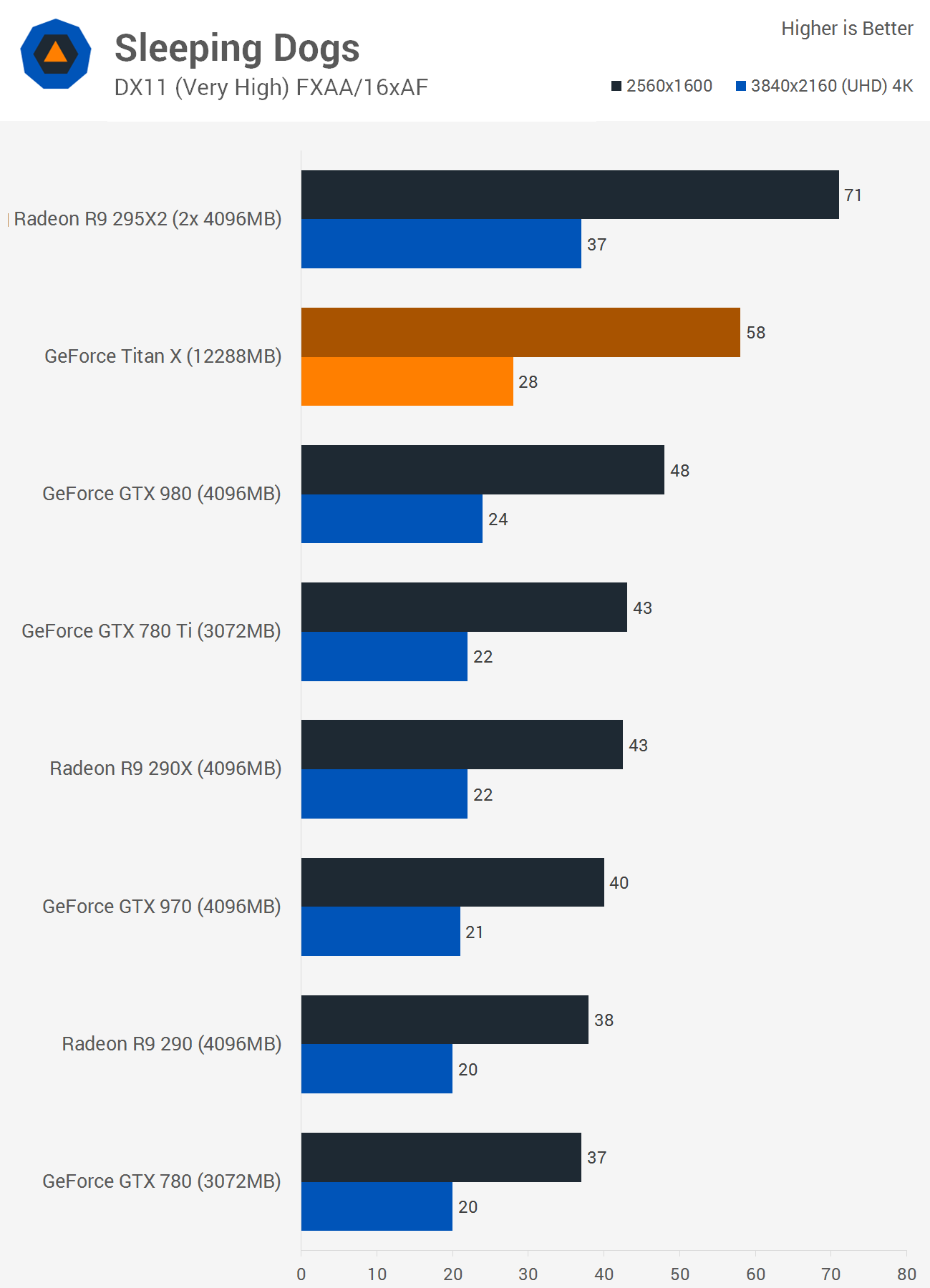

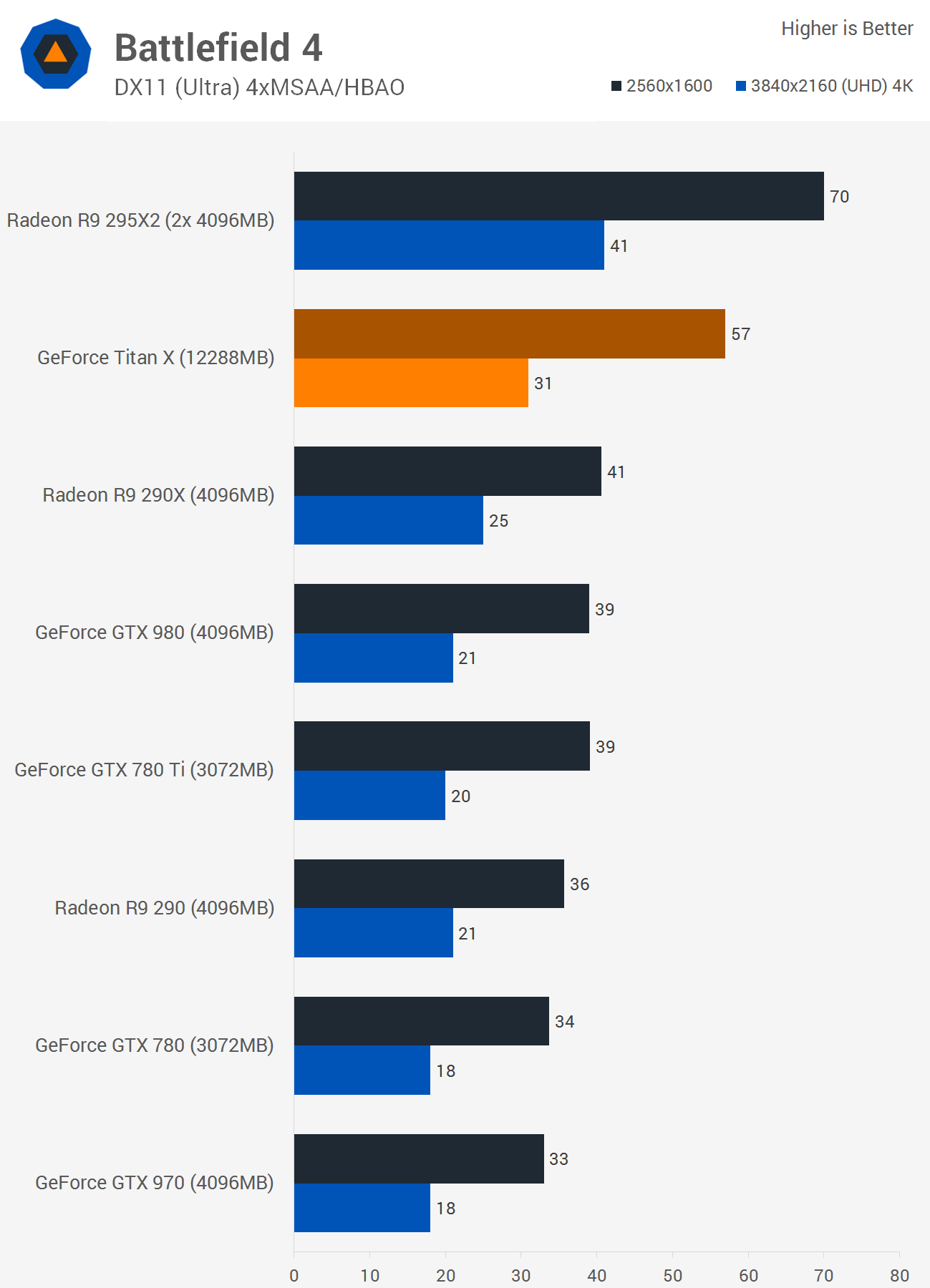

Benchmarks: Sleeping Dogs, Battlefield 4

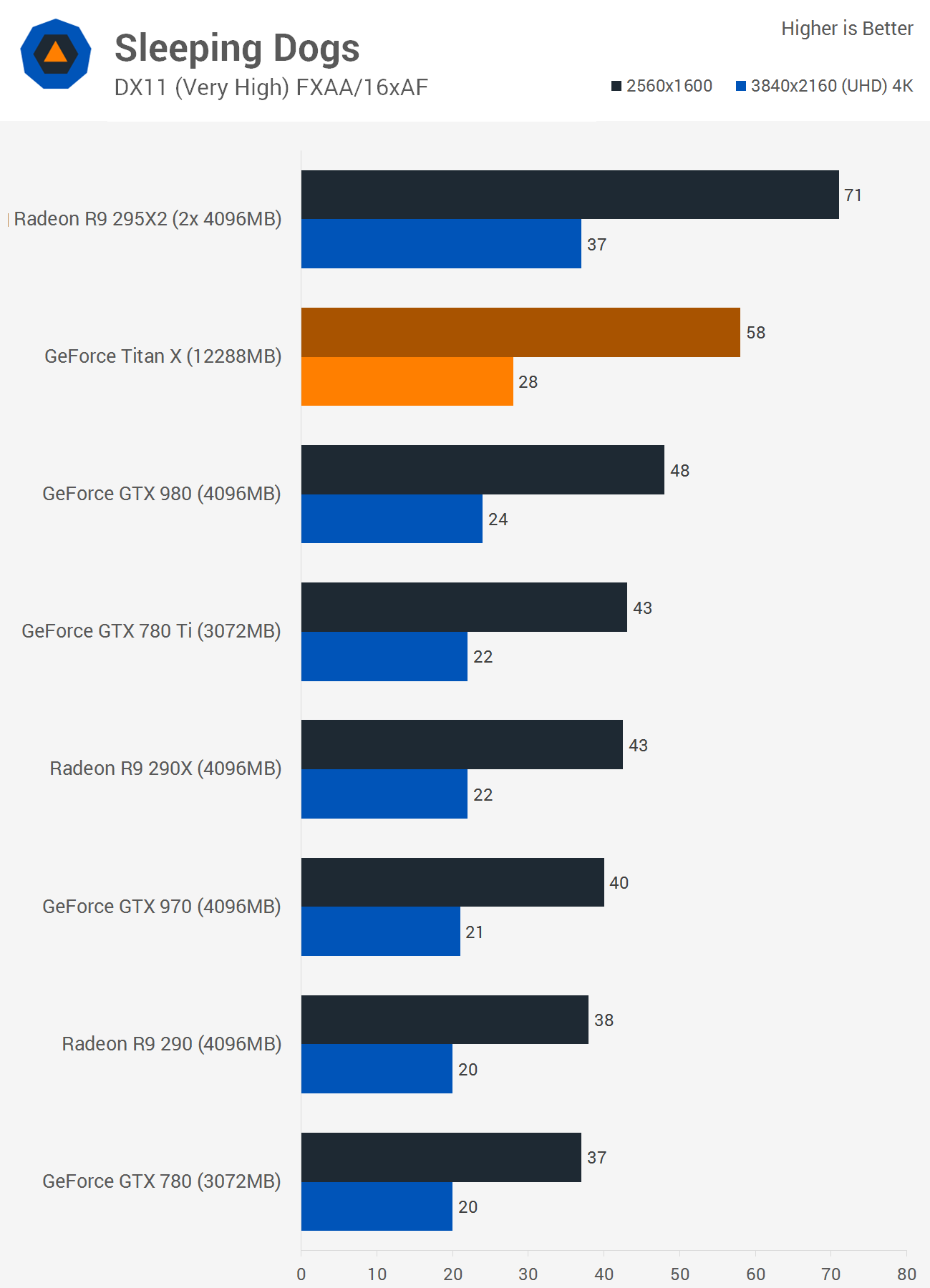

In Sleeping Dogs, the Titan X rendered an impressive 58fps at 2560x1600 for a 21% lead over the GTX 980 and 35% over the R9 290X. Compared to the R9 295X2, Nvidia's newcomer was 18% slower.

Increasing the resolution reduced the average frame rate of the GTX Titan X to just 28fps, 17% faster than the GTX 980 and 27% faster than the R9 290X.

In Battlefield 4, the Titan X averaged 57fps at 2560x1600, 19% slower than the R9 295X2 but 46% faster than the GTX 980 and 39% faster than the R9 290X. At 4K the Titan X dropped to 31fps and while this made it 48% faster than the GTX 980, it was just 24% faster than the R9 290X.

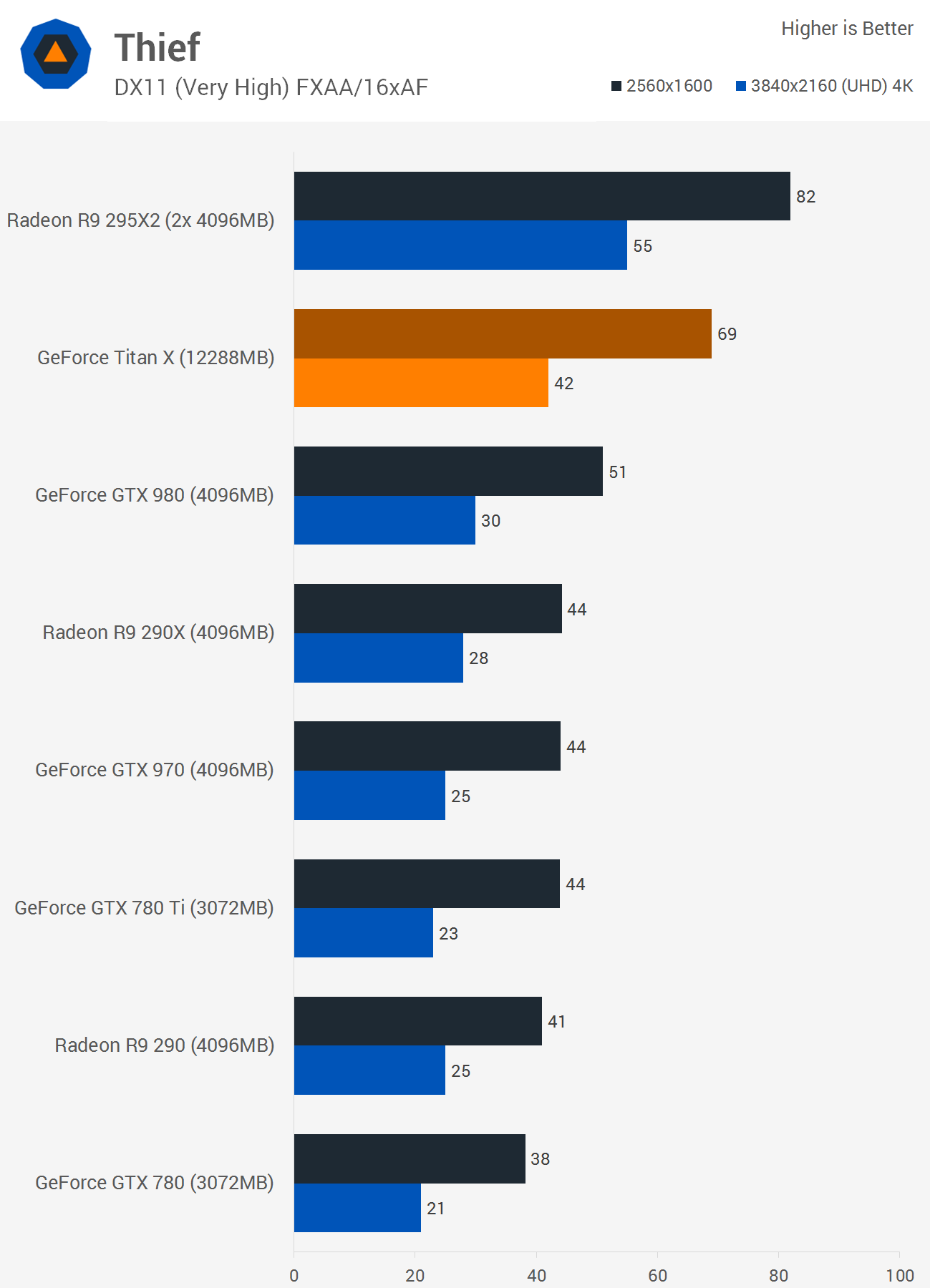

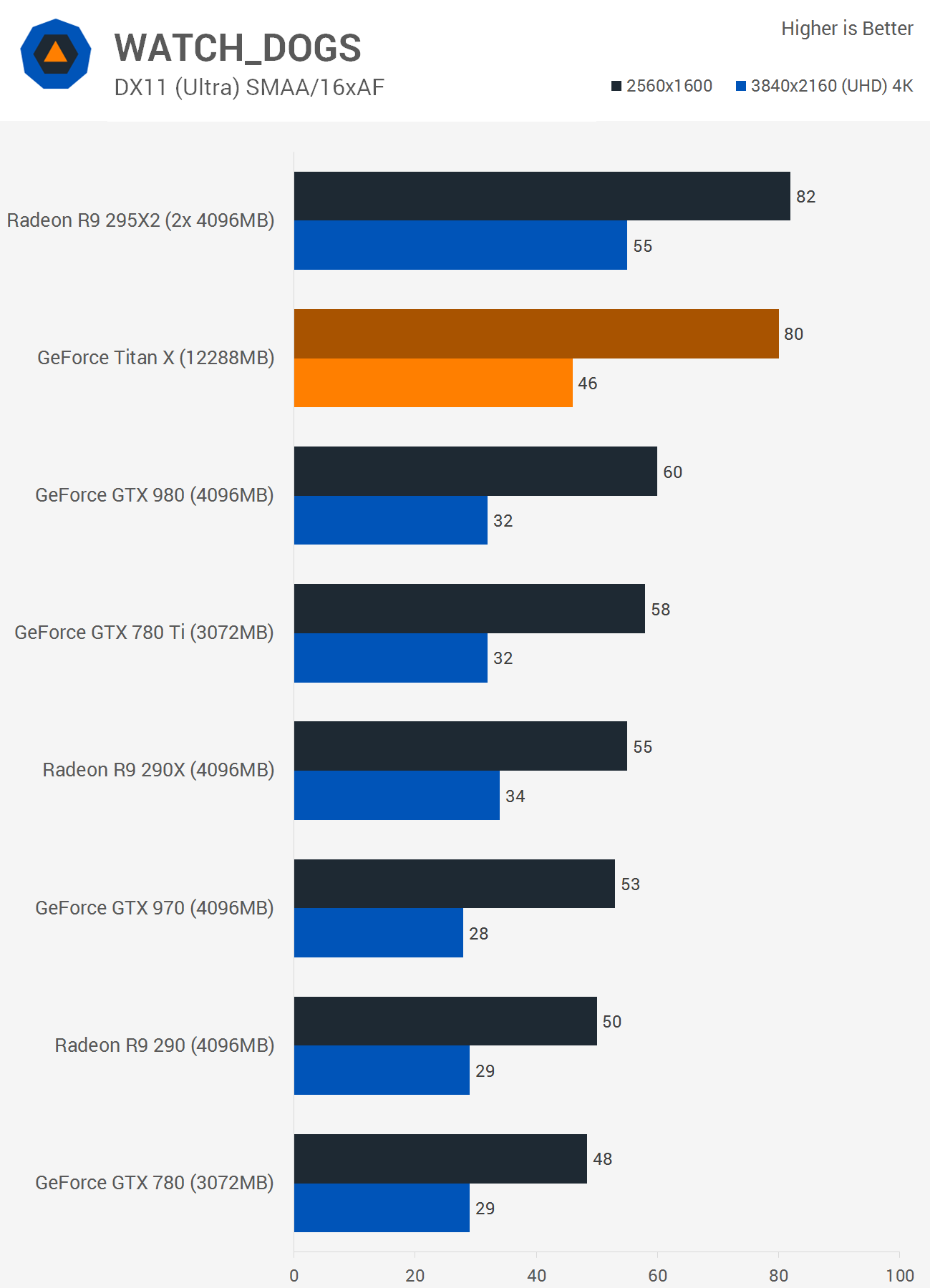

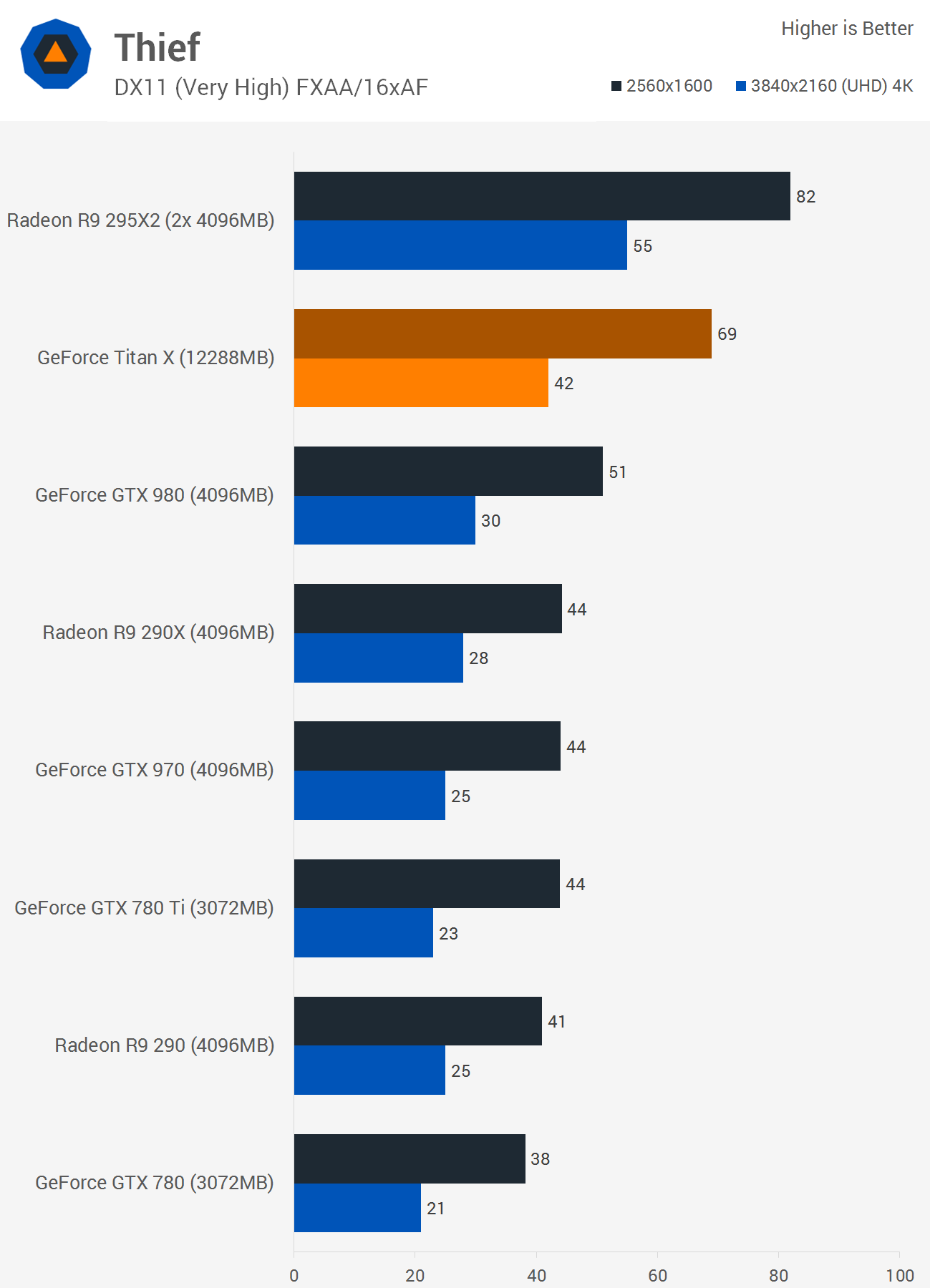

Benchmarks: Thief, Watch Dogs

In Thief at 2560x1600, the Titan X was 35% faster than the GTX 980 and 57% faster than the R9 290X, but 16% slower than the R9 295X2. At 4K we find that the Titan X is 40% faster than the GTX 980 and 50% faster than the R9 290X.

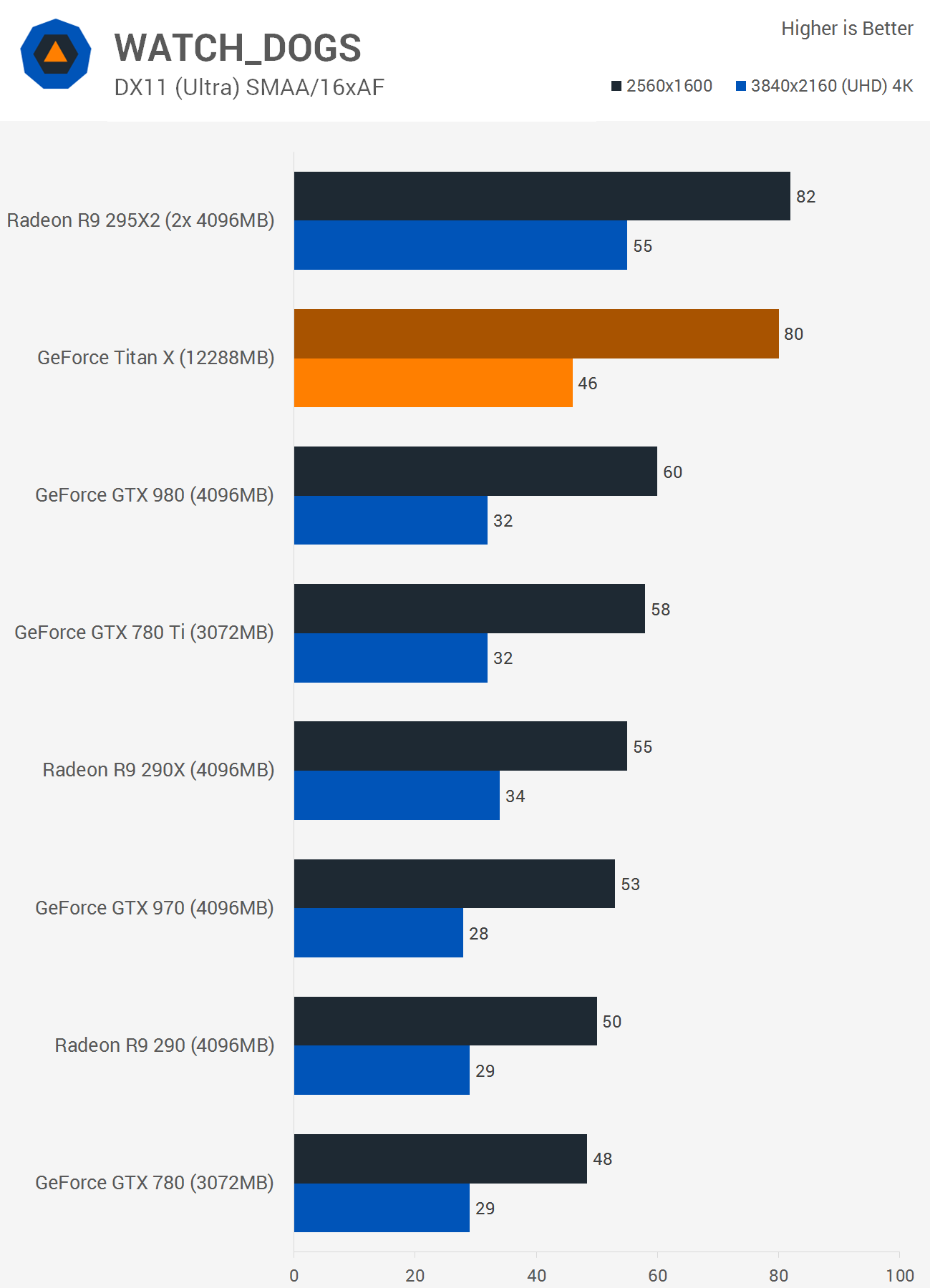

In Watch Dogs, the Titan X delivered a silky-smooth 80fps at 2560x1600, making it just 2% slower than the R9 295X2 while it was 33% faster than the GTX 980 and 45% faster than the R9 290X. After cranking things up to 3840x2160 the Titan X was 44% faster than the GTX 980, 35% faster than the R9 290X and 16% slower than the R9 295X2.

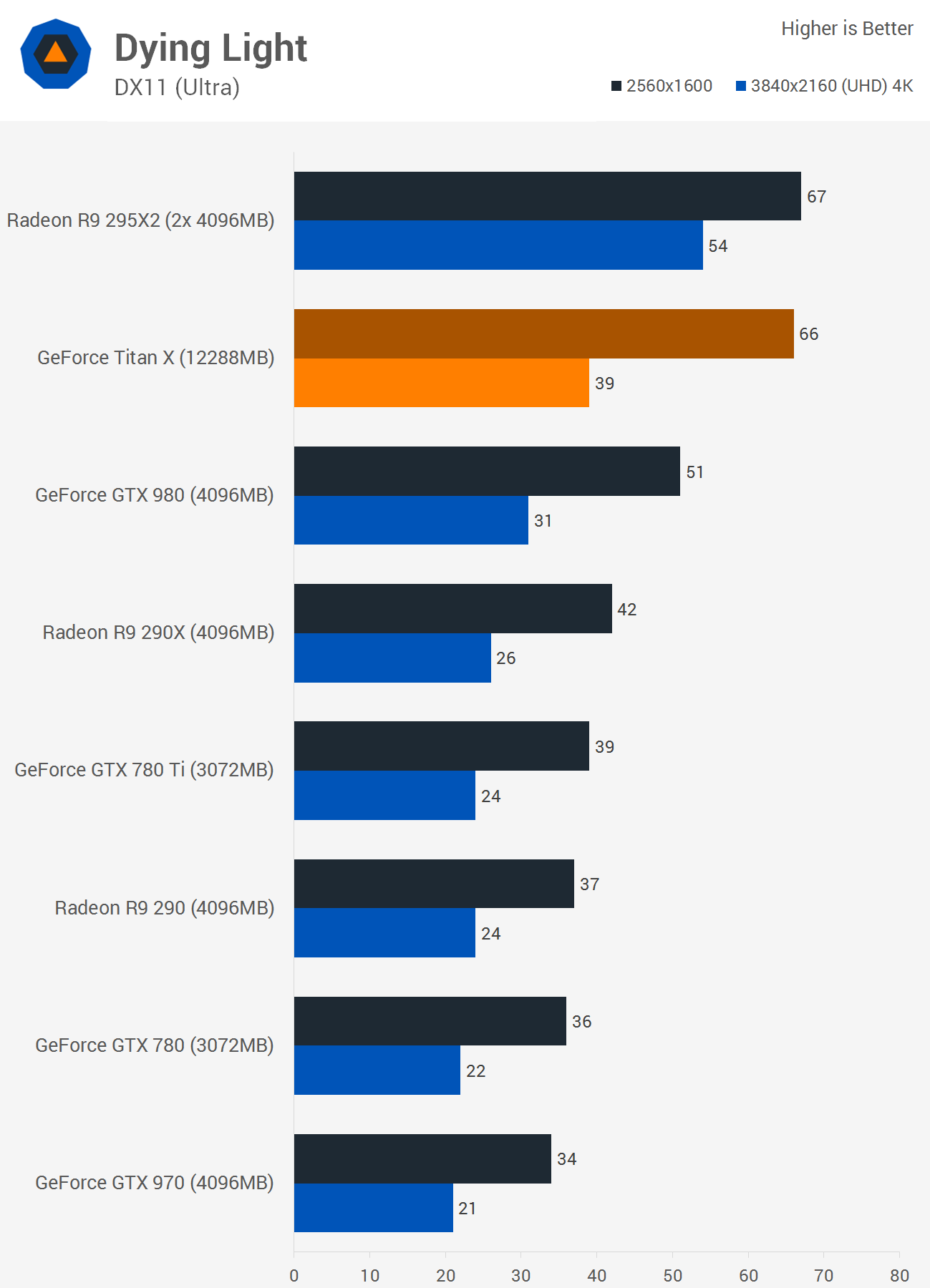

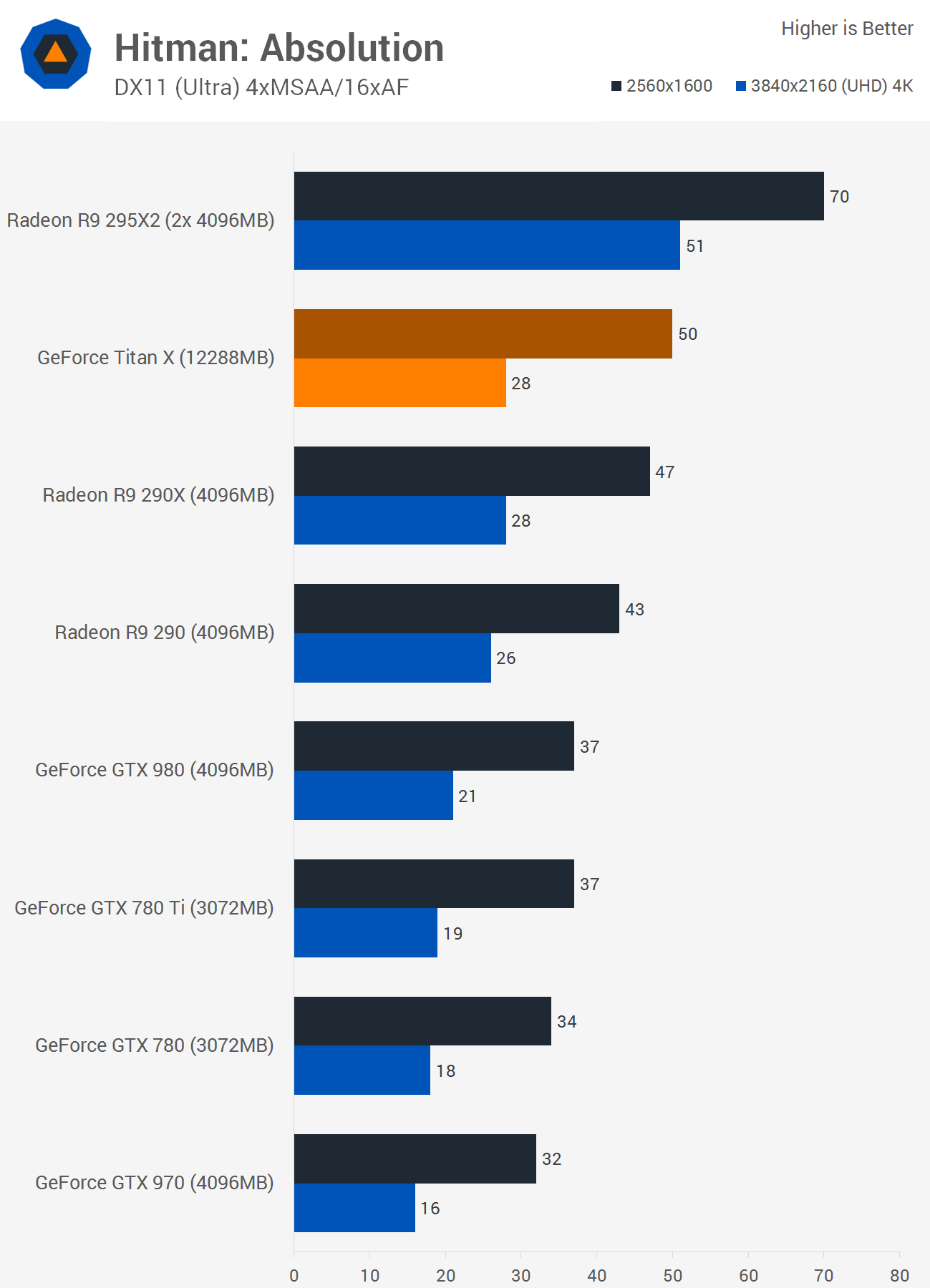

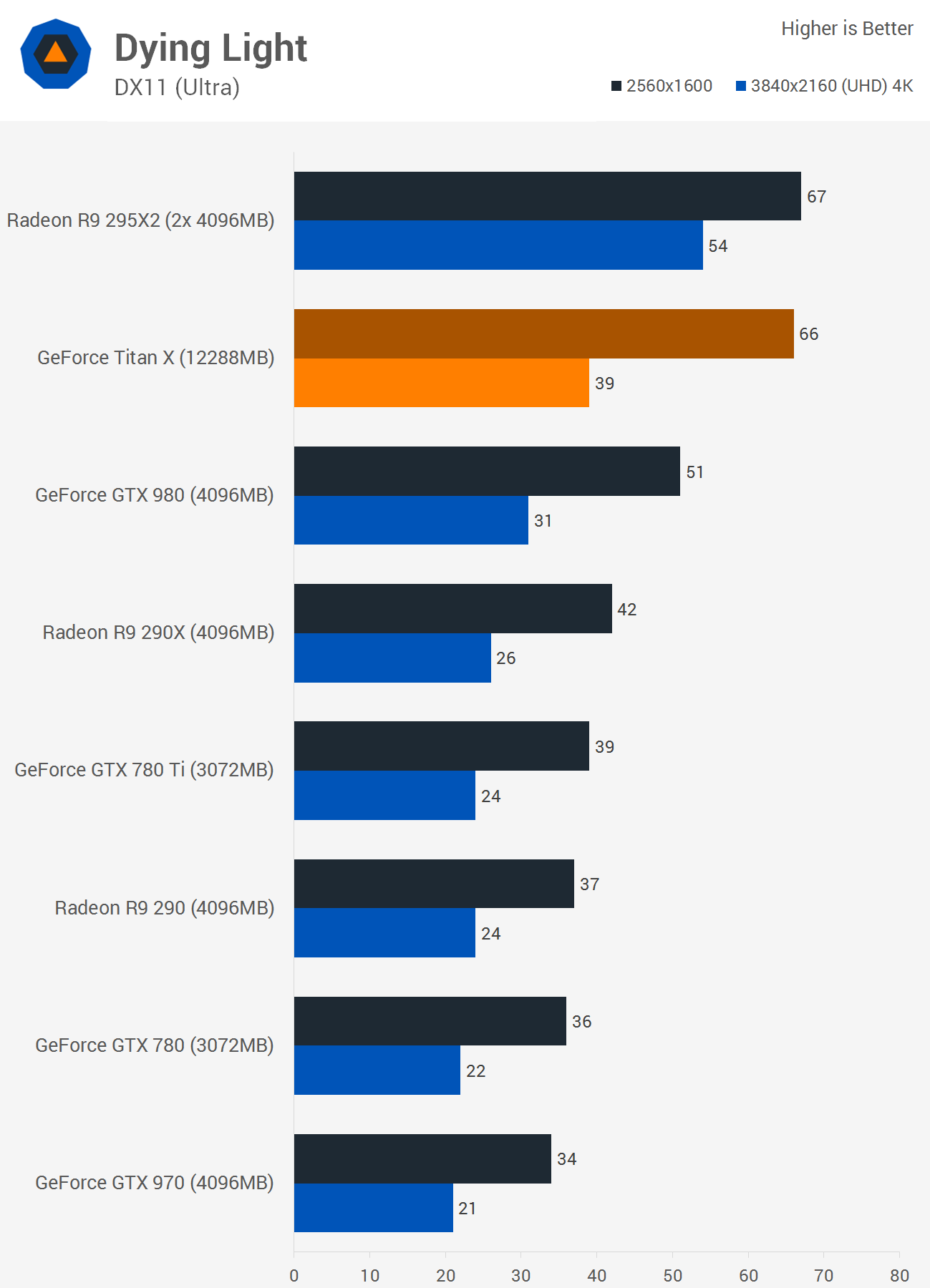

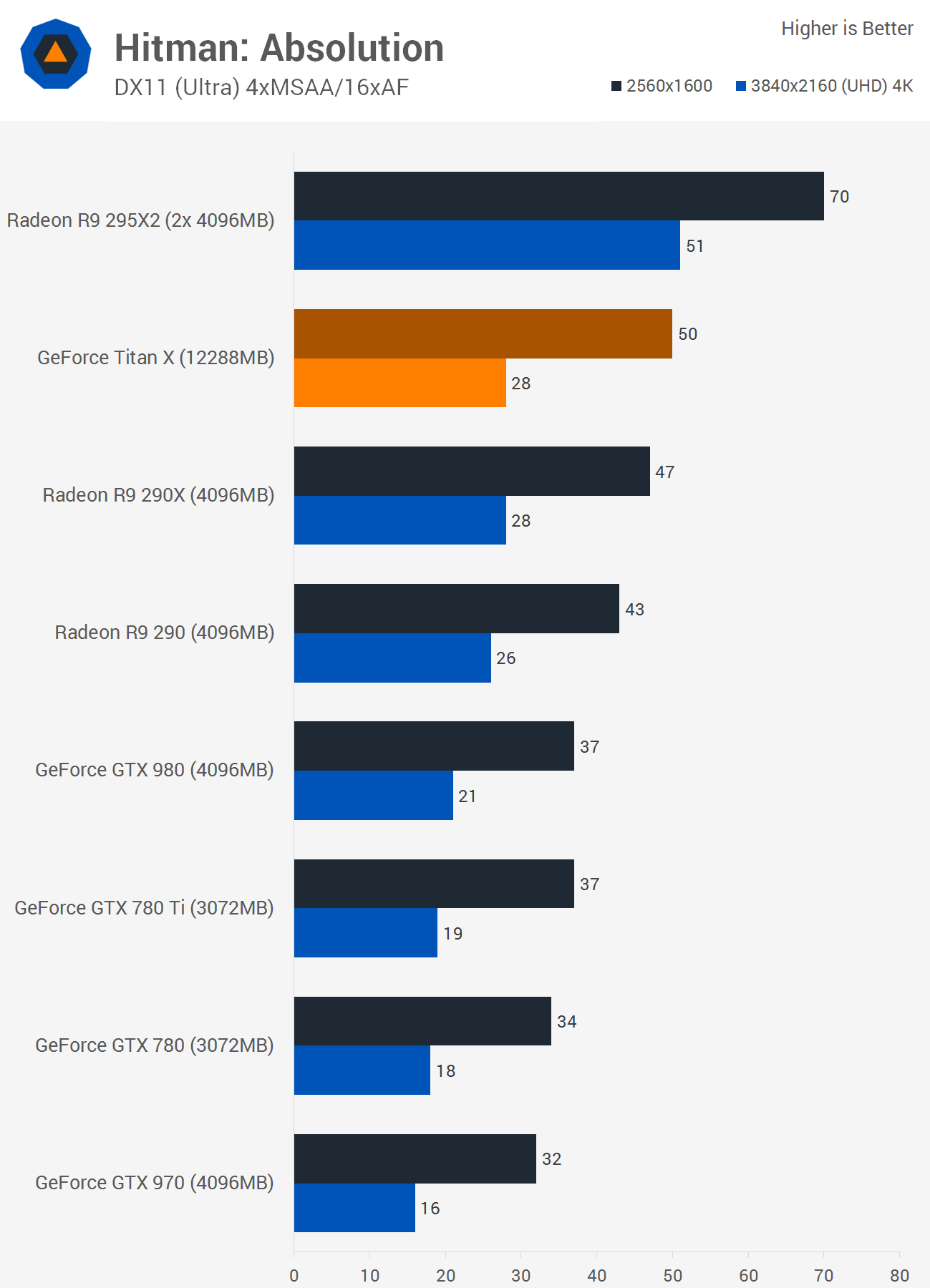

Benchmarks: Dying Light, Hitman: Absolution

In Dying Light, we once again see that the Titan X is roughly able to match the dual-GPU R9 295X2 at 2560x1600 while outpacing the GTX 980 and R9 290X by 29% and 57%, respectively. Similar margins are seen at 4K as the Titan X was 26% faster than the GTX 980 and 50% faster than the R9 290X but 28% slower than the R9 295X2.

In Hitman: Absolution, the Titan X might have been 35% faster than the GTX 980 with an average of 50fps, but this also meant that it was just 6% faster than the R9 290X and 29% slower than the R9 295X2. Things didn't improve as we increased the resolution. At 4K the Titan X was only able to match the R9 290X, while it was 45% slower than the R9 295X2.

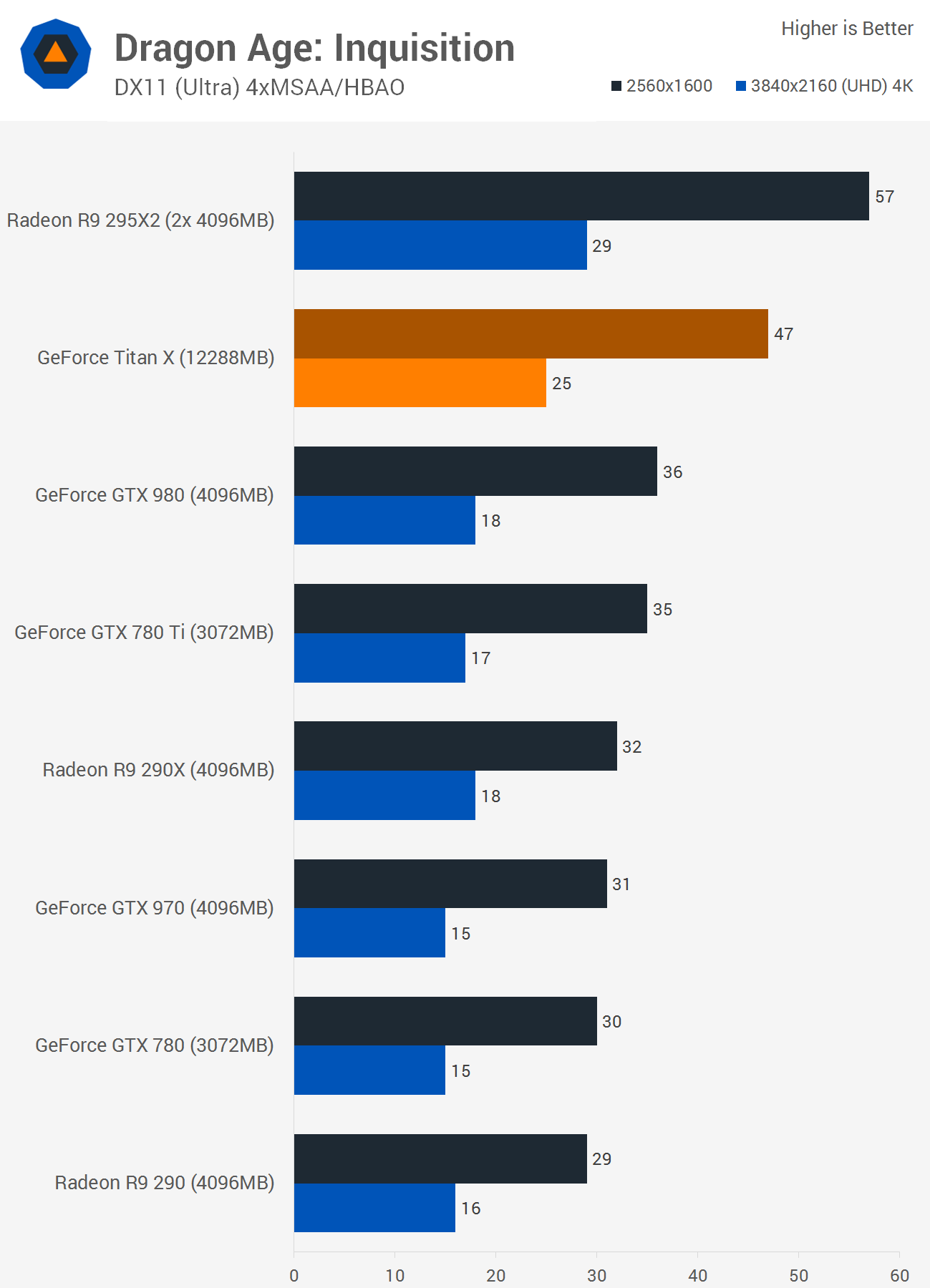

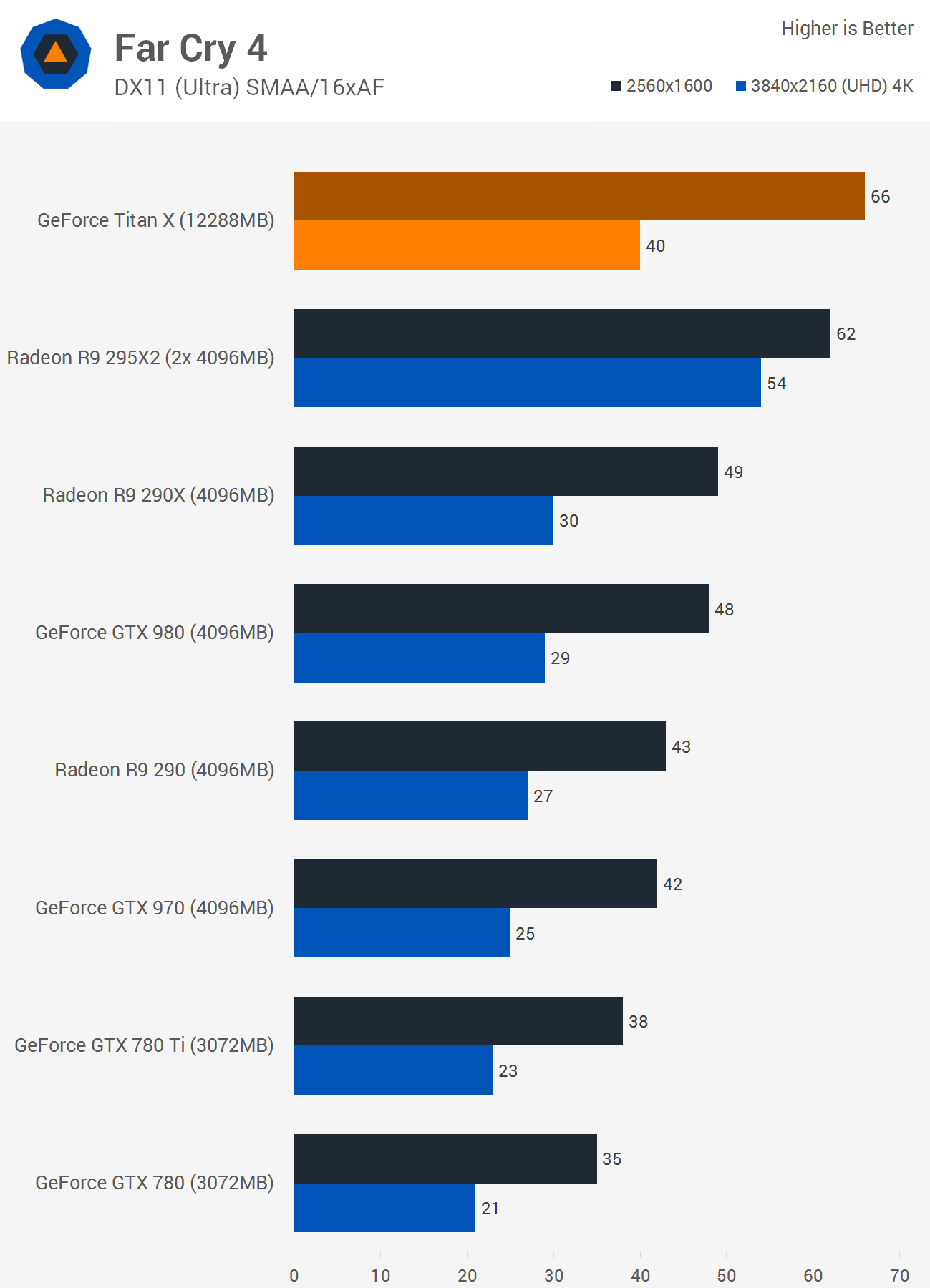

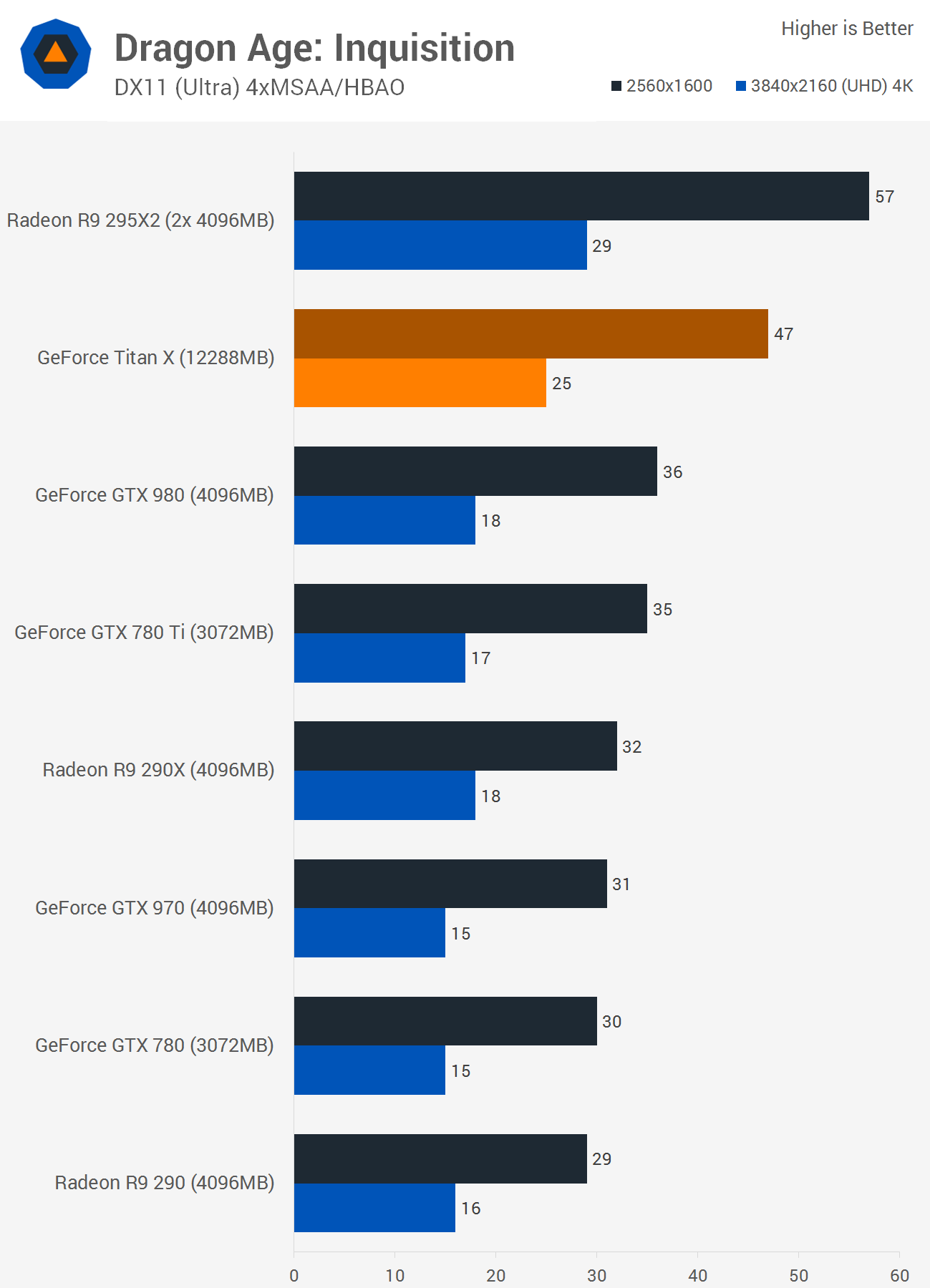

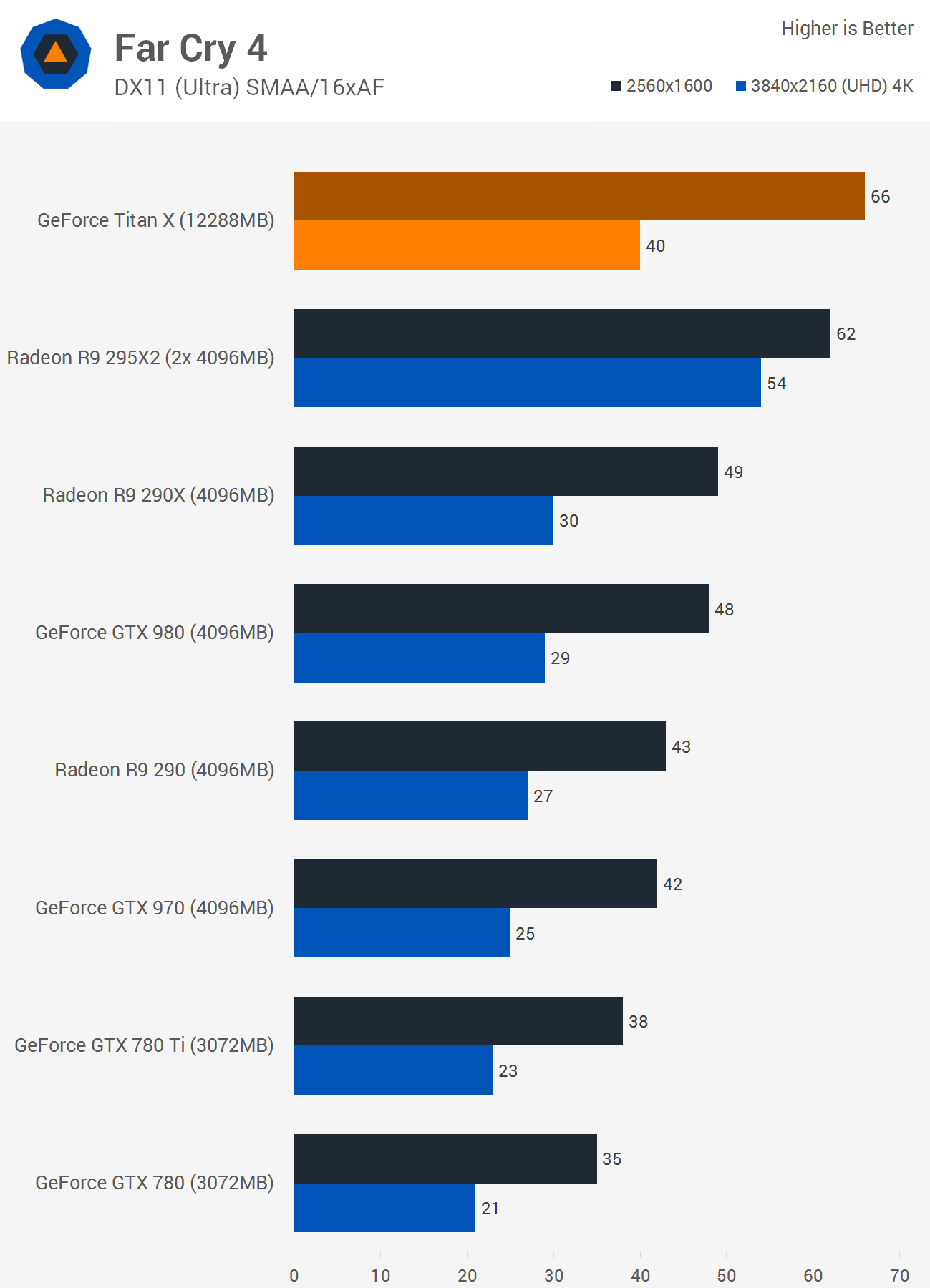

Benchmarks: Dragon Age: Inquisition, Far Cry 4

The Titan X is good for 47fps at 2560x1600 in Dragon Age: Inquisition, making it 31% faster than the GTX 980 and 47% faster than the R9 290X, while it trailed the R9 295X2 by an 18% margin. Upping the resolution to 4K didn't change things too much as the Titan X was 39% faster than both the GTX 980 and R9 290X while being 14% slower than the R9 295X2.

In Far Cry 4, for the fifth time we see poor Crossfire scaling at 2560x1600 from the R9 295X2, allowing the GTX Titan X to deliver 6% more performance along with being 38% faster than the GTX 980 and 35% faster than the R9 290X.

Increasing the resolution did allow the R9 295X2 to get away as the GTX Titan X became 26% slower. Still it maintained strong leads over the GTX 980 and R9 290X.

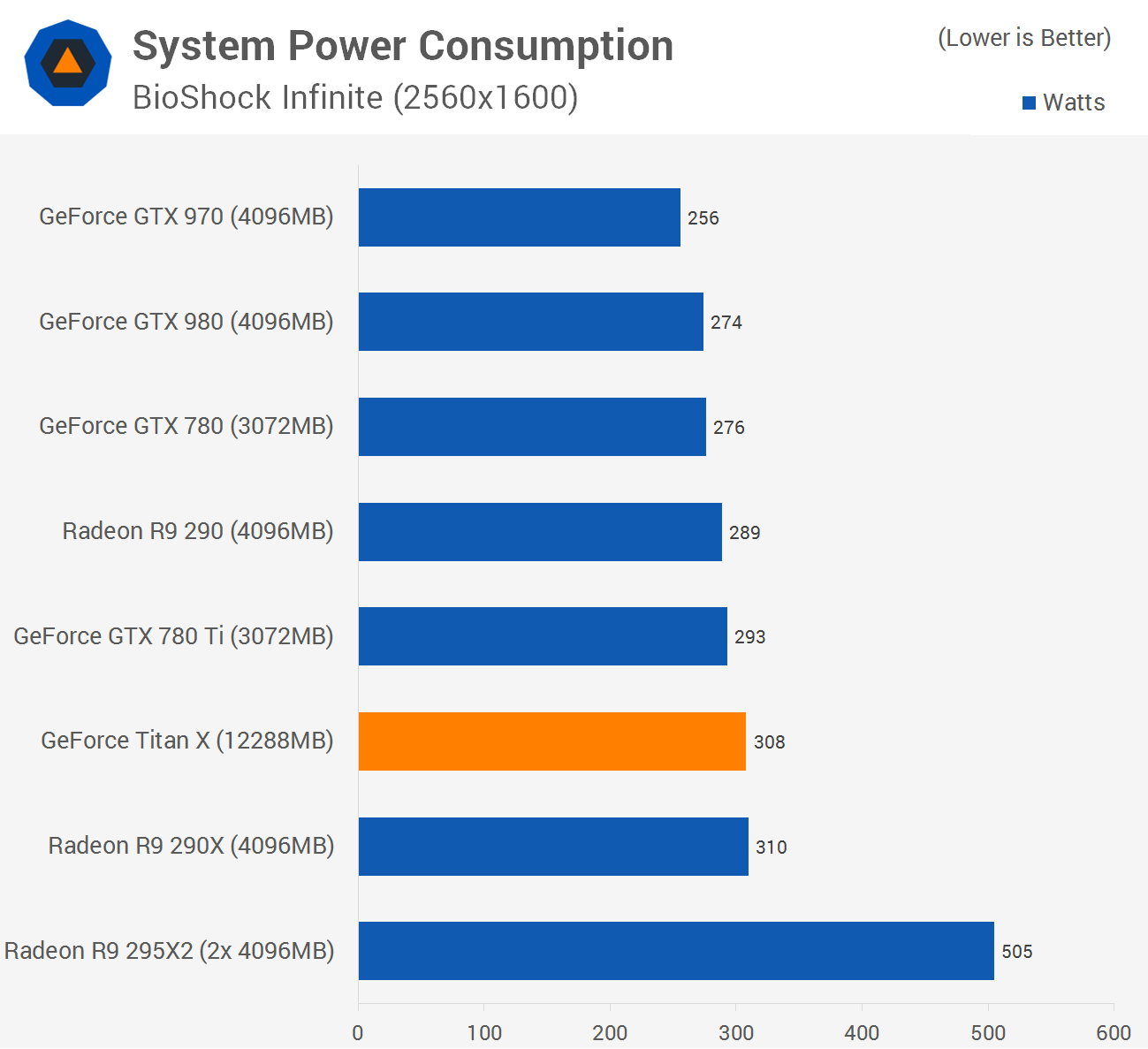

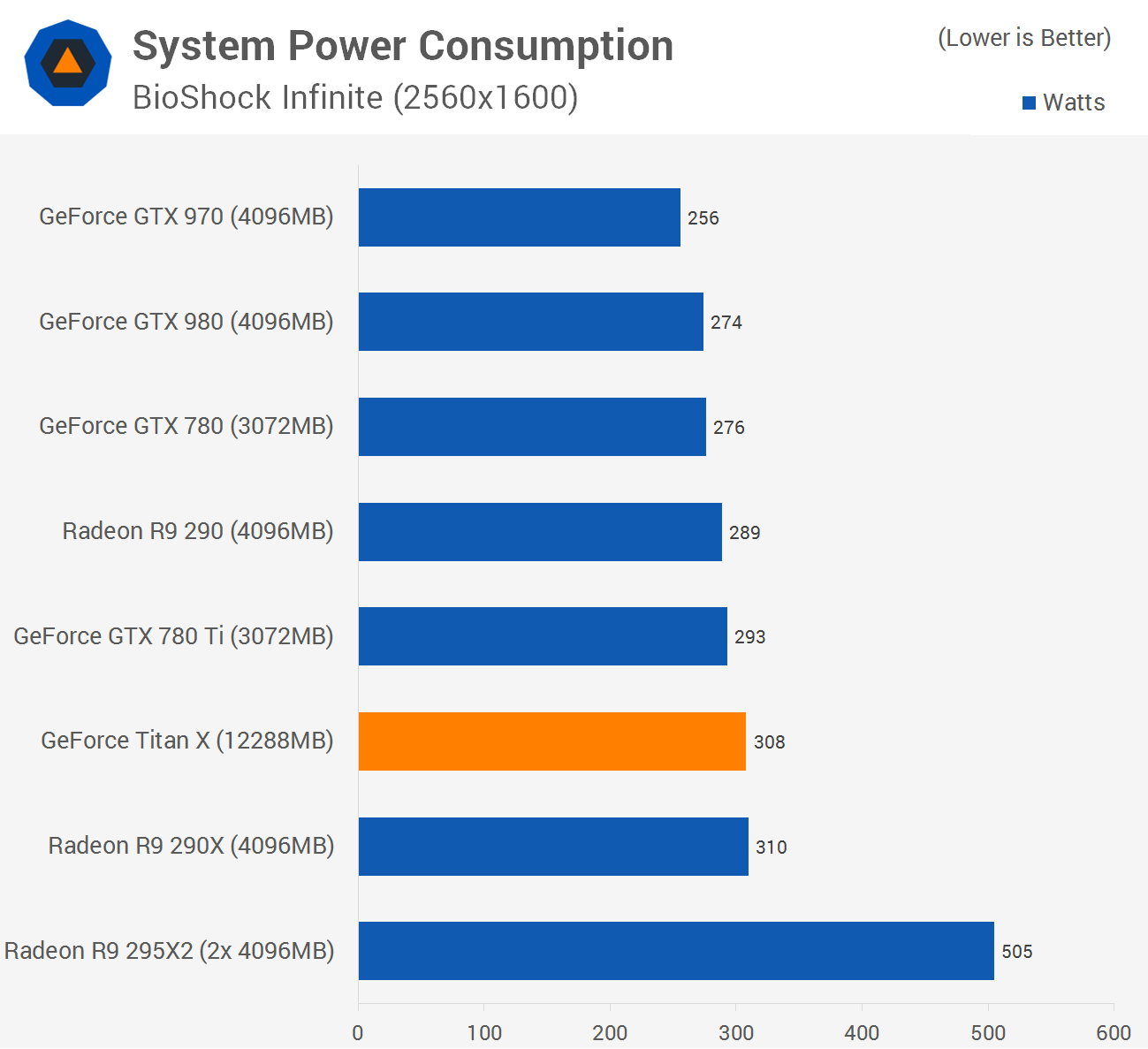

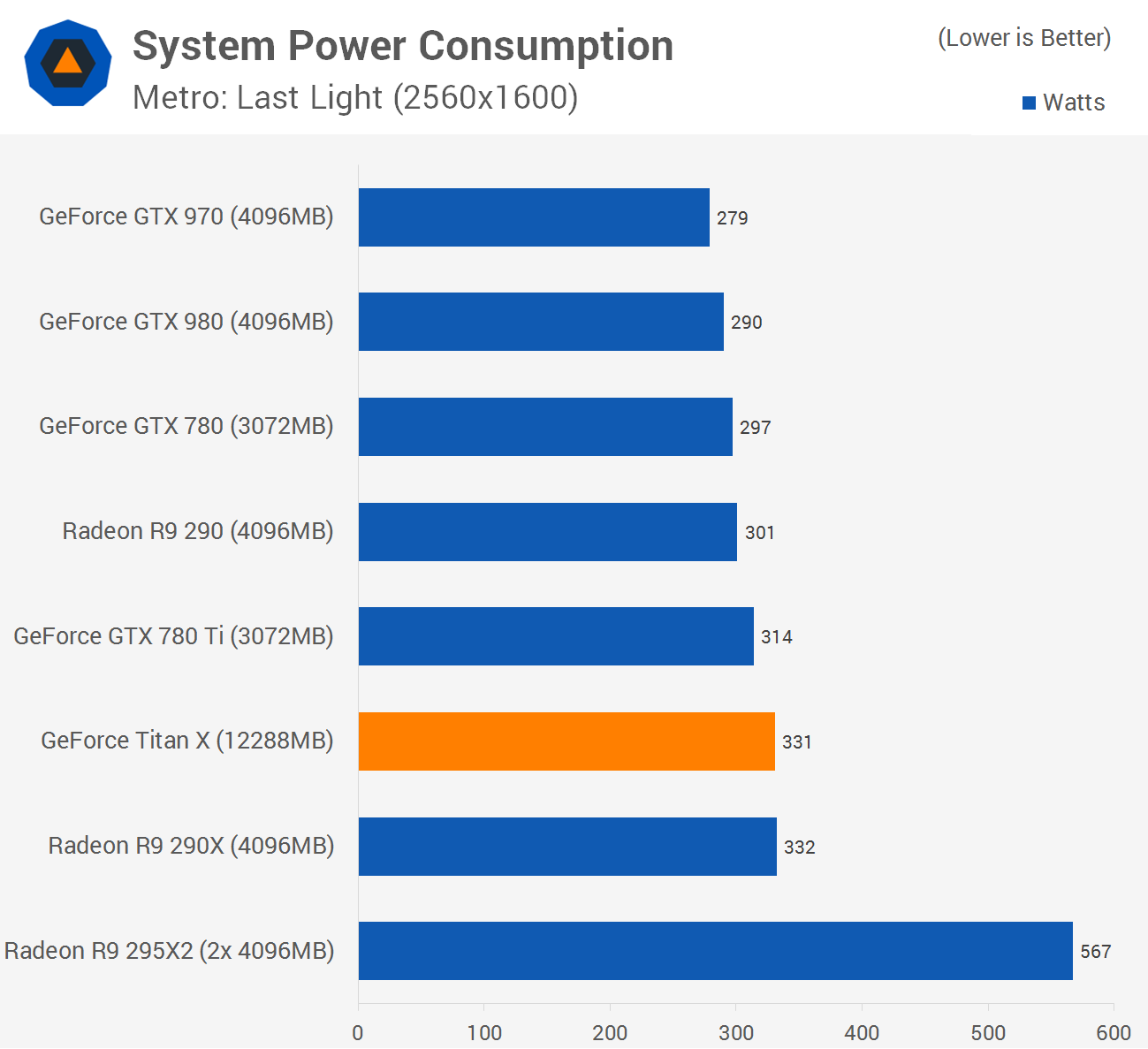

Power Consumption & Temperatures

Considering that the Titan X delivered 71% more performance than the R9 290X in BioShock, it's amazing to see that their power consumption is roughly the same.

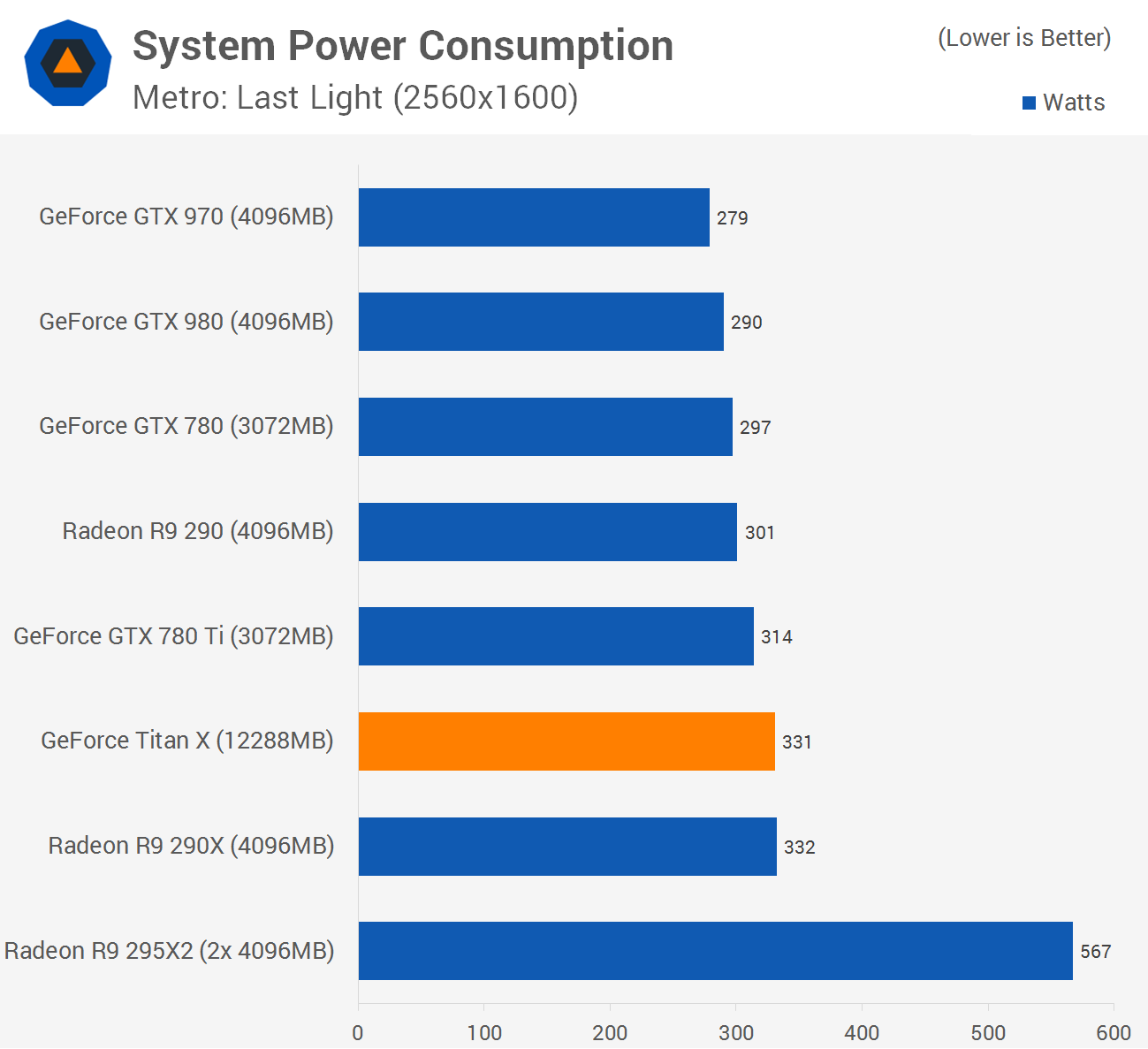

The Titan X was 66% faster than the R9 290X in Metro Redux and although we used Metro: Last Light for the power testing, it is incredible once again to see that both GPUs used roughly the same amount of power.

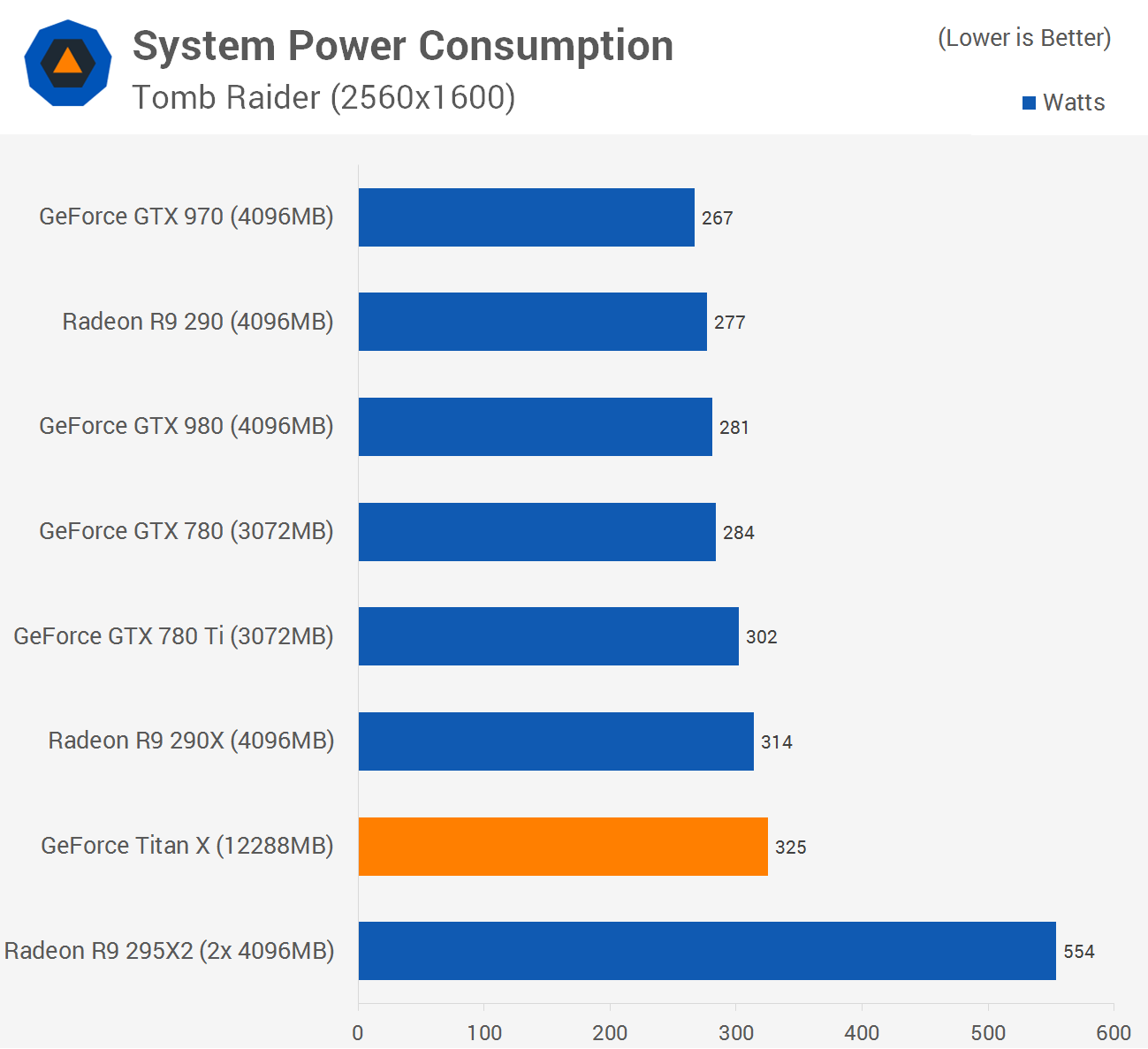

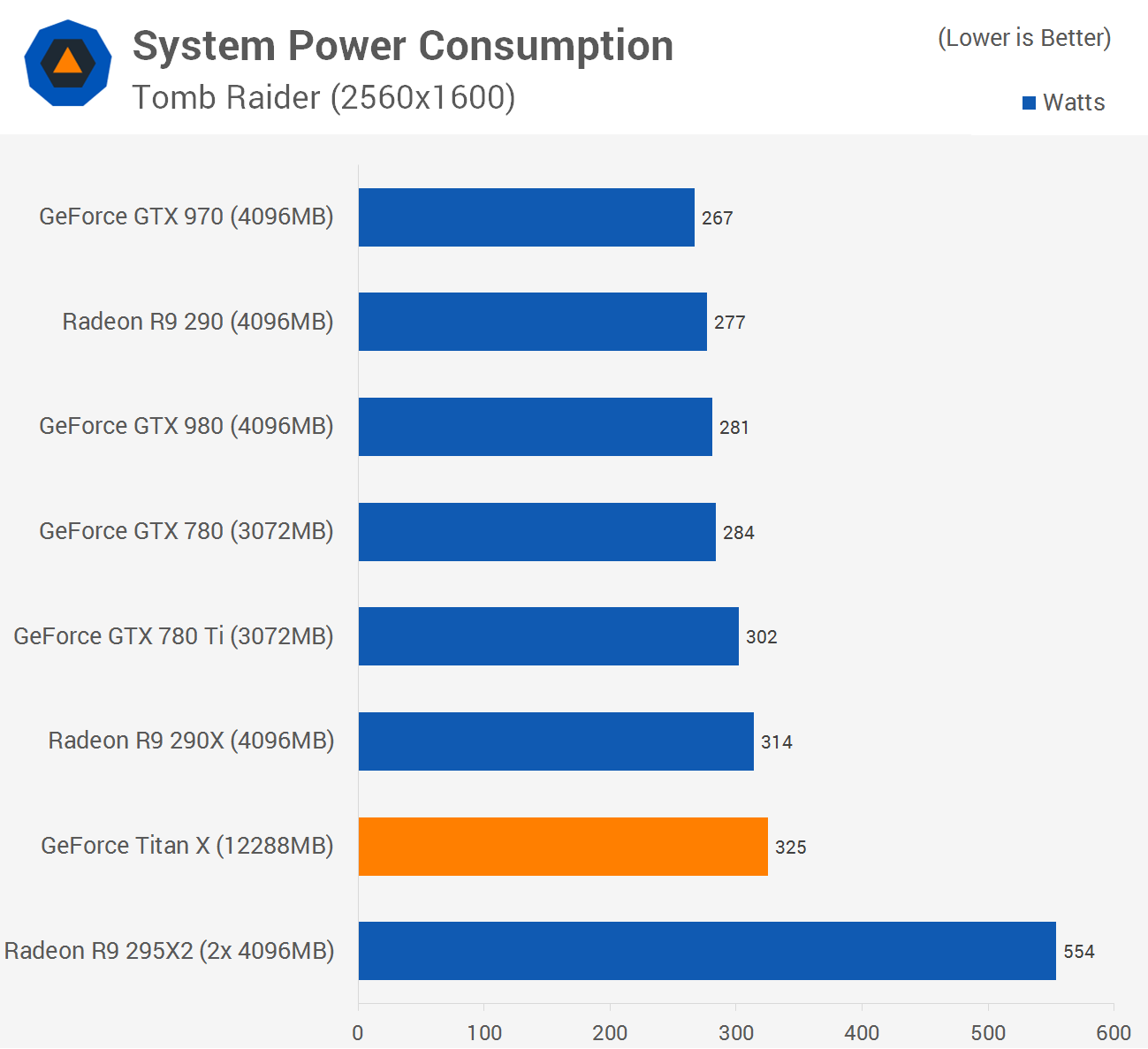

When testing Tomb Raider we found that the GTX Titan X was 67% faster than the R9 290X, yet it consumed just 4% more power. Additionally, the Titan X used 16% more power than the GTX 980 while delivering 41% more frames, and compared to the R9 295X2 it pulled 41% less power.
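Those Tomb Raider numbers translate into a relative performance-per-watt figure with a single division. A minimal sketch follows, assuming the frame rate and power percentages were gathered under comparable conditions. `relative_efficiency` is an illustrative helper name, and the result depends on whether power was measured at the wall or at the card, which we do not model here.

```python
def relative_efficiency(perf_gain_pct: float, power_gain_pct: float) -> float:
    """Performance-per-watt of card A relative to card B.

    Both arguments are A's advantage over B in percent
    (negative values mean A is slower or draws less power).
    """
    return (1 + perf_gain_pct / 100.0) / (1 + power_gain_pct / 100.0)


# Titan X vs GTX 980: 41% more frames for 16% more power.
vs_gtx_980 = relative_efficiency(41, 16)

# Titan X vs R9 290X: 67% more frames for 4% more power.
vs_r9_290x = relative_efficiency(67, 4)

print(f"vs GTX 980: {vs_gtx_980:.2f}x the frames per watt")
print(f"vs R9 290X: {vs_r9_290x:.2f}x the frames per watt")
```

On these figures the Titan X delivers roughly 1.2x the frames per watt of the GTX 980 and about 1.6x that of the R9 290X in this one title.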

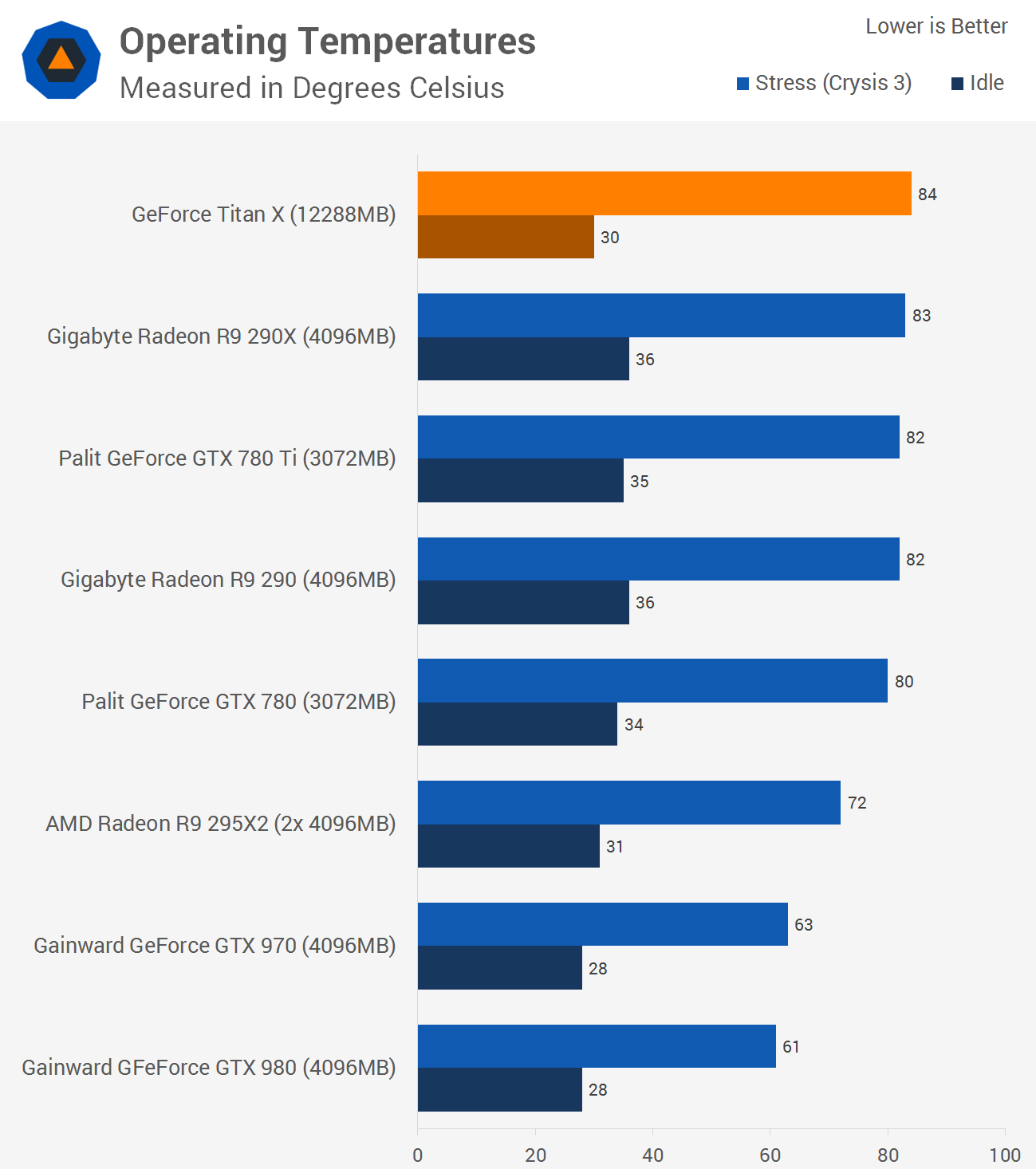

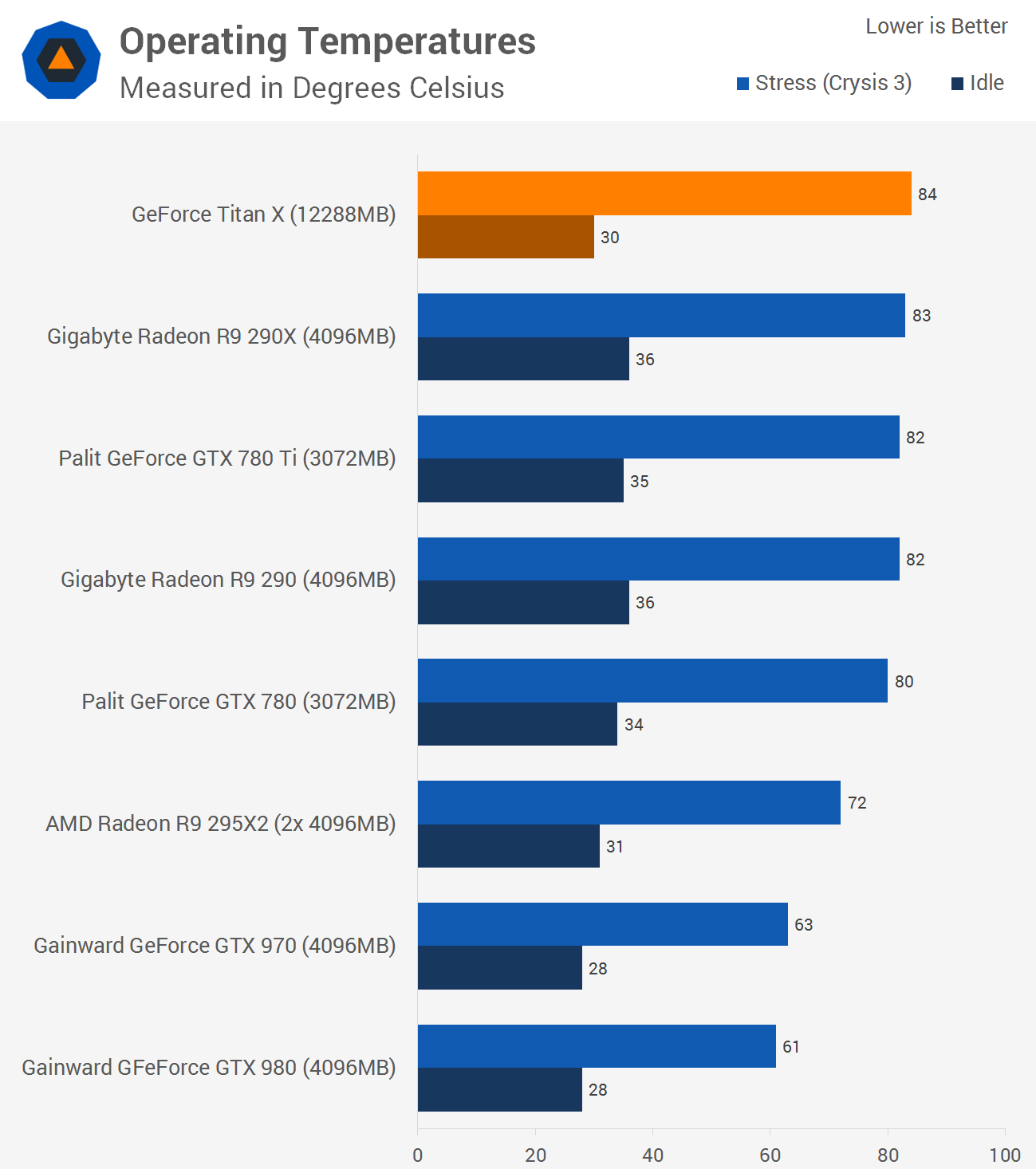

The Titan X cooled down to just 30°C with an ambient room temperature of 21°C, though the card did hit 84°C under load in Crysis 3, slightly hotter than the Gigabyte WindForce 3X R9 290X.

Overclocking Performance

Is It Worth the $999 Asking Price?

Now that the results are in, one thing is clear: the GTX Titan X is bloody fast, though this isn't surprising given its specifications. The GTX 980 was already a beast, so with 50% more cores and memory bandwidth at its disposal, the Titan X was destined to be a solid performer.

Given that the Titan X is clocked ~12% lower than the GTX 980 at stock, we were hoping for around a 40% performance bump and thankfully that's close to reality as the Titan X was 37% faster than the GTX 980 at 2560x1600 and 36% faster at 4K UHD.
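One simple way to frame that expectation is to multiply the core-count advantage by the clock-speed deficit. The sketch below uses only the figures quoted in this conclusion and assumes performance scales linearly with cores times clock, which real games rarely do. `expected_speedup` is an illustrative helper name.

```python
def expected_speedup(core_ratio: float, clock_ratio: float) -> float:
    """Naive throughput estimate: performance scales with cores x clock."""
    return core_ratio * clock_ratio


CORE_RATIO = 1.50          # 50% more cores than the GTX 980
CLOCK_RATIO = 1 - 0.12     # clocked ~12% lower at stock

naive = expected_speedup(CORE_RATIO, CLOCK_RATIO)

# Measured averages from the review.
measured = {"2560x1600": 1.37, "3840x2160": 1.36}

print(f"naive estimate: {(naive - 1) * 100:.0f}% faster")
for resolution, ratio in measured.items():
    print(f"measured at {resolution}: {(ratio - 1) * 100:.0f}% faster")
```

The naive model predicts about 32%, so the measured 36% to 37% is slightly better than core count and base clock alone would suggest.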

The Titan X was also 47% faster than the R9 290X on average at 2560x1600 while it was just 36% faster at 3840x2160.

At 2560x1600 the Titan X's results were relatively competitive with AMD's dual-GPU R9 295X2, mainly due to the latter's poor scaling. In some instances drivers could be to blame, but there were situations where the R9 295X2 was clearly CPU limited.

While the Titan X was just 8% slower than the R9 295X2 at 2560x1600, it was 22% slower at 3840x2160. It's not completely fair to compare the Titan X to a water-cooled dual-GPU card; nonetheless, the top-end Radeon remains a serious contender for hardcore gamers at $700+.

Now, at $999, the Titan X is aiming at the no-compromise gamer. It's ~80% more expensive than the GTX 980 while delivering 40% more performance, so prospective buyers are set to pay a massive premium. Meanwhile, the new Titan is 47% faster than the R9 290X at 2560x1600, but that hardly justifies spending almost three times as much.
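To put the premium in perspective, here is a quick performance-per-dollar comparison built only from the ratios quoted above. It is a sketch: "almost three times as much" is treated as exactly 3x for the R9 290X, which slightly overstates the price gap, and `perf_per_dollar_ratio` is an illustrative helper name.

```python
def perf_per_dollar_ratio(perf_ratio: float, price_ratio: float) -> float:
    """Titan X performance-per-dollar relative to another card (1.0 = equal value)."""
    return perf_ratio / price_ratio


# vs GTX 980: ~40% faster, ~80% more expensive.
vs_gtx_980 = perf_per_dollar_ratio(1.40, 1.80)

# vs R9 290X: 47% faster at 2560x1600, ASSUMED 3x the price ("almost three times").
vs_r9_290x = perf_per_dollar_ratio(1.47, 3.0)

print(f"vs GTX 980: {vs_gtx_980:.2f}x the performance per dollar")
print(f"vs R9 290X: {vs_r9_290x:.2f}x the performance per dollar")
```

In other words, each dollar spent on a Titan X buys roughly 78% of the performance it would buy in a GTX 980, and about half of what it would buy in an R9 290X.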

The R9 295X2 can be had for as little as $700, which is a heck of a deal considering the cooling it comes with. Still, given the headaches that often arise from multi-GPU gaming, we could see someone spending more on what is technically a slower, albeit painless and more straightforward, solution. Meanwhile, AMD's R9 290X is still the best choice for those wanting the best-value flagship.

There's no denying that the Titan X delivers remarkable performance and despite already witnessing first-hand just how efficient Maxwell is, we still thought this card would be more power hungry than it turned out to be. It's nice to be repeatedly impressed on this front.

Ultimately the Titan X delivers. It's not a great value, but if it sells out like the original Titan did then it doesn't need to be.

From TechSpot

Test System Specs

- Intel Core i7-5960X (3.0GHz)

- 4x 4GB Kingston Predator DDR4-2400 (CAS 12-13-13-24)

- Asrock X99 Extreme6 (Intel X99)

- Silverstone Strider Series (700w)

- Crucial MX200 1TB (SATA 6Gb/s)

- Nvidia GeForce Titan X (12288MB)

- Gainward GeForce GTX 980 (4096MB)

- Gainward GeForce GTX 970 (4096MB)

- Palit GeForce GTX 780 Ti (3072MB)

- Palit GeForce GTX 780 (3072MB)

- AMD Radeon R9 295X2 (2x 4096MB)

- Gigabyte Radeon R9 290X (4096MB)

- Gigabyte Radeon R9 290 (4096MB)

- Microsoft Windows 8.1 Pro 64-bit

- Nvidia GeForce 347.52

- AMD Catalyst 14.12 Omega

Version 4.1.0 of the MSI Afterburner overclocking utility didn't let us adjust the voltage of the Titan X and we didn't have time to work out why. Nevertheless, we were able to boost the clock speed by 250MHz, resulting in a base clock of 1252MHz and a boost clock of 1326MHz, which isn't far off the 1.4GHz Nvidia said they reached. The memory didn't seem to have much overclocking headroom so we left it at 1753MHz (7.01Gbps).

Our overclock was able to extract 16% more performance out of the GTX Titan X, taking the average frame rate at 2560x1600 from 82fps to 95fps.

This time when testing with BioShock Infinite our overclock provided just 4% more performance which was disappointing.

Finally, when testing how well our overclock boosted performance in Crysis 3, we found a 10% improvement in frame rate which was enough to overtake the R9 295X2.
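It is worth comparing those gains with the size of the clock increase itself. The sketch below assumes the stock 1000MHz base clock and our 1252MHz overclocked base clock, and expresses each frame rate gain as a fraction of the clock gain. The labels in `perf_gains_pct` are ours, and `pct_change` is an illustrative helper name.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change from before to after."""
    return (after / before - 1) * 100.0


clock_gain_pct = pct_change(1000, 1252)   # stock vs overclocked base clock, MHz
first_test_gain_pct = pct_change(82, 95)  # 82fps to 95fps at 2560x1600

# Frame rate gains quoted in the overclocking results (percent).
perf_gains_pct = {
    "82fps to 95fps test": 16,
    "BioShock Infinite": 4,
    "Crysis 3": 10,
}

print(f"core clock gain: {clock_gain_pct:.1f}%")
print(f"82fps to 95fps is a {first_test_gain_pct:.1f}% gain")
for test, gain in perf_gains_pct.items():
    share = gain / clock_gain_pct
    print(f"{test}: {gain}% faster, {share:.0%} of the clock gain")
```

None of the three tests scales one-to-one with the core clock, which fits with the memory being left at stock speed, though other bottlenecks could also be at play.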