- Feb 28, 2023

MITRE recently released its 2023 test, which emulates the attacks of Turla, a Russian state-level APT group, and examines the detection capabilities of EDR products and the protection capabilities of EPP products. Since MITRE never provides official rankings or comparisons, I've organized and simplified the test results and put them in table form for quick reading.

MITRE does not produce or endorse third-party interpretations of its reports, so I have only consolidated the results from the full individual product reports to make them as easy to read as possible. I will therefore not say that a particular product performs better in a particular area; the tables simply mirror each product's overall performance in the tests.

This year's test was divided into three categories (Carbon, Snake, and Protection). 31 security products took part, but Cisco and Checkpoint withdrew early due to compatibility issues during the test, so the final report reflects the results of 29 products.

For the two detection sections, I followed the methodology of MITRE's simplified official report and split the results into Analytic Coverage and Visibility. Analytic Coverage means that the security software produced technique or tactic detections mapped to the definitions in ATT&CK, or general (but analytically valuable) detections that are not mapped to ATT&CK. Visibility builds on Analytic Coverage and also includes behavioral logs generated by the security software that cannot be used for analysis on their own.
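To make the relationship between the two metrics concrete, here is a minimal sketch of how a list of per-sub-step results could be counted. The category labels (`TECHNIQUE`, `TACTIC`, `GENERAL`, `BEHAVIOR_LOG`, `NONE`) and the function name `summarize` are my own shorthand for this post, not something taken from the official data format.

```python
from enum import Enum


class Detection(Enum):
    """Hypothetical labels for the best result a product got on one sub-step."""
    NONE = "none"                  # nothing recorded
    BEHAVIOR_LOG = "behavior_log"  # raw behavioral log, not usable for analysis on its own
    GENERAL = "general"            # analytically valuable, but not mapped to ATT&CK
    TACTIC = "tactic"              # mapped to an ATT&CK tactic
    TECHNIQUE = "technique"        # mapped to an ATT&CK technique


# Categories that count toward Analytic Coverage.
ANALYTIC = {Detection.GENERAL, Detection.TACTIC, Detection.TECHNIQUE}


def summarize(substeps):
    """Return (analytic_coverage_count, visibility_count) for a list of sub-steps."""
    analytic = sum(1 for d in substeps if d in ANALYTIC)
    # Visibility is Analytic Coverage plus sub-steps that only produced a behavioral log.
    visible = sum(1 for d in substeps if d in ANALYTIC or d is Detection.BEHAVIOR_LOG)
    return analytic, visible


example = [Detection.TECHNIQUE, Detection.BEHAVIOR_LOG, Detection.NONE, Detection.GENERAL]
analytic_count, visibility_count = summarize(example)
```

With this counting, Visibility can never be lower than Analytic Coverage for the same product, which is why the two numbers are shown side by side in the tables.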

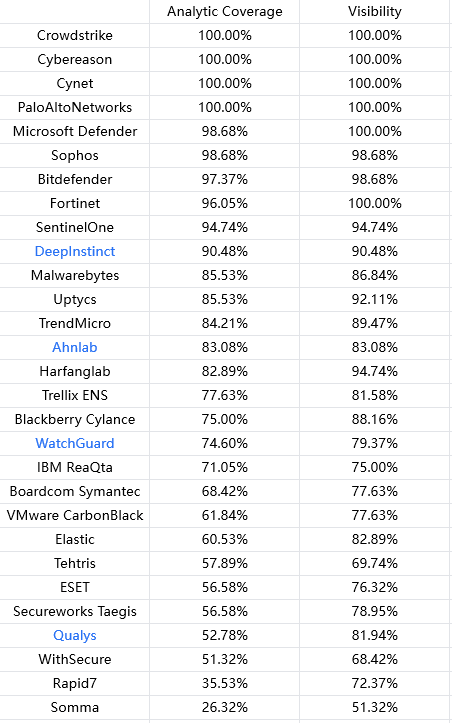

Part I - Carbon (detection)

Scenario:

This scenario follows Turla’s multi-phase approach to implant a watering hole for persistence on a victim’s network as a way to compromise more victims of interest. Turla gains initial access through a spearphishing email: a fake software installer is downloaded onto the victim machine and the EPIC payload is executed. Once persistence and C2 communications are established, a domain controller is discovered and CARBON-DLL is brought into the victim network. Further lateral movement takes the attackers to a Linux Apache server, where PENQUIN is copied to the server and used to install a watering hole.

Result:

Annotation:

Products marked in blue are those whose vendors declared certain sub-steps of the test unsupported (for example, some security software does not support Linux). These unsupported sub-steps are excluded from the overall percentage.
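As a rough illustration of what "excluded from the overall percentage" means, the sketch below drops unsupported sub-steps from both the numerator and the denominator. The function name `coverage_percent` and the `True`/`False`/`None` encoding are assumptions made for this example only.

```python
from typing import Optional, Sequence


def coverage_percent(substeps: Sequence[Optional[bool]]) -> Optional[float]:
    """Percentage of detected sub-steps, ignoring unsupported ones.

    Hypothetical encoding: True = detected, False = missed,
    None = sub-step declared unsupported by the vendor.
    """
    supported = [s for s in substeps if s is not None]
    if not supported:
        return None  # every sub-step was unsupported, so no percentage exists
    return 100.0 * sum(supported) / len(supported)


# One detected, one missed, two unsupported: the percentage is based on 2 sub-steps, not 4.
result = coverage_percent([True, False, None, None])  # 50.0
```

This is why a product with unsupported sub-steps can show the same percentage as one that was tested on every sub-step: the two figures are computed over different totals.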

Part II - Snake (detection)

Scenario:

This scenario continues Turla’s multi-phased intelligence collection campaign, with the attackers establishing a typo-squatting website to target entities with high-value information. Turla targets the victim with a drive-by compromise, an Adobe Flash installer bundled with EPIC, which installs on the victim’s network. EPIC communicates with the C2 server via a proxy web server using HTTPS requests, persists via process injection, and performs enumeration on the victim’s workstation. SNAKE is then deployed to maintain a foothold, elevate privileges, and communicate with the C2 via HTTP/SMTP/DNS. Finally, the attackers move laterally to install LightNeuron, enabling Turla to collect and exfiltrate sensitive communications to further mission objectives.

Result:

Annotation:

Products marked in blue are those whose vendors declared certain sub-steps of the test unsupported (for example, some security software does not support Linux). These unsupported sub-steps are excluded from the overall percentage.

Part III - Protection

Scenario:

Protections reflect the spirit of an assumed breach and defense in depth, exploring a range of ATT&CK behaviors and the prevention/remediation procedures that address them. When an adversary activity is blocked, Protections enable product owners to explore the next activity. We explore this by developing a clean testing range, executing the adversary emulation plan that is broken down into separate test scenarios, and conducting detection analysis to determine when and if the variant would fail.

Result:

Annotation:

A blue N/A means that the product does not support protection on Linux systems (it may have scanning capability but no real-time protection, so there was no point in continuing the test). Six products did not participate in the protection test (they may be pure EDR products, or the vendor may not have wanted to participate), and all of their sub-tests are recorded as N/A. The number after √ is the sub-step at which the attack was intercepted. An earlier interception does not necessarily mean a stronger product, but it does indicate whether the product leans more toward binary-based or behavior-based interception.

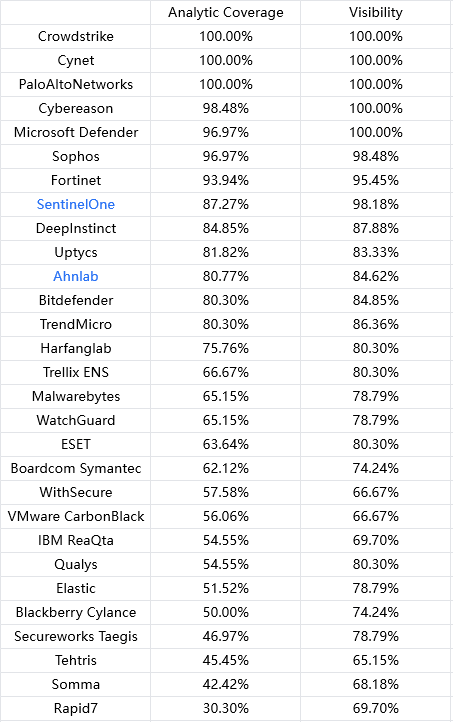
