- Apr 25, 2013

When we search Google’s web index, we are searching only around 10 percent of the half-a-trillion or so pages that are potentially available. Much of the content in the larger deep web (not to be confused with the dark web) is buried further down in the sites that make up the visible surface web. The indexes of competitors like Yahoo and Bing (around 15 billion pages each) are only a fraction of the size of Google’s. To close this gap, Microsoft has recently pioneered the use of Field-Programmable Gate Arrays (FPGAs) to make ranking the results of massive web crawls faster and more efficient.

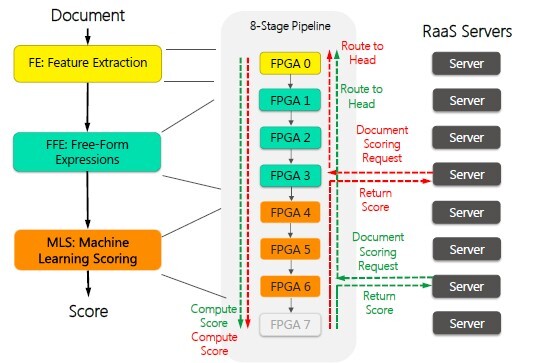

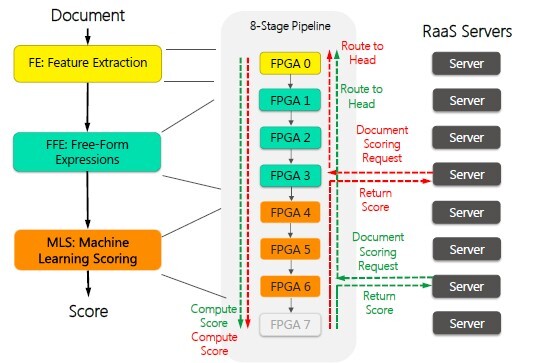

Google’s engineers have previously estimated that a typical 0.2-second web query involves indexing and retrieval work equivalent to about 0.0003 kWh of energy per search. With over 100 billion searches a month hitting a petabyte-scale index, well-executed page ranking has become a formidable proposition. Microsoft’s approach with Bing has been to break the ranking portion of search into three parts: feature extraction, free-form expressions, and machine learning scoring.
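
To put those two figures together, here is a back-of-the-envelope calculation. It is only a rough sketch: it assumes the per-query energy estimate applies uniformly to the entire monthly query volume, and since the volume is "over 100 billion," the result is best read as a lower bound.

```python
# Back-of-the-envelope check using the two figures quoted above.
ENERGY_PER_SEARCH_KWH = 0.0003   # Google's published per-query estimate
SEARCHES_PER_MONTH = 100e9       # "over 100 billion", so this is a lower bound


def monthly_energy_kwh(per_search_kwh: float, searches: float) -> float:
    """Total energy for a month of queries, assuming a flat per-query cost."""
    return per_search_kwh * searches


total_kwh = monthly_energy_kwh(ENERGY_PER_SEARCH_KWH, SEARCHES_PER_MONTH)
print(f"{total_kwh:,.0f} kWh per month (about {total_kwh / 1e6:.0f} GWh)")
# prints: 30,000,000 kWh per month (about 30 GWh)
```

Roughly 30 GWh a month is why even modest per-query efficiency gains in ranking hardware matter at this scale.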

Bing’s document selection service, which retrieves and filters the documents containing the search terms, still runs on Xeon processors. Its ranking services, which score the filtered documents by their relevance to the query, have recently been ported to an FPGA-based system Microsoft calls Project Catapult. Microsoft could likely afford custom ASICs (application-specific integrated circuits) to accelerate Bing’s ranking functions, but given how quickly the ranking algorithms now change, it probably can’t afford not to use reprogrammable FPGA hardware instead.
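
The division of labor described above can be sketched as a toy pipeline: a selection pass that filters candidate documents, followed by the three ranking stages (feature extraction, free-form expressions, machine learning scoring). Everything specific here is hypothetical: the feature names, the formulas, and the weights are invented for illustration, and a simple linear model stands in for Bing’s actual machine-learned scorer. In Bing’s design, the ranking stages are the part that moved to FPGAs.

```python
import math
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


def select(query_terms, corpus):
    """Selection (CPU side in Bing's split): keep documents containing every query term."""
    selected = []
    for doc in corpus:
        words = set(doc.text.lower().split())
        if all(term in words for term in query_terms):
            selected.append(doc)
    return selected


def extract_features(query_terms, doc):
    """Ranking stage 1: raw per-document counts (hypothetical features)."""
    words = doc.text.lower().split()
    return {
        "hits": sum(words.count(t) for t in query_terms),
        "length": len(words),
        # Selection guarantees every term is present, so index() cannot fail here.
        "first_pos": min(words.index(t) for t in query_terms),
    }


def free_form_expressions(f):
    """Ranking stage 2: arithmetic combinations of raw features (hypothetical formulas)."""
    return {
        "density": f["hits"] / f["length"],
        "log_length": math.log1p(f["length"]),
        "earliness": 1.0 / (1 + f["first_pos"]),
    }


# Made-up weights; a real system would learn its model from labeled data.
WEIGHTS = {"density": 2.0, "log_length": -0.1, "earliness": 1.0}


def score(expressions):
    """Ranking stage 3: a linear model standing in for a machine-learned scorer."""
    return sum(WEIGHTS[name] * value for name, value in expressions.items())


def rank(query, corpus):
    """Select candidates, then run the three ranking stages and sort by score."""
    terms = query.lower().split()
    if not terms:
        return []
    candidates = select(terms, corpus)
    scored = [
        (score(free_form_expressions(extract_features(terms, d))), d.doc_id)
        for d in candidates
    ]
    return [doc_id for _, doc_id in sorted(scored, reverse=True)]


corpus = [
    Document("a", "FPGA ranking for web search"),
    Document("b", "search engines rank pages using many signals and FPGA"),
    Document("c", "GPU training"),
]
print(rank("fpga search", corpus))  # ['a', 'b']
```

The selection step is cheap and branchy, which suits a general-purpose CPU; the ranking stages apply the same fixed arithmetic to every candidate, which is the kind of work that maps well onto dedicated hardware.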

Traditionally, FPGAs have been the go-to device for very specific computing needs. Because their internal structure can easily be reconfigured, they’re frequently used to prototype processors. They’re also handy for applications that need a large number of input or output connections on a chip. But the other place they show up, almost regardless of cost, is wherever absolute speed is essential. For example, if your device needs to calculate the total energy of all the hits on a massive satellite-based cosmic-ray scintillator array, decide which hits are real, and do it all in a few nanoseconds, software simply isn’t up to the job.
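
To see why hardware wins in that detector example, consider how the "total energy" step maps onto an FPGA: every channel can be thresholded at the same moment, and the sum can be computed by a tree of adders whose depth grows only with log2 of the number of channels. The Python below merely models that structure sequentially. The fixed-threshold rule for "real" hits is a hypothetical simplification, and real trigger logic would be written in a hardware description language, not Python.

```python
def threshold_hits(energies, threshold):
    """Zero out hits below a noise threshold: a crude, hypothetical stand-in for
    deciding which hits are real. In hardware, every channel is compared at once."""
    return [e if e >= threshold else 0 for e in energies]


def adder_tree(values):
    """Sum values pairwise, level by level, as a hardware adder tree would.

    Returns (total, depth), where depth is the number of adder levels. Each level's
    additions are independent, so on an FPGA they all happen in the same clock cycle.
    """
    level = list(values)
    depth = 0
    while len(level) > 1:
        if len(level) % 2:
            level.append(0)  # pad an odd-sized level with a zero input
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
        depth += 1
    return (level[0] if level else 0), depth


hits = [5, 1, 12, 0, 7, 2, 9, 30]  # made-up energies for 8 detector channels
total, depth = adder_tree(threshold_hits(hits, threshold=5))
print(total, depth)  # 63 3
```

With 1,024 channels the tree is only 10 adder levels deep, so the whole sum can finish in a handful of clock cycles, whereas a processor looping over the channels would need on the order of a thousand sequential additions.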

Project Catapult was originally based on a 2011 PCI Express card design that linked six Xilinx FPGAs with a controller. Integrating the new devices into Microsoft’s existing servers, however, required several redesigns to stay within strict limits on how much power the cards could draw and how much heat they could dissipate. The latest design uses a Stratix V GS D5 FPGA from Altera. For the hardcore FPGA crowd, this device has 1,590 digital signal processing (DSP) blocks, 2,014 M20K memory blocks, and thirty-six 14.1 Gb/s transceivers. As the Bing team announced last June at ISCA 2014, this platform enabled ranking with roughly half the number of servers used before.

The term Microsoft is now using is “convolution neural network accelerator.” Convolution is commonly used in signal-processing applications like computer vision and speech recognition, or anywhere spatial averaging or cross-correlation would be of service. In computer vision, for example, 2D convolution can adjust each pixel using information from its immediate neighbors, producing filtering effects such as blurring or edge detection. Convolutional neural networks (CNNs) are composed of small assemblies of artificial neurons, each of which focuses on just a small part of an image, its receptive field. CNNs have already bested humans at classifying objects in challenges like the 1,000-category ImageNet competition. Classifying documents for ranking is a similar problem, and it is now one of many that Microsoft hopes to address with CNNs.
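
The pixel-neighborhood idea above can be made concrete with a minimal 2D convolution in plain Python. This is an illustrative sketch, not how Microsoft’s accelerator computes it: it uses a hand-picked 3x3 box-blur kernel and computes only "valid" outputs (no padding at the borders). In a CNN, the kernel weights are learned rather than chosen by hand, and the kernel window is the neuron’s receptive field.

```python
def convolve2d(image, kernel):
    """'Valid' 2D convolution: each output pixel is a weighted sum of a neighborhood.

    The kernel is flipped, as true convolution requires. Deep-learning libraries
    usually skip the flip (cross-correlation); with learned weights it makes no
    practical difference, and for symmetric kernels the results are identical.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[y + i][x + j] * kernel[kh - 1 - i][kw - 1 - j]
            row.append(acc)
        out.append(row)
    return out


BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]  # each output = mean of a 3x3 neighborhood

image = [
    [0, 0, 0, 0],
    [0, 9, 9, 0],
    [0, 9, 9, 0],
    [0, 0, 0, 0],
]
# A 4x4 input yields a 2x2 output; every 3x3 window holds four 9s and five 0s,
# so each output pixel is 36 / 9 = 4.
blurred = convolve2d(image, BOX_BLUR)
```

Every output pixel is computed independently from the same small kernel, which is exactly the kind of massively parallel multiply-accumulate work that DSP-rich FPGAs are built to do.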

Microsoft’s engineers are now looking to adopt Altera’s new Arria 10 FPGA. This chip is optimized for the kinds of floating-point-intensive operations that were traditionally the province of DSPs. Because it can run at teraflop speeds with three times the energy efficiency of a comparable GPU, Microsoft hopes it will help the company make significant gains in the search-and-rank business.