Global Accessibility Awareness Day is Thursday, so Apple took to its newsroom blog this week to announce several major new accessibility features headed to the iPhone, Apple Watch, iPad, and Mac.

One of the most widely used will likely be Live Captions, which is coming to iPhone, iPad, and Mac. The feature shows AI-driven, live-updating subtitles for speech coming from any audio source on the device, whether the user is "on a phone or FaceTime call, using a video conferencing or social media app, streaming media content, or having a conversation with someone next to them."

The text (which users can resize at will) appears at the top of the screen and ticks along as the subject speaks. Additionally, Mac users will be able to type responses and have them read aloud to others on the call. Live Captions will enter public beta on supported devices ("iPhone 11 and later, iPad models with A12 Bionic and later, and Macs with Apple silicon") later this year.

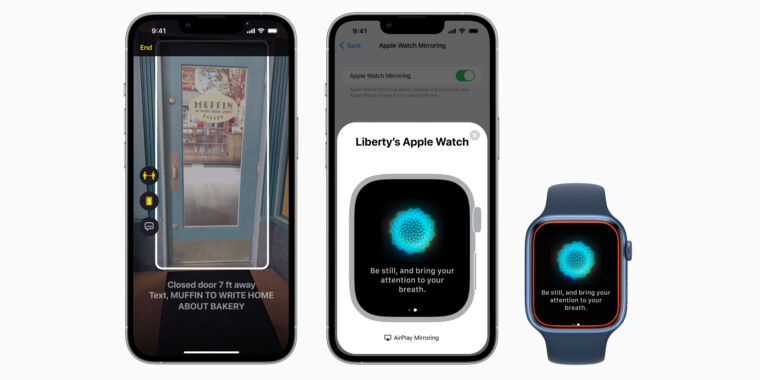

There's also door detection. Unfortunately, it will work only on iPhones and iPads with a lidar sensor (so the iPhone 12 Pro, iPhone 13 Pro, or recent iPad Pro models), but it sounds useful for those who are blind or have low vision. It uses the device's camera and AR sensors, in tandem with machine learning, to identify doors and audibly tell users where a door is located, whether it's open or closed, how it can be opened, and what writing or labeling it might have.

Door detection will join people detection and image descriptions in a new "detection mode" intended for blind or low-vision users in iOS and iPadOS. Apple's blog post didn't say when that feature would launch, however.

Apple details new iPhone features like door detection, live captions

The announcements were made to celebrate Global Accessibility Awareness Day.