- Nov 10, 2017

- 3,250

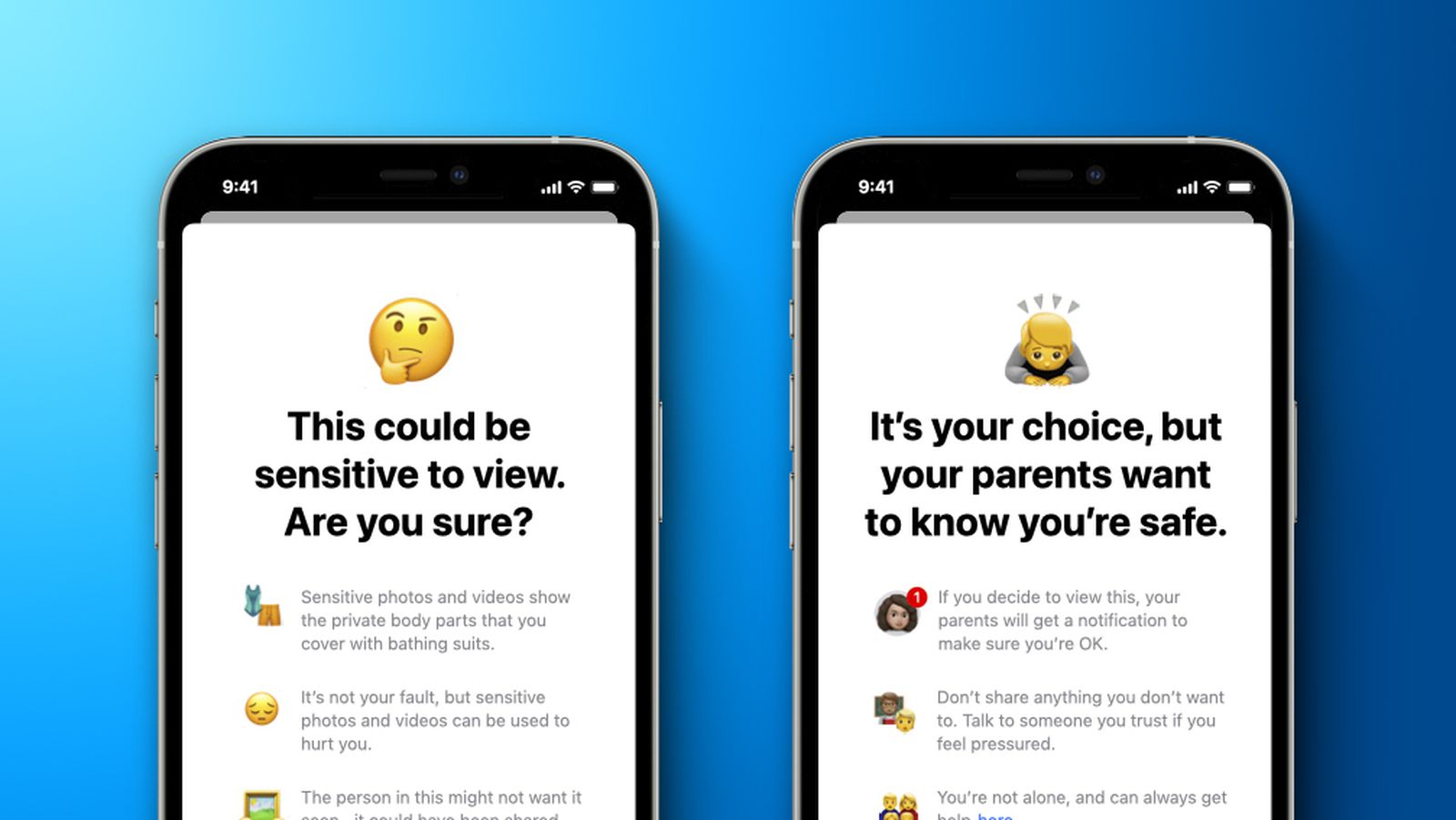

A security expert claims that Apple is about to announce photo identification tools that would identify child abuse images in iOS photo libraries.

Apple has previously removed individual apps from the App Store over child pornography concerns, but it is now said to be about to introduce such detection system-wide. Using photo hashing, iPhones could identify Child Sexual Abuse Material (CSAM) on device.

Apple has not confirmed this, and so far the sole source is Matthew Green, a cryptographer and associate professor at the Johns Hopkins Information Security Institute.

The rest

Apple reportedly plans to make iOS detect child abuse photos | AppleInsider

