- Mar 29, 2018

Apple has announced impending changes to its operating systems that include new “protections for children” features in iCloud and iMessage. If you’ve spent any time following the Crypto Wars, you know what this means: Apple is planning to build a backdoor into its data storage system and its messaging system. Child exploitation is a serious problem, and Apple isn't the first tech company to bend its privacy-protective stance in an attempt to combat it. But that choice will come at a high price for overall user privacy. Apple can explain at length how its technical implementation will preserve privacy and security in its proposed backdoor, but at the end of the day, even a thoroughly documented, carefully thought-out, and narrowly-scoped backdoor is still a backdoor.

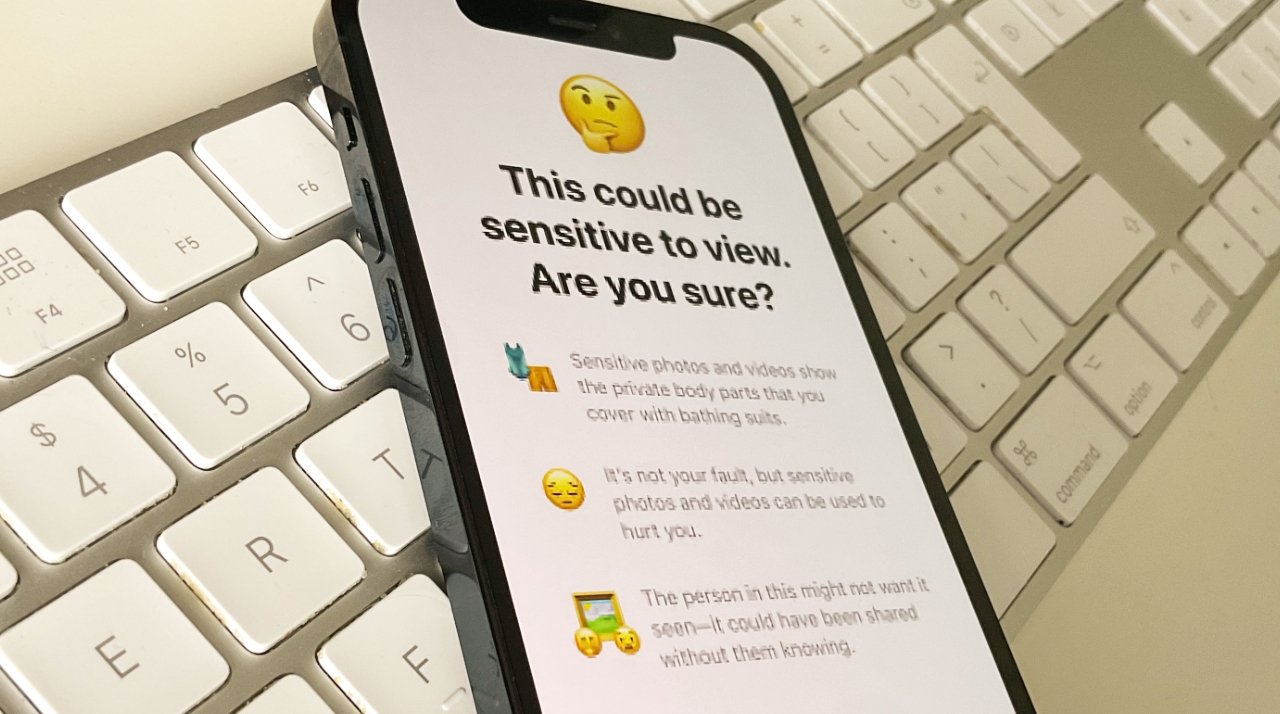

To say that we are disappointed by Apple’s plans is an understatement. Apple has historically been a champion of end-to-end encryption, for all of the same reasons that EFF has articulated time and time again. Apple’s compromise on end-to-end encryption may appease government agencies in the U.S. and abroad, but it is a shocking about-face for users who have relied on the company’s leadership in privacy and security.

There are two main features that the company is planning to install in every Apple device. One is a scanning feature that will scan all photos as they get uploaded into iCloud Photos to see if they match a photo in the database of known child sexual abuse material (CSAM) maintained by the National Center for Missing & Exploited Children (NCMEC). The other feature scans all iMessage images sent or received by child accounts (that is, accounts designated as owned by a minor) for sexually explicit material, and if the child is young enough, notifies the parent when these images are sent or received. This feature can be turned on or off by parents.

When Apple releases these “client-side scanning” functionalities, users of iCloud Photos, child users of iMessage, and anyone who talks to a minor through iMessage will have to carefully consider their privacy and security priorities in light of the changes, and may no longer be able to safely use what has been, until this development, one of the preeminent encrypted messengers.

Apple Is Opening the Door to Broader Abuses

We’ve said it before, and we’ll say it again now: it’s impossible to build a client-side scanning system that can only be used for sexually explicit images sent or received by children. As a consequence, even a well-intentioned effort to build such a system will break key promises of the messenger’s encryption itself and open the door to broader abuses.

All it would take to widen the narrow backdoor that Apple is building is an expansion of the machine learning parameters to look for additional types of content, or a tweak of the configuration flags to scan not just children’s accounts, but anyone’s. That’s not a slippery slope; that’s a fully built system just waiting for external pressure to make the slightest change. Take the example of India, where recently passed rules include dangerous requirements for platforms to identify the origins of messages and pre-screen content. New laws in Ethiopia requiring content takedowns of “misinformation” in 24 hours may apply to messaging services. And many other countries, often those with authoritarian governments, have passed similar laws. Apple’s changes would enable such screening, takedown, and reporting in its end-to-end messaging. The abuse cases are easy to imagine: governments that outlaw homosexuality might require the classifier to be trained to restrict apparent LGBTQ+ content, or an authoritarian regime might demand the classifier be able to spot popular satirical images or protest flyers.
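To make the point about configuration flags concrete, here is a minimal sketch in Python. It is not Apple's code and does not reflect any real API: `ScanPolicy`, `is_scanned`, and the category names are all hypothetical, invented only to show how small the difference between a narrow scanner and a broad one can be once the scanning machinery exists.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ScanPolicy:
    """Hypothetical policy object; every field name here is an assumption."""
    scan_child_accounts_only: bool = True
    categories: frozenset = frozenset({"sexually_explicit"})


def is_scanned(policy: ScanPolicy, account_is_child: bool, predicted_category: str) -> bool:
    """Return True if an image with this predicted category would be flagged."""
    in_scope = account_is_child or not policy.scan_child_accounts_only
    return in_scope and predicted_category in policy.categories


# The narrow policy as announced: child accounts, one content category.
narrow = ScanPolicy()

# The widened policy: one flag flipped and one category added.
widened = replace(
    narrow,
    scan_child_accounts_only=False,
    categories=narrow.categories | {"protest_flyer"},
)

print(is_scanned(narrow, account_is_child=False, predicted_category="protest_flyer"))
print(is_scanned(widened, account_is_child=False, predicted_category="protest_flyer"))
```

In this toy model the scanning logic in `is_scanned` never changes; only the policy values do, which is the sense in which the system is already fully built.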

We’ve already seen this mission creep in action. One of the technologies originally built to scan and hash child sexual abuse imagery has been repurposed to create a database of “terrorist” content that companies can contribute to and access for the purpose of banning such content. The database, managed by the Global Internet Forum to Counter Terrorism (GIFCT), is troublingly without external oversight, despite calls from civil society. While it’s therefore impossible to know whether the database has overreached, we do know that platforms regularly flag critical content as “terrorism,” including documentation of violence and repression, counterspeech, art, and satire.

Image Scanning on iCloud Photos: A Decrease in Privacy

Apple’s plan for scanning photos that get uploaded into iCloud Photos is similar in some ways to Microsoft’s PhotoDNA. The main product difference is that Apple’s scanning will happen on-device. The (unauditable) database of processed CSAM images will be distributed in the operating system (OS), the processed images transformed so that users cannot see what the image is, and matching done on those transformed images using private set intersection where the device will not know whether a match has been found. This means that when the features are rolled out, a version of the NCMEC CSAM database will be installed on every single iPhone. The result of the matching will be sent up to Apple, but Apple can only tell that matches were found once the number of matching photos reaches a preset threshold.

Once that threshold is reached, the photos in question will be sent to human reviewers within Apple, who determine whether the photos are in fact part of the CSAM database. If confirmed by the human reviewer, those photos will be sent to NCMEC, and the user’s account disabled. Again, the bottom line here is that whatever privacy and security aspects are in the technical details, all photos uploaded to iCloud will be scanned.

Make no mistake: this is a decrease in privacy for all iCloud Photos users, not an improvement. Currently, although Apple holds the keys to view photos stored in iCloud Photos, it does not scan these images. Civil liberties organizations have asked the company to remove its ability to do so. But Apple is choosing the opposite approach and giving itself more knowledge of users’ content.
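The threshold step in the flow described above can be sketched in a few lines of Python. This is a simplified illustration, not Apple's implementation: it uses a plain SHA-256 lookup where the real design uses transformed image hashes and private set intersection, and the names `image_digest`, `count_matches`, and `should_escalate` are hypothetical.

```python
import hashlib


def image_digest(image_bytes: bytes) -> str:
    """Stand-in for the real image hash (assumption: plain SHA-256 for illustration)."""
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(uploads, known_digests) -> int:
    """Count uploaded images whose digest appears in the known database."""
    return sum(1 for image in uploads if image_digest(image) in known_digests)


def should_escalate(uploads, known_digests, threshold: int) -> bool:
    """True once the number of matching uploads reaches the preset threshold."""
    return count_matches(uploads, known_digests) >= threshold


# Toy data: two "known" images and three uploads, two of which match.
known = {image_digest(b"known-image-1"), image_digest(b"known-image-2")}
uploads = [b"holiday-photo", b"known-image-1", b"known-image-2"]

print(should_escalate(uploads, known, threshold=2))  # True
print(should_escalate(uploads, known, threshold=3))  # False
```

Note that every upload passes through `image_digest` regardless of the threshold. The threshold only controls when a result becomes visible for review, not which photos get scanned.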

Whatever Apple Calls It, It’s No Longer Secure Messaging

As a reminder, a secure messaging system is a system where no one but the user and their intended recipients can read the messages or otherwise analyze their contents to infer what they are talking about. Despite messages passing through a server, an end-to-end encrypted message will not allow the server to know the contents of a message. When that same server has a channel for revealing information about the contents of a significant portion of messages, that’s not end-to-end encryption. In this case, while Apple will never see the images sent or received by the user, it has still created the classifier that scans the images and triggers the notifications to the parent. Therefore, it would now be possible for Apple to add new training data to the classifier sent to users’ devices or send notifications to a wider audience, easily censoring and chilling speech.

But even without such expansions, this system will give parents who do not have the best interests of their children in mind one more way to monitor and control them, limiting the internet’s potential for expanding the world of those whose lives would otherwise be restricted. And because family sharing plans may be organized by abusive partners, it's not a stretch to imagine using this feature as a form of stalkerware.

People have the right to communicate privately without backdoors or censorship, including when those people are minors. Apple should make the right decision: keep these backdoors off of users’ devices.

Apple’s Plan to “Rethink” Message Encryption Opens a Backdoor into Your Private Lives
