Brian Kemp is bad on cybersecurity

Errata Security: Brian Kemp is bad on cybersecurity

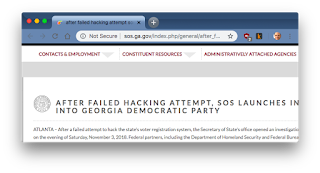

I'd prefer a Republican governor, but as a cybersecurity expert, I have to point out how bad Brian Kemp (candidate for Georgia governor) is on cybersecurity. When notified about vulnerabilities in election systems, his response has been to shoot the messenger rather than fix the vulnerabilities. This was the premise behind the cybercrime bill earlier this year that was ultimately vetoed by the current governor after vocal opposition from cybersecurity companies. More recently, he just announced that he's investigating the Georgia State Democratic Party for a "failed hacking attempt".

According to news stories, state elections websites are full of common vulnerabilities of the kind documented in the OWASP Top 10, such as "direct object references" that would allow any election registration information to be read or changed, for example letting a hacker cancel the registrations of voters from the other party.
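For readers unfamiliar with the term, here is a minimal sketch of what an insecure direct object reference looks like. It is not taken from any Georgia system: the `Registration` record, the `REGISTRATIONS` table, and both functions are hypothetical names invented for illustration. The vulnerable version trusts whatever record ID arrives in the request, while the checked version confirms that the logged-in user owns the record.

```python
from dataclasses import dataclass


@dataclass
class Registration:
    record_id: int
    owner: str          # account that owns this record
    active: bool = True


# Hypothetical in-memory stand-in for a registration database.
REGISTRATIONS = {
    1001: Registration(1001, "alice"),
    1002: Registration(1002, "bob"),
}


def cancel_insecure(record_id: int) -> Registration:
    """Vulnerable: acts on any ID in the request, never asking who sent it."""
    record = REGISTRATIONS[record_id]
    record.active = False
    return record


def cancel_checked(requesting_user: str, record_id: int) -> Registration:
    """Safer: the server verifies ownership before changing anything."""
    record = REGISTRATIONS[record_id]
    if record.owner != requesting_user:
        raise PermissionError("not authorized for this record")
    record.active = False
    return record
```

With the insecure version, anyone who can guess or increment a record ID (1001, 1002, ...) can cancel someone else's registration. The checked version rejects the same request unless the caller owns the record.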

Testing for such weaknesses is not a crime. Indeed, it's desirable that people can test for security weaknesses. Systems that aren't open to test are insecure. This concept is the basis for many policy initiatives at the federal level, to not only protect researchers probing for weaknesses from prosecution, but to even provide bounties encouraging them to do so. The DoD has a "Hack the Pentagon" initiative encouraging exactly this.

But the State of Georgia is stereotypically backwards and thuggish. Earlier this year, the legislature passed SB 315, which criminalized merely attempting to access a computer without permission, even to probe for possible vulnerabilities. To the ignorant and backwards person, this seems reasonable: of course this bad activity should be outlawed. But as we in the cybersecurity community have learned over the last decades, this only outlaws your friends from finding security vulnerabilities, and does nothing to discourage your enemies. Russian election meddling hackers are not deterred by such laws, only Georgia residents concerned about whether their government websites are secure.

It's your own users, and well-meaning security researchers, who are the primary source for improving security. Unless you live under a rock (like Brian Kemp, apparently), you'll have noticed that every month you have your Windows desktop or iPhone nagging you about updating the software to fix security issues. If you look behind the scenes, you'll find that most of these security fixes come from outsiders. They come from technical experts who accidentally come across vulnerabilities. They come from security researchers who specifically look for vulnerabilities.

It's because of this "research" that systems are mostly secure today. A few days ago was the 30th anniversary of the "Morris Worm" that took down the nascent Internet in 1988. The net of that time was hostile to security research, with major companies ignoring vulnerabilities. Systems then were laughably insecure, but vendors tried to address the problem by suppressing research. The Morris Worm exploited several vulnerabilities that were well-known at the time, but ignored by the vendor (in this case, primarily Sun Microsystems).

Since then, with a culture of outsiders disclosing vulnerabilities, vendors have been pressured into fixing them. This has led to vast improvements in security. I'm posting this from a public WiFi hotspot in a bar, for example, because computers are secure enough for this to be safe. 10 years ago, such activity wasn't safe.

The Georgia Democrats obviously have concerns about the integrity of election systems. They have every reason to thoroughly probe an elections website looking for vulnerabilities. This sort of activity should be encouraged, not suppressed as Brian Kemp is doing.

To be fair, the issue isn't so clear. The Democrats aren't necessarily the good guys. They are probably going to lose by a slim margin, and will cry foul, pointing to every election irregularity as evidence they were cheated. It's like how in several races where Republicans lost by slim numbers they claimed criminals and dead people voted, thus calling for voter ID laws. In this case, Democrats are going to point to any potential vulnerability, real or imagined, as disenfranchising their voters. There has already been hyping of potential election systems vulnerabilities out of proportion to their realistic threat for this reason.

But while not necessarily in completely good faith, such behavior isn't criminal. If an election website has vulnerabilities, then the state should encourage the details to be made public -- and fix them.

One of the principles we've learned since the Morris Worm is that of "full disclosure". It's not simply that we want such vulnerabilities found and fixed, we also want the complete details to be made public, even embarrassing details. Among the reasons for this is that it's the only way that everyone can appreciate the consequence of vulnerabilities.

In this case, without having the details, we have only the spin from both sides to go by. One side is spinning the fact that the website was wide open. The other side, as in the above announcement, claims the website was completely secure. Obviously, one side is lying, and the only way for us to know is if the full details of the potential vulnerability are fully disclosed.

By the way, it's common for researchers to falsely believe in the severity of the vulnerability. This is at least as common as the embarrassed side trying to cover it up. It's impossible to say which side is at fault here, whether it's a real vulnerability or false, without full disclosure. Again, the wrong backwards thinking is to believe that details of vulnerabilities should be controlled, to avoid exploitation by bad guys. In fact, they should be disclosed, even if it helps the bad guys.

But regardless of whether these vulnerabilities are real, we do know that criminal investigation and prosecution are the wrong way to deal with the situation. If the election site is secure, then the appropriate response is to document why.

With that said, there's a good chance the Democrats are right and Brian Kemp's office is wrong. On the very page announcing that their websites are secure, Google Chrome flags the site, sos.ga.gov, as not secure in the URL bar, because it doesn't use encryption.
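As a rough illustration of why the browser complains, the sketch below labels a URL the way an address bar roughly does, based only on its scheme. This is a deliberate simplification and an assumption on my part: `address_bar_label` is a made-up helper, and real browsers also weigh certificate validity, mixed content, and other signals. It only inspects the string and makes no network connection.

```python
from urllib.parse import urlsplit


def address_bar_label(url: str) -> str:
    """Simplified rule: only https:// pages get the 'Secure' label."""
    scheme = urlsplit(url).scheme.lower()
    return "Secure" if scheme == "https" else "Not secure"


if __name__ == "__main__":
    # Plain HTTP, as described above, is sent without encryption.
    print(address_bar_label("http://sos.ga.gov/"))
    # example.com is a placeholder host, used only for contrast.
    print(address_bar_label("https://example.com/"))
```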

Using Let's Encrypt to enable encryption on websites is such a standard security practice that we have to ask ourselves what else they are getting wrong. Normally, I'd run scanners against their systems in order to figure this out, but I'm afraid to, because they are jackbooted thugs who'll come after me, instead of honest people who care about what vulnerabilities I might find so they can fix them.

Conclusion

I'm Libertarian, so I'm going to hate a Democrat governor more than a Republican governor. However, I'm also a cybersecurity expert and somebody famous for scanning for vulnerabilities. As a Georgia resident, I'm personally threatened by this backwards thuggish behavior by Brian Kemp. He learned nothing from this year's fight over SB 315, and unlike the clueful outgoing governor who vetoed that bill, Kemp is likely to sign something similar, or worse, into law.

The integrity of election systems is an especially important concern. The only way to guarantee it is to encourage research, meaning the probing by outsiders for vulnerabilities, and to fully disclose the results. Even if Georgia had the most secure systems, embarrassing problems are going to be found. Companies like Intel, Microsoft, and Apple are the leaders in cybersecurity, and even they have had embarrassing vulnerabilities in the last few months. They have responded by paying bounties to the security researchers who found those problems, not by criminally investigating them.