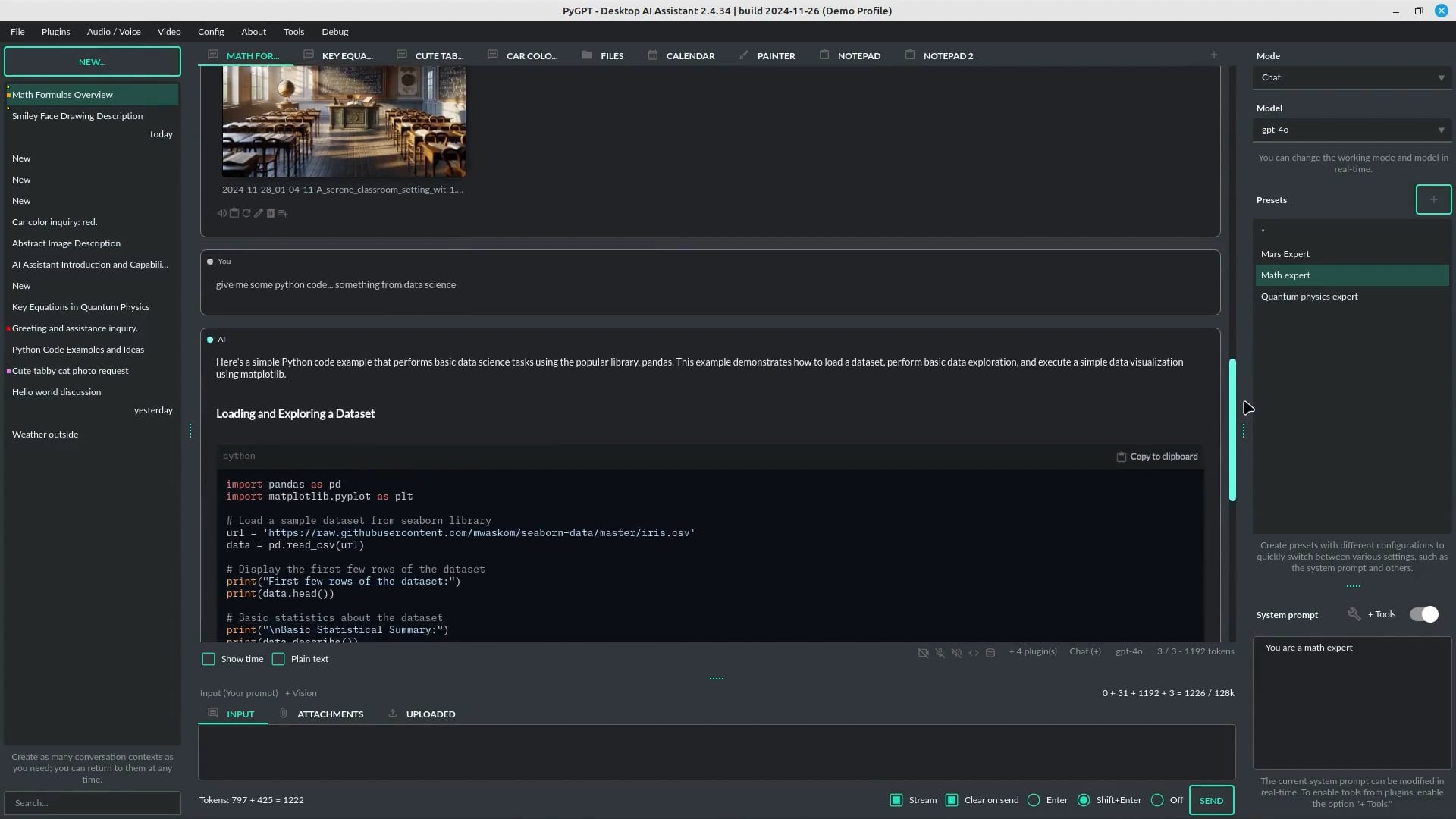

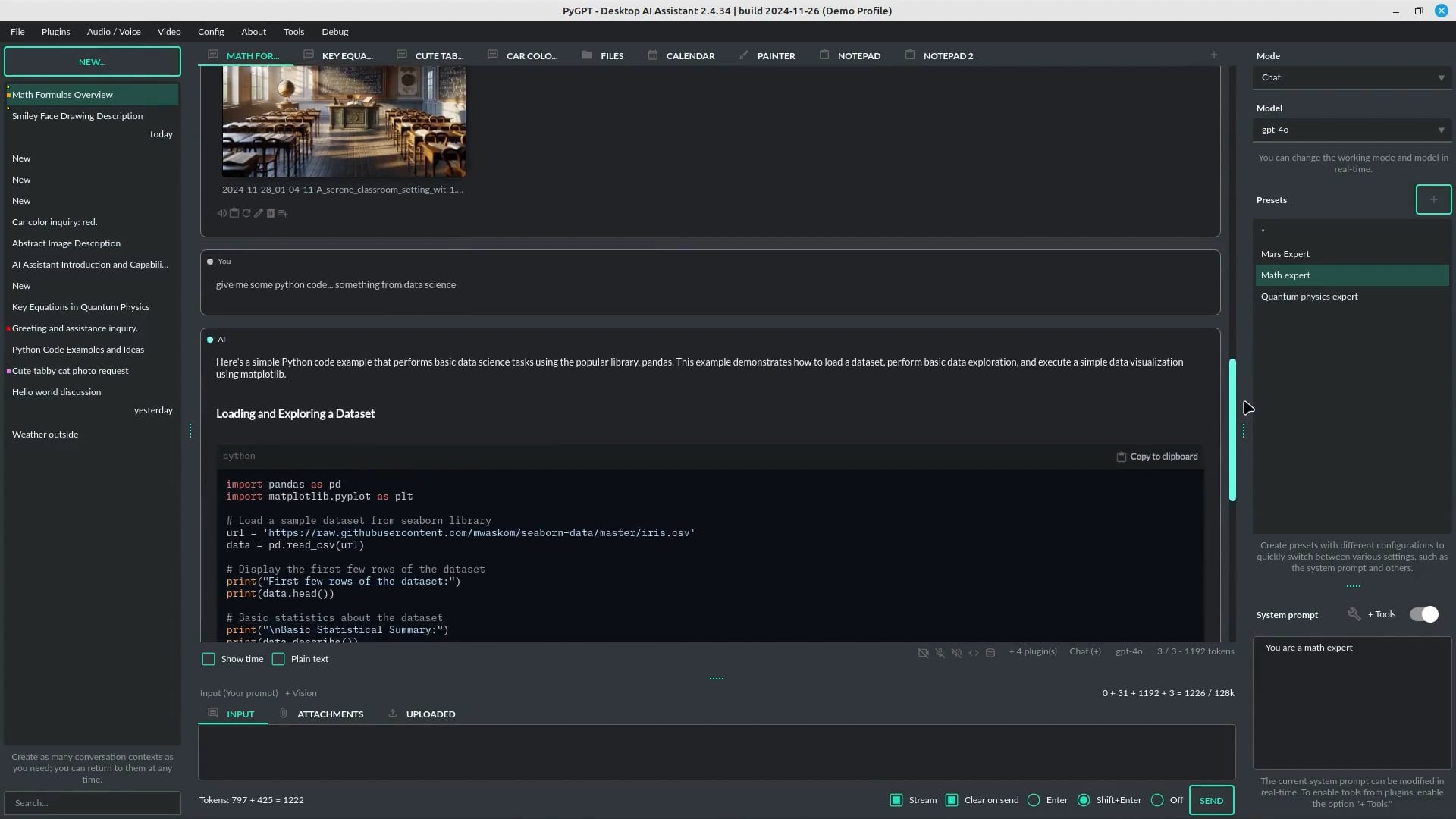

GPT-5 AI Assistant

Open Source, Personal Desktop AI Assistant for Linux, Windows, and Mac with Chat, Vision, Agents, Image generation, Tools and commands, Voice control and more.

12 modes of operation

Multiple modes of operation: Chat for natural conversation, Chat with Files for utilizing local files as additional context, Vision for image and camera-capture analysis, Agents for handling complex and autonomous tasks, Audio for audio-based interactions, Research for in-depth exploration using Perplexity and OpenAI's advanced research models, Computer Use, and more.

pygpt.net

PyGPT – Open‑source Desktop AI Assistant for Windows, macOS, Linux

PyGPT is an open‑source desktop AI assistant for Windows, macOS, and Linux. It offers chat, agents, web search, Python code execution, text-to-speech and speech-to-text (TTS/STT), plugins, and long‑term memory.