This article is a comprehensive state-of-the-art review of malware detection model research.

Malware Detection Issues, Challenges, and Future Directions: A Survey

Abstract:

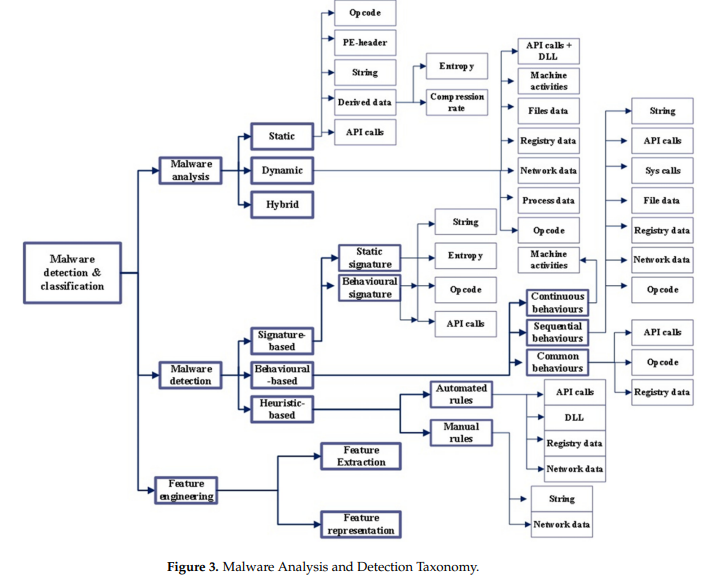

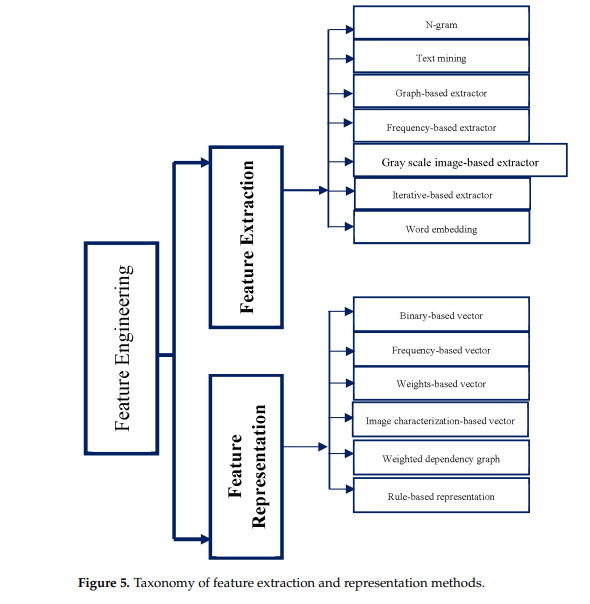

The evolution of malicious software, together with the rising use of digital services, has increased the probability of data corruption, information theft, and other cybercrimes carried out through malware attacks. Malicious software must therefore be detected before it affects a large number of computers. Researchers have recently proposed many malware detection solutions. However, several challenges limit the ability of these solutions to detect many types of malware effectively, especially zero-day attacks. These challenges stem from obfuscation and evasion techniques and from the diversity of malicious behavior caused by the rapid rate at which new malware and malware variants are produced every day. Several review papers have explored the issues and challenges of malware detection from various viewpoints. However, a deep review that associates each analysis and detection approach with the data type is still lacking. Such an association is important for the research community because it helps determine the suitable mitigation approach. In addition, current survey articles stop at a generic taxonomy of detection approaches. Moreover, some review papers classify feature extraction methods as static, dynamic, or hybrid according to the analysis approach used, and neglect the taxonomy of feature representation methods, which is considered essential in developing a malware detection model. This survey bridges the gap by providing a comprehensive state-of-the-art review of malware detection model research. It introduces a feature representation taxonomy in addition to a deeper taxonomy of malware analysis and detection approaches, and links each approach with the most commonly used data types. Feature extraction methods are presented according to the techniques used rather than the analysis approach. The survey ends with a discussion of the challenges and future research directions.

Full article: