As long as the whole test is independently developed, executed, and verified, and the samples were selected independently, I see nothing wrong with it. But because a commissioned test is not scheduled like the usual ones, the vendor who sponsors it is likely to be better prepared for that particular test, so this is the real grain of salt IMO.

Not always the case: a good share of commissioned multi-vendor tests didn't end up in favor of the sponsor. Note that a commissioned test is not scheduled like the usual ones, so the vendor who sponsors it is likely to be better prepared for that particular test. The tester also claims to stay independent during the test (it develops, executes, and verifies the test and collects the samples itself).

Some examples of the sponsor "not always coming out on top":

1. Network Performance Test | Business Security Software | Commissioned by ESET.

Out of 6 vendors, ESET ranked 3rd for "Traffic LAN: Server Client", 1st on "Traffic WAN: Server", and 2nd on "Traffic WAN: Client".

In the second test, ESET ranked 1st for "Total Client-Side Network Load"; in the third test, it ranked 1st (tied with another vendor) for "Size of client-side virus definitions".

In the fourth test, "Machine Load During Update", ESET was 2nd fastest but had the 2nd worst CPU load and the 2nd worst additional RAM usage; one vendor managed to get 1st on all factors.

2. Comparison of Anti-Malware Software for Storage 2016 | Commissioned by Kaspersky Lab.

Out of 5 vendors in total, Kaspersky came out 1st in the file detection test and 3rd for false positives (note that they also compare with the public annual test reports for consumer products, which show the same results).

For throughput impact, it ranked 1st, 2nd, and 3rd on the different platforms (DB, VDA, VDI) [out of 4 vendors in total, because one generated invalid reports]; there are also detailed comparisons broken down by individual factors.

For impact on latency, it got one worst result and no best result.

3.

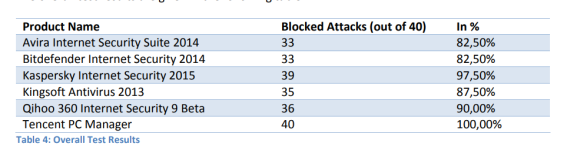

Advanced Endpoint Protection Test | Commissioned by Bitdefender.

Out of 9 vendors in total, Bitdefender ranked 1st on the "Proactive Protection Test" rate but had the 2nd worst false positives.

"PowerShell-based File-less Attacks and File-based Exploits Test": ranked 1st, tied with Kaspersky (you can check the 2019 and 2020 enhanced real-world protection tests, which were not commissioned by any vendor; Kaspersky, ESET, and Bitdefender perform very well there).

"Real-World Protection Test": 1st in protection, tied with Sophos, but with one more false positive (4th worst).

"Ransomware Test": 1st with a 100% rate, though I'm pretty sure it's not as advanced as the PoC test in this forum thread.

My concern about the test in this thread is whether the default settings of the business products really care much about user data in shared folders, so that users need to decide for themselves whether they want shared folders protected. It's not only that one encrypted file = fail (although a single document may well be worth a lot to the company). Also, over the next couple of months, Kaspersky's performance against ransomware might differ, because they probably focused and prepared their best for this particular test only, even though every vendor does its best every day to protect its customers. Preparation started in Dec '20, months ago, and even though the other vendors were notified, the vendor who sponsors this test will likely use as many resources as it can, more than the other vendors, to get the best result.

I don't understand why, but K's performance is better. I'm talking about Windows 11!!! If you have time, please rescan the new F-Secure 18.1.