The test did not take into account the prevalence of clean samples. If I remember correctly, sample prevalence is taken into account in SE Labs tests.

www.av-comparatives.org

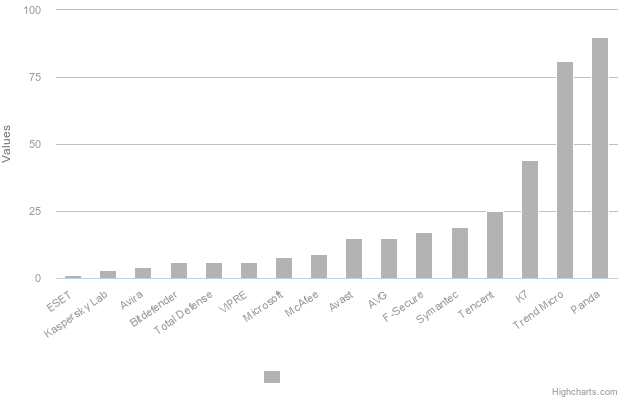

False Alarm Test March 2019

The False Alarm Test March 2019 is an appendix to the File Detection Test March 2019, listing details about the discovered False Alarms.