To briefly weigh in on this and explain how I do it:

For URLs, I use sources that everyone knows. It's not my preferred method, because malicious URLs get detected quickly and go offline very fast... I do it on principle, but I hate it.

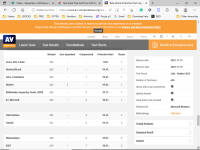

For my packs, I mix things up. I include old malware (like Sality, Virut, etc.) to see whether the lab recognizes it. Then, before adding fresh samples, I run them on a dedicated VM to check that they actually work. Once everything checks out, I include them.

I also plan to set up a personal honeypot, but I don't have the time...

As for YouTube tests, it depends.

On my side, I listen to all feedback to improve my videos. For example, at the end of January I'm going to add offline pack tests, because an antivirus won't react the same way offline as it does online (hello, Microsoft Defender: I'll start with you, before the end of January!)

As for other testers, you should know that I don't watch any of them. Faking a result is easy, especially if the tester is sponsored by a brand...

Whether it's Leo, CS Security, etc., I don't watch any of them.

The only ones I watch are League Of Antivirus (though I think it has stopped) and ZeroTech00 (which I like a lot), and no others.

Especially since I'm the kind of person who believes in what I test myself, not in what I see.

If you have any questions, don't hesitate to ask, of course!