Andy Ful

From Hard_Configurator Tools

The peculiarity of EXE malware testing.

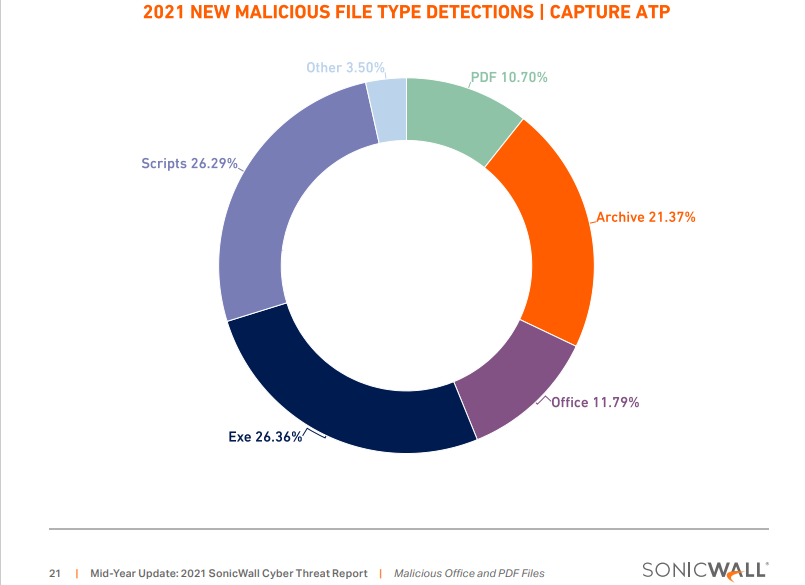

Let's look at the distribution of malware in the wild by file types:

https://static.poder360.com.br/2021/07/relatorio.pdf

We know that archives contain mostly EXE files, documents, and scripts. So, we can conclude that roughly 1/3 of initial malware is EXE files, 1/3 documents (PDF, Office), and 1/3 scripts. Attacks that start with documents or scripts often deliver EXE files at a later infection stage. So, most EXE malware is not initial malware but EXE payloads that appear later in the infection chain.

Let's inspect the protection of two AVs ("A" and "B") that have identical capabilities for detecting unknown EXE files, but "A" also has strong protection against documents and scripts. It is obvious that:

Protection of "A" > Protection of "B"

"A" can detect or block the document or script before the payload is executed. So, in-the-wild attacks can include many 0-day EXE payloads that compromise "B" but are prevented by "A".

After being compromised, "B" quickly creates a signature or behavior detection, while for "A" the payload remains invisible.

If we test AVs "A" and "B" on these payloads the next day, the results will be strange:

"B" will properly detect these payloads and "A" will miss them. The result of the test:

Protection of "A" < Protection of "B"

This false result holds even though "A" and "B" have identical capabilities for detecting EXE files, and even though "A" protects better in the wild.

The error follows from the fact that the AV cloud backend reacts quickly to a payload when the AV has been compromised by it, and much more slowly when it has not.
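The reasoning above can be sketched as a toy model. This is only an illustration, not a measurement: the class `AV`, the functions `wild_protection` and `next_day_payload_score`, and the numbers (a 50% zero-day EXE detection rate, a 1/3 share of EXE-first attacks, perfect document/script blocking by "A", and a signature for every payload that compromised "B") are all assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AV:
    name: str
    exe_zero_day_rate: float     # assumed chance of stopping an unknown EXE
    blocks_initial_stage: bool   # assumed perfect document/script protection


def wild_protection(av: AV, exe_initial_share: float) -> float:
    """Fraction of in-the-wild attacks stopped (toy model)."""
    chained_share = 1.0 - exe_initial_share  # attacks starting with a document or script
    if av.blocks_initial_stage:
        # The chain is cut before the EXE payload is ever executed.
        chained_stopped = chained_share
    else:
        # Only the EXE detection can stop the payload.
        chained_stopped = chained_share * av.exe_zero_day_rate
    return exe_initial_share * av.exe_zero_day_rate + chained_stopped


def next_day_payload_score(av: AV) -> float:
    """Fraction of yesterday's EXE payloads detected in a test run the next day."""
    if av.blocks_initial_stage:
        # The backend never saw the payloads, so they are still unknown to it.
        return av.exe_zero_day_rate
    # Assumption: every payload that got through produced a fast signature,
    # and the payloads that were detected are still detected.
    return 1.0


av_a = AV("A", exe_zero_day_rate=0.5, blocks_initial_stage=True)
av_b = AV("B", exe_zero_day_rate=0.5, blocks_initial_stage=False)

for av in (av_a, av_b):
    print(av.name,
          "wild:", round(wild_protection(av, 1 / 3), 2),
          "next-day test:", round(next_day_payload_score(av), 2))
```

With these assumed numbers, "A" stops about 83% of attacks in the wild against about 50% for "B", yet the next-day payload test scores "A" at 50% and "B" at 100%. The ordering flips, although the EXE detection capability is identical.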

Many people are involved in threat hunting, so after some time most of these EXE payloads that are invisible to "A" are added to its detections (even if they never compromised "A" in the wild).

However, this reasoning does not apply to AVs that use file reputation lookup for EXE files. Such AVs will block almost all EXE samples both in the wild and in tests.

How to minimize this error?

- Using initial attack samples (like in the Real-World tests).

- Using many unknown 0-day samples. The more unknown samples, the smaller the error.

- Using prevalent samples (as in the Malware Protection tests by AV-Comparatives and AV-Test). "A" has a better chance of quickly adding detections for prevalent samples.

https://malwaretips.com/threads/pecuriality-of-exe-malware-testing.112854/post-979714
