The best Home AV protection 2021-2022

- Thread starter Andy Ful

- Start date


- Disclaimer


This test shows how an antivirus behaves with certain threats, in a specific environment and under certain conditions.

We encourage you to compare these results with others and take informed decisions on what security products to use.

Before buying an antivirus you should consider factors such as price, ease of use, compatibility, and support. Installing a free trial version allows an antivirus to be tested in everyday use before purchase.

Andy Ful

From Hard_Configurator Tools

Thread author

Verified

Honorary Member

Top Poster

Developer

Well-known

- Dec 23, 2014

- 8,970

> F-secure?

It did not participate in the AV-Comparatives and SE Labs tests.

- Dec 18, 2015

- 60

> Did not participate in AV-Comparatives and SE Labs tests.

Do you believe that it would be among the first if it had participated?

Andy Ful


> Do you believe that it would be among the first if it had participated?

Yes. It was in the previous comparison:

The best Home AV protection 2019-2020

Real-World tests include fresh web-originated samples. Malware Protection tests include older samples (several days old) usually delivered via USB drives or network drives. Real-World 2019-2020: SE Labs, AV-Comparatives, AV-Test (7659 samples in 24 tests) -------------------Missed samples...

malwaretips.com


- Aug 13, 2012

- 186

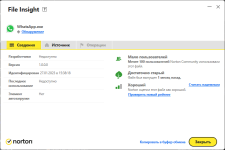

About Norton Security: it flags some good files as WS.Reputation, and it reports some malicious files as good.

It's not that often. It's probably forgivable. Everyone decides for himself whether to forgive or not.

Sent the file to their lab.


Andy Ful


That is interesting malware. It has a broken certificate, so it is not benign. Most of the top AVs do not recognize it as malicious, even though it is a few weeks old (created in the year 2021???). It is very probable that it is already "dead" (not harmful). It is also very probable that Norton could have blocked it while it was still alive (low prevalence and a short time in the wild). Many malware samples are alive only for a short time, from several minutes to a few hours.

The VT info also suggests that the truly malicious actions are probably performed not by this executable but via DLL hijacking. In such a case, Norton would probably block the attack by blocking the malicious DLL.

There can be many similar cases where Norton does not detect the malware, but the user is protected anyway.


- Aug 13, 2012

- 186

Andy Ful


The file was part of the attack (it is not a legitimate file), so even if it is already dead or not malicious by itself (without a payload), it should not be on the computer. Norton's detection is not perfect, but its protection is far better thanks to Download Insight.

Anyway, reputation-based solutions are imperfect too. I can imagine that coin-miner malware that uses 30% of the CPU could be installed on many computers (even intentionally) and bypass reputation-based protection. The same is true for some legal adware.


- Mar 13, 2021

- 470

I've read in some articles that Microsoft Defender protects better if you use Edge and it's not the same with other browsers, even if you use the browser extension. Is that true?

Andy Ful


> I've read in some articles that Microsoft Defender protects better if you use Edge and it's not the same with other browsers, even if you use the browser extension. Is that true?

Yes, if you use Edge with SmartScreen + PUA enabled. If I recall correctly, the extension (Windows Defender Browser Protection) does not include PUA.

ForgottenSeer 97327

Real-World tests include fresh web-originated samples.

Malware Protection tests include older samples (several days old) usually delivered via USB drives or network drives.

Real-World Triathlon 2021-2022: SE Labs, AV-Comparatives, AV-Test (7548 samples in 24 tests)

-------------------Missed samples

Norton 360..................12..... =

Avast...........................13..... +

Kaspersky....................18..... =

Microsoft...................*27.5.. =

McAfee ........................37.... +

Avira ............................43.... =

Comparison with the period 2019-2021:

- no significant changes in protection ( = )

- significant improvement in protection ( + )

Real-World Biathlon 2021-2022: SE Labs, AV-Comparatives, AV-Test (6748 samples in 16 tests)

-------------------Missed samples

Norton......................6

Bitdefender .............9

Avast........................10

Kaspersky................13

TrendMicro..............13

Microsoft...............*22.5

McAfee....................23

Malwarebytes.........26

Avira........................30

Malware Protection Biathlon 2021-2022: AV-Test, AV-Comparatives (270634 samples in 16 tests)

-------------------Missed samples

Norton 360.............1........ =

McAfee...................3....... +

Bitdefender.............5

Avast....................15........ +

Kaspersky.............28....... =

Microsoft............. 30....... =

Avira ....................45....... =

Malwarebytes.....173

TrendMicro ........623....... -

Comparison with the period 2019-2021:

- no significant changes in detection ( = )

- significant improvement in detection ( + )

- significant decrease of detection ( - )

Why a two-year period?

The results of any single test made by SE Labs, AV-Test, or AV-Comparatives are useless for comparing AVs. In most cases, the statistical errors allow one to say only that the first 10 AVs, as a group, can be awarded. This follows from the too-small number of tested samples. I noticed that even a period of one year is not sufficient.
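To make the point about statistical errors concrete, here is a rough sketch in Python. It is not the method used in this thread (no statistical model is applied to these results). It only assumes, as a simplification, that a missed-sample count behaves like a Poisson count with a standard error of about its square root. The function name `distinguishable` and the threshold `z = 2.0` are hypothetical choices made for illustration.

```python
import math


def distinguishable(missed_a: float, missed_b: float, z: float = 2.0) -> bool:
    """Rough heuristic: is the gap between two missed-sample counts larger
    than z combined standard errors, assuming Poisson-like counting noise?"""
    # Under the Poisson assumption each count's variance equals the count,
    # so the standard error of the difference is sqrt(a + b).
    combined_error = math.sqrt(missed_a + missed_b)
    return abs(missed_a - missed_b) > z * combined_error


# Missed-sample counts taken from the Real-World Triathlon 2021-2022 table.
print("Norton 360 vs Avast:", distinguishable(12, 13))
print("Norton 360 vs Avira:", distinguishable(12, 43))
```

Under this assumption, a gap of one missed sample (12 vs 13) is far inside the noise, while a gap of 31 (12 vs 43) is not. Hidden factors in the labs' sample selection can make the real uncertainty larger than this simple estimate.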

Some thoughts about these results:

https://malwaretips.com/threads/the-best-home-av-protection-2019-2020.106485/post-927440

- The entries with the same color cannot be differentiated due to statistical errors.

- The differences between several AVs are very small and they can hardly be noticed by the home user.

- In some cases, the differences can disappear by tweaking the AV settings.

https://selabs.uk/consumer/

https://www.av-comparatives.org/news-archive/

https://www.av-test.org/en/antivirus/home-users/

If you intend this post to be serious, I have some serious questions (the last being the most statistically relevant one):

- What do the color codes mean?

- What does the asterisk for Microsoft mean?

- What is the difference between the Real-World Triathlon and Biathlon in your categories?

- If Biathlon means those AVs took part in only 2 out of 3 labs, on what criteria did you exclude the missed samples of the AVs mentioned in the Triathlon?

- On what basis did you decide that the sample set from two years of testing is big enough to be representative of the total malware population in those two years?

Andy Ful


> If you intend this post to be serious, I have some serious questions (the last being the most statistically relevant one):
>
> - What do the color codes mean?

It is explained in the OP. The same color means that the statistical error is probably greater than the differences in missed samples. So, on the basis of the data, one cannot say which AV is better.

> - What does the asterisk for Microsoft mean?

It comes from:

https://malwaretips.com/threads/the-best-home-av-protection-2021.112213/post-973591

* AV-Comparatives rejected the partial results in the test February-May 2021 due to incorrect Defender updates. So, the result of Microsoft Defender in the first half of the year was calculated as an average of other tests.

> - What is the difference between the Real-World Triathlon and Biathlon in your categories?

The number of AV labs.

> - If Biathlon means those AVs took part in only 2 out of 3 labs, on what criteria did you exclude the missed samples of the AVs mentioned in the Triathlon?

The missed samples are added. So, the Biathlon does not include missed samples from SE Labs tests.

> - On what basis did you decide that the sample set from two years of testing is big enough to be representative of the total malware population in those two years?

It is explained in the results for the period 2019-2020:

https://malwaretips.com/threads/the-best-home-av-protection-2019-2020.106485/post-927440

- Jan 28, 2018

- 2,464

> At some point why bother using a PC at all...

I interpreted this as a sacrifice of safety at the cost of convenience and fun living with computers.

A parked car is safe, but once it starts moving, there is no small risk of an accident. It is similar to that.

> So is an antivirus really necessary?

It is necessary, but overconfidence is prohibited. In my opinion, it is too overprotective to the extent that it interferes with the use of online software.

ForgottenSeer 97327

> It is explained in the results for the period 2019-2020:
>
> https://malwaretips.com/threads/the-best-home-av-protection-2019-2020.106485/post-927440

On what basis did you decide two years is enough? The test sample has to be relevant to the amount of malware in the wild in that specific period. Since nobody knows how much malware lives in the wild in a given month (you can't count what you don't detect), I am still in the dark about why two years is sufficient. Why not four years or eight?

As posted earlier: it makes me smile how passionately MT members debate the qualities and advantages of specific AVs while you are saying the differences are irrelevant due to too-small test sample sizes.

ForgottenSeer 97327

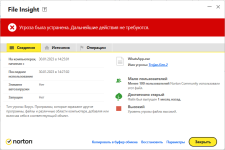

> About Norton Security. It detects some good files as WS.Reputation. And for some malicious files, it shows a good file.
>
> It's not that often. It's probably forgivable. Everyone decides for himself whether to forgive or not.
>
> Sent the file to their lab.

Whatsapp malicious

Well, look at what it does: by monitoring what you like on your friends'/contacts' posts, and because it knows your friends/contacts and your group memberships, it knows exactly your interests and preferences. Rumours say even pictures can be hashed by topic using AI/image recognition, so I am not surprised so many qualify this executable as malicious.

- Aug 13, 2012

- 186

FakeWhatsApp / Win32.Stealer.Whatsapp malicious

Andy Ful


> On what basis did you decide two years is enough? The test sample has to be relevant to the amount of malware in the wild in that specific period. Since nobody knows how much malware lives in the wild in a given month (you can't count what you don't detect), I am still in the dark about why two years is sufficient. Why not four years or eight?

The results from one year can be very different in the next year. This difference is significantly smaller when comparing the two-year periods. It is possible that a three-year period could also be OK.

Here is an example from the reference link:

Real-World: SE Labs, AV-Comparatives, AV-Test

-------------------------------2019----2020------

Avast Free...............26..............11

Microsoft................12..............25

The one-year results are totally different, but the two-year results are the same.

On the other hand, AV protection changes over time. So, it would not be reasonable to extend the period beyond two years.
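The arithmetic behind the 2019 and 2020 figures quoted above can be checked with a short Python sketch. The counts are copied from that example. The dictionary layout and the helper name `window_total` are only illustrative assumptions.

```python
# Missed samples per year, copied from the 2019-2020 Real-World example.
missed = {
    "Avast Free": {2019: 26, 2020: 11},
    "Microsoft": {2019: 12, 2020: 25},
}


def window_total(product: str, years: list[int]) -> int:
    """Sum the missed samples of one product over the given years."""
    return sum(missed[product][year] for year in years)


for product in missed:
    one_year = [window_total(product, [year]) for year in (2019, 2020)]
    two_years = window_total(product, [2019, 2020])
    print(f"{product}: per year {one_year}, two-year total {two_years}")
```

Each one-year window ranks the two products in the opposite order, while the two-year totals are identical (26 + 11 = 12 + 25 = 37).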

- Jul 5, 2019

- 617

> As posted earlier: it makes me smile how passionately MT members debate the qualities and advantages of specific AVs while you are saying the differences are irrelevant due to too-small test sample sizes.

Very well said.

ForgottenSeer 97327

> The results from one year can be very different in the next year. This difference is significantly smaller when comparing the two-year periods. It is possible that a three-year period could also be OK.
>
> Here is an example from the reference link:
>
> Real-World: SE Labs, AV-Comparatives, AV-Test
>
> -------------------------------2019----2020------
>
> Avast Free...............26..............11
>
> Microsoft................12..............25
>
> The one-year results are totally different, but the two-year results are the same.
>
> On the other hand, AV protection changes over time. So, it would not be reasonable to extend the period beyond two years.

Yes, you explained, but you did not answer my question.

I am just pulling your leg. I agree with your observation that the differences in missed malware are only relevant when the "real-world malware" sample is a statistically representative set of all malware in the wild for that reported month. Using the same logic, I am challenging the two-year period you used.

The fact is that you don't know the population size (of active malware in a given month), and you also don't know how much variation in results the AV labs want to report. Therefore we don't know the statistical relevance and representativeness of the sample sizes used by the AV labs (for real-world scenario testing, for instance). The same applies to your two-year period.

Andy Ful


> Yes, you explained, but you did not answer my question.
>
> I am just pulling your leg. I agree with your observation that the differences in missed malware are only relevant when the "real-world malware" sample is a statistically representative set of all malware in the wild for that reported month. Using the same logic, I am challenging the two-year period you used.
>
> The fact is that you don't know the population size (of active malware in a given month), and you also don't know how much variation in results the AV labs want to report. Therefore we don't know the statistical relevance and representativeness of the sample sizes used by the AV labs (for real-world scenario testing, for instance). The same applies to your two-year period.

Yes, that is right. But we know that if the statistical fluctuations are big, then the number of tests is insufficient.

So, I do not use any statistical model here. Instead, I try to find empirically the minimum sensible set of tests that keeps the statistical fluctuations relatively small.

I do not think that the methods noted in your video (based on the known statistical models) can help much because there are too many hidden factors, like:

- We do not know how many samples were in the wild.

- We do not know how many never-before-seen samples were in the wild.

- We do not know how many never-before-seen samples were tested.

- We do not know how the AV Labs manage the morphed samples.

- etc.

https://malwaretips.com/threads/randomness-in-the-av-labs-testing.104104/post-905376

https://malwaretips.com/threads/randomness-in-the-av-labs-testing.104104/post-905910

https://malwaretips.com/threads/randomness-in-the-av-labs-testing.104104/post-905991

https://malwaretips.com/threads/randomness-in-the-av-labs-testing.104104/post-909548

https://malwaretips.com/threads/randomness-in-the-av-labs-testing.104104/post-909620

https://malwaretips.com/threads/randomness-in-the-av-labs-testing.104104/post-917496
