Disclaimer: if you believe in large blocklists with 100,000 rules or more, stop reading this post (because this post is based on research data, not on alternative facts and popular make-believe).

Ad-blocking methods are developing in new and interesting directions, using several layers to increase the effectiveness of ad blocking and reduce website breakage caused by bad filters.


What is wrong with the old (large) blocklist approach?

What new techniques are evolving?

What is wrong with the old (large) blocklist approach?

- Large community-based blocklists (like EasyList) contain a lot of dead rules

A blog post based on field research explains the scope of dead or stale rules in the EasyPrivacy blocklist: "The Mounting Cost of Stale Ad Blocking Rules" (Brave Browser). The problem with user-maintained blocklists is that there is no automated mechanism to check whether a rule actually blocks anything. That is why some adblockers, like AdGuard, measure how many times rules are triggered and offer their own optimized versions of the community-based blocklists. The built-in adblockers of Brave and Opera also clean up their versions of the EasyList blocklist on a regular basis.
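To make the idea of measuring rule triggers concrete, here is a minimal sketch that counts how often each rule matches a log of request URLs and reports the rules that never fire. It assumes rules are plain substrings (real EasyList syntax has wildcards, anchors and options), and the function name `find_stale_rules` is hypothetical.

```python
from collections import Counter
from typing import Iterable


def find_stale_rules(rules: Iterable[str], request_log: Iterable[str]) -> list[str]:
    """Return the rules that never matched any URL in the request log.

    Assumption: a rule "matches" when it is a plain substring of the URL.
    This is a deliberate simplification of real filter syntax.
    """
    rules = list(rules)
    hits: Counter[str] = Counter()
    for url in request_log:
        for rule in rules:
            if rule in url:
                hits[rule] += 1
    # Rules with zero hits are candidates for removal from the list.
    return [rule for rule in rules if hits[rule] == 0]


rules = ["doubleclick.net", "/ads/banner", "tracker.example"]
log = ["https://ad.doubleclick.net/x", "https://news.example/ads/banner.png"]
print(find_stale_rules(rules, log))  # ['tracker.example']
```

Run over a large, representative crawl, the same counting shows how much of a list is dead weight.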

- Large community-based blocklists chase the wrong target.

The problem with most community-maintained blocklists is that they chase the symptoms, not the cause. The community chases ads on the websites its members visit. But the ads displayed on these websites are pushed to them by ad and tracking networks. As Peter Lowe explains on his website: "The ad banners that you see all over the web are stored on servers. Stopping your computer communicating with another computer can be quite simple. So, if you have a list of the servers used for ad banners, it's easy to stop ad banners even getting to your browser." So blocking ads on individual websites, as most community-maintained lists do, is putting the cart before the horse.
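The server-level approach quoted above can be sketched in a few lines: instead of per-site rules, check the request's host against a list of ad servers. This assumes the list is a set of host names and that listing a domain also covers its subdomains; that is a common convention, but an assumption here, and `is_blocked` is a hypothetical name.

```python
from urllib.parse import urlsplit


def is_blocked(url: str, blocked_hosts: set[str]) -> bool:
    """True if the URL's host, or any parent domain of it, is on the list."""
    host = (urlsplit(url).hostname or "").lower()
    labels = host.split(".")
    # For "ad.doubleclick.net" check "ad.doubleclick.net", "doubleclick.net", "net".
    return any(".".join(labels[i:]) in blocked_hosts for i in range(len(labels)))


blocked = {"doubleclick.net"}
print(is_blocked("https://ad.doubleclick.net/banner.gif", blocked))  # True
print(is_blocked("https://notdoubleclick.net/", blocked))            # False
```

One host entry covers every page that embeds that server, which is the point of targeting the cause rather than the symptom.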

What new techniques are evolving?

- Small blocklist with isolation.

Firefox uses the Disconnect blocklists together with containers (which keep parts of your online life separated to preserve your privacy). When you are logged in to Google or Facebook and visit other websites, you disclose a lot more information. Firefox containers prevent this.
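A toy model shows why containers help: if cookies are stored per container, a Facebook login in one container is invisible to a Facebook widget loaded on a site opened in another container. This is a conceptual sketch only; `ContainerCookieJar` is hypothetical and is not how Firefox implements containers.

```python
from collections import defaultdict


class ContainerCookieJar:
    """Cookie storage partitioned by container: each container has its own jar."""

    def __init__(self) -> None:
        # container name -> cookie domain -> {cookie name: value}
        self._jars: dict[str, dict[str, dict[str, str]]] = defaultdict(
            lambda: defaultdict(dict)
        )

    def set_cookie(self, container: str, domain: str, name: str, value: str) -> None:
        self._jars[container][domain][name] = value

    def get_cookies(self, container: str, domain: str) -> dict[str, str]:
        # .get() avoids creating empty entries on read; return a copy.
        jar = self._jars.get(container, {})
        return dict(jar.get(domain, {}))


jar = ContainerCookieJar()
jar.set_cookie("Facebook", "facebook.com", "session", "abc")
print(jar.get_cookies("Facebook", "facebook.com"))  # {'session': 'abc'}
print(jar.get_cookies("Shopping", "facebook.com"))  # {}
```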

- Small blocklist with smart whitelisting

Microsoft Edge also uses the (small) Disconnect blocklists and reduces website breakage with a user engagement score (whitelisting known trackers based on the user's interaction with a specific website).
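Engagement-based whitelisting can be sketched as a per-site score that, once high enough, lifts blocking for that site's trackers. The scoring rule (one point per active minute, two per interaction) and the threshold are invented for illustration; they are not Microsoft Edge's actual formula, and the class name is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class EngagementAllowlist:
    """Relax tracker blocking for sites the user visibly engages with.

    Hypothetical scoring and threshold, not Edge's real implementation.
    """

    threshold: float = 5.0
    scores: dict[str, float] = field(default_factory=dict)

    def record_visit(self, site: str, seconds_active: float, interacted: bool) -> None:
        # Hypothetical score: 1 point per minute of active time, +2 per interaction.
        gain = seconds_active / 60 + (2 if interacted else 0)
        self.scores[site] = self.scores.get(site, 0.0) + gain

    def should_block(self, tracker_site: str, is_known_tracker: bool) -> bool:
        # Known trackers are blocked unless the user engages with that site directly.
        if not is_known_tracker:
            return False
        return self.scores.get(tracker_site, 0.0) < self.threshold


allow = EngagementAllowlist()
print(allow.should_block("facebook.com", True))  # True: no engagement yet
allow.record_visit("facebook.com", seconds_active=240, interacted=True)
print(allow.should_block("facebook.com", True))  # False: score 6.0 >= 5.0
```

The design trade-off is that users who actively use a service get fewer broken embeds from it, at the cost of that service seeing them elsewhere.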

- Small blocklist with heuristics-based blacklisting

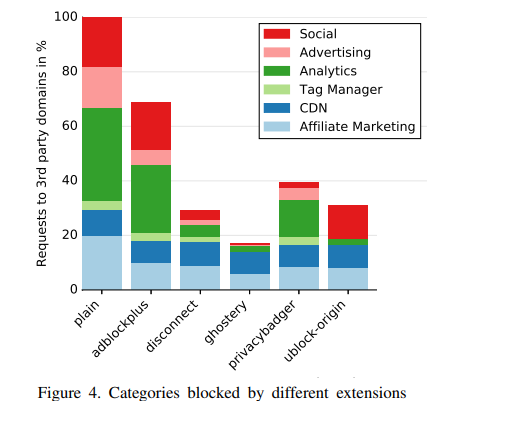

This is how Privacy Badger works: when a single third-party host tracks you on three separate sites, that ad and tracking server is automatically disallowed. The information provided on Privacy Badger's website is no longer up to date. Ghostery uses a similar but more advanced heuristic approach than Privacy Badger: it evaluates URL parameters. When a URL parameter carries the same value more than three times within the last two days, it is filtered (link). This is more granular than Privacy Badger (three strikes and you're out, at the domain level) and causes less website breakage. Ghostery's blocking of URL parameters is similar to AdGuard's Stealth Mode blocking of UTM parameters, but Ghostery's approach is more granular than AdGuard's system-wide URL-parameter filtering. This Ghostery/Cliqz claim is backed by research (paper).
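Privacy Badger's "three strikes" rule, as described above, amounts to counting distinct first-party sites per third-party domain. This simplified model assumes a single boolean "sets an identifier" signal; Privacy Badger's real detection (cookies, local storage, canvas fingerprinting) is more involved, and `ThreeStrikesTracker` is a hypothetical name.

```python
from collections import defaultdict


class ThreeStrikesTracker:
    """Block a third-party domain once it is seen tracking on N distinct sites."""

    def __init__(self, strikes: int = 3) -> None:
        self.strikes = strikes
        # third-party domain -> set of first-party sites it tracked on
        self._seen_on: dict[str, set[str]] = defaultdict(set)

    def observe(self, third_party: str, first_party: str, sets_identifier: bool) -> None:
        # Only count observations where the third party appears to track the user.
        if sets_identifier and third_party != first_party:
            self._seen_on[third_party].add(first_party)

    def is_blocked(self, third_party: str) -> bool:
        return len(self._seen_on.get(third_party, set())) >= self.strikes


t = ThreeStrikesTracker()
for site in ["a.com", "b.com", "a.com"]:  # a repeat visit is not a new strike
    t.observe("tracker.net", site, sets_identifier=True)
print(t.is_blocked("tracker.net"))  # False: only two distinct sites
t.observe("tracker.net", "c.com", sets_identifier=True)
print(t.is_blocked("tracker.net"))  # True
```

Because the unit of decision is the whole domain, one strike-out blocks everything that domain serves, which is why a parameter-level heuristic breaks fewer sites.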

- Machine learning (AdGraph)

At the upcoming privacy and security symposium (link), a 'new' approach to using machine learning for ad blocking will be presented (called AdGraph, link). Why is 'new' in quotes? Because this proof-of-concept Chromium browser (source code on GitHub, link) is based on a 2018 study (link) sponsored and co-created by Brave (link).

According to the paper, AdGraph automatically and effectively blocks ads and trackers with 97.7% accuracy. AdGraph even has better recall than filter lists: it blocks 16% more ads and trackers, with 65% accuracy. The analysis also shows that AdGraph is fairly robust against the adversarial obfuscation that publishers and advertisers use to bypass filter lists.

AdGraph builds a graph of each page and identifies advertising and tracking resources based on the HTML structure, JavaScript behavior, and network requests made during execution. The PoC Chromium build uses modified Blink (renderer) and V8 (JavaScript) engines to attribute and classify the third-party call traces of ad networks. AdGraph detects the evasion techniques of advertising networks much better, and it loads pages faster than stock Chromium on 42% of pages and faster than Adblock Plus on 78% of pages. But this deep integration is also the Achilles heel of the approach. If Brave decided to use this innovation, it would have to maintain not only its own fork (program code variant) of the browser (Chromium), but also forks of the renderer (Blink) and the JavaScript engine (V8). This is probably why Brave has not embraced the technology from this 2018 study it co-funded and co-created.
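To give a feel for the graph-based idea, here is a toy page graph (HTML elements, scripts, network requests) and a function that extracts a few structural and content features for one request. The features are in the spirit of the ones the AdGraph paper describes, but this small set, the keyword list and all names are illustrative assumptions. AdGraph feeds a much richer feature set to a trained classifier; that step is omitted here.

```python
from dataclasses import dataclass, field
from typing import Optional
from urllib.parse import urlsplit

AD_KEYWORDS = ("ads", "banner", "track", "pixel")  # illustrative keyword list


@dataclass
class Node:
    """A node in a simplified page graph: "html", "script" or "request"."""

    kind: str
    url: Optional[str] = None
    parent: Optional["Node"] = field(default=None, repr=False, compare=False)
    children: list["Node"] = field(default_factory=list)

    def add(self, child: "Node") -> "Node":
        child.parent = self
        self.children.append(child)
        return child


def features(node: Node, first_party_host: str) -> dict[str, float]:
    """Structural and content features for one request node."""
    depth, script_ancestor, p = 0, 0.0, node.parent
    while p is not None:  # walk up to the root
        depth += 1
        if p.kind == "script":
            script_ancestor = 1.0
        p = p.parent
    url = node.url or ""
    parts = urlsplit(url)
    host = parts.hostname or ""
    same_site = host == first_party_host or host.endswith("." + first_party_host)
    return {
        "depth": float(depth),
        "script_ancestor": script_ancestor,
        "third_party": float(not same_site),
        "query_length": float(len(parts.query)),
        "ad_keyword": float(any(k in url.lower() for k in AD_KEYWORDS)),
        "num_siblings": float(len(node.parent.children) - 1) if node.parent else 0.0,
    }


root = Node("html")
script = root.add(Node("script", url="https://ads.example-cdn.net/loader.js"))
req = script.add(Node("request", url="https://ads.example-cdn.net/banner.gif?uid=12345"))
print(features(req, "news.example"))
```

Features like "was this request triggered by a third-party script" are what make the approach robust to URL obfuscation, and collecting them is why AdGraph needs hooks inside Blink and V8.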

Humble suggestion from a junior member to browser developers:

Develop an adblocker using a short blocklist (for third-party cookies, fingerprinting and scripts, like Privacy Badger's initial JSON blacklist) combined with Edge's user engagement whitelisting mechanism and Ghostery's heuristics-based URL-parameter filtering to limit third-party exposure, with an OPT-IN for data collection.
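The layered decision proposed above could look roughly like this sketch for a single third-party request: a short blocklist first, relaxed for engaged sites, then stripping of parameters whose values are suspected identifiers. The function name, the host-level engagement set and the precomputed `suspected_uid_values` set are all hypothetical simplifications.

```python
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def filter_request(
    url: str,
    blocklist: set[str],
    engaged_sites: set[str],
    suspected_uid_values: set[str],
) -> Optional[str]:
    """Return None to block the request, else the URL with suspected IDs removed."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    # Layer 1: short blocklist, relaxed for sites the user engages with.
    if host in blocklist and host not in engaged_sites:
        return None
    # Layer 2: strip parameters whose values look like unique identifiers.
    kept = [
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if v not in suspected_uid_values
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))


print(filter_request("https://cdn.example/x.js?uid=abc123&v=2",
                     {"tracker.net"}, set(), {"abc123"}))
# https://cdn.example/x.js?v=2
```

Stripping a parameter instead of blocking the request keeps the resource loading, which is where the reduced breakage comes from. Note that `urlencode` may re-encode the kept values slightly differently from the original query string.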

With the collected OPT-IN data, the "no-UID safe set" cloud whitelist is optimized using machine learning technology. No-UID safe-set attributes are excluded from Ghostery's local "same URL-parameter value" analysis. For example, 1920x1080 and 720x1280 are common URL-parameter values, but they probably represent the screen size, so they should be excluded as possible unique identifiers. When a user does not OPT-IN, this adblocker does not use the cloud "URL-parameter safe set" (only the local version). At browser launch, the local cookie/fingerprint/script blacklist and the "safe-set URL-parameter" whitelist are updated.
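The local "same URL-parameter value" analysis with a safe set could be sketched as a sliding-window counter per value. It takes the numbers from this post at face value (filtered when seen more than three times within two days), assumes timestamps arrive in non-decreasing order, and uses a hypothetical class name; it is not Ghostery's implementation.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 2 * 24 * 3600  # "the last two days"
MAX_REPEATS = 3                 # filtered when seen MORE than three times


class RepeatedValueDetector:
    """Flag URL-parameter values that recur too often, except safe-set values."""

    def __init__(self, safe_set: set[str]) -> None:
        self.safe_set = safe_set  # e.g. common screen sizes like "1920x1080"
        self._seen: dict[str, deque[float]] = defaultdict(deque)

    def observe(self, value: str, now: float) -> bool:
        """Record one occurrence (timestamp in seconds); True means filter it."""
        if value in self.safe_set:
            return False
        times = self._seen[value]
        times.append(now)
        # Drop occurrences that fell out of the two-day window.
        while times and times[0] <= now - WINDOW_SECONDS:
            times.popleft()
        return len(times) > MAX_REPEATS


d = RepeatedValueDetector(safe_set={"1920x1080"})
print([d.observe("a9f3c2", t) for t in (0, 10, 20, 30)])  # [False, False, False, True]
print(d.observe("1920x1080", 40))                          # False: in the safe set
```

In the proposal, an opted-in cloud service would grow `safe_set` over time, while users who do not opt in keep only the local version.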

