- Jun 5, 2017


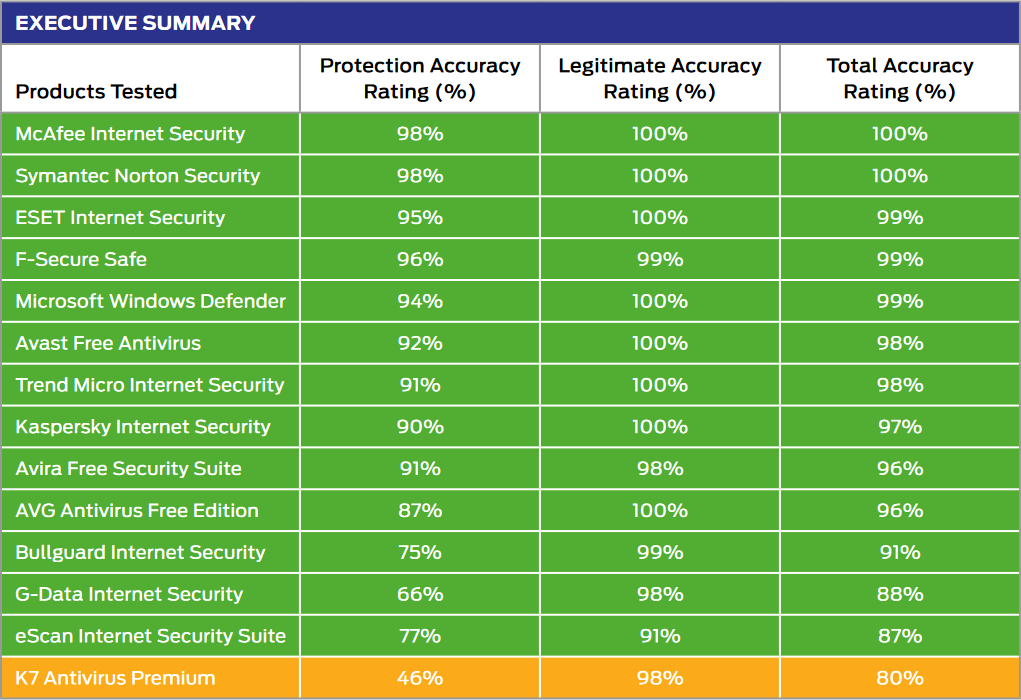

Executive Summary

Exact percentages, the methodology, and much more detail on how each program was scored, based on how it handled each piece of malware, can be found in the full report: https://selabs.uk/download/consumers/epp/2019/jan-mar-2019-home.pdf

Most products were largely capable of handling public web-based threats such as those used by criminals to attack Windows PCs, tricking users into running malicious files or running scripts that download and run malicious files.

- The security software products were generally effective at handling general threats from cyber criminals...

Many products were also competent at blocking more targeted, exploit-based attacks. However, while some did very well in this part of the test, others were much weaker. Products from K7 and G-Data were notably weaker than the competition.

- ... and targeted attacks were prevented in many cases.

Most of the products were good at correctly classifying legitimate applications and websites. The vast majority allowed all of the legitimate websites and applications. eScan's product was the least accurate in this part of the test.

- False positives were not an issue for most products.

Products from McAfee, Symantec (Norton), ESET, F-Secure and Microsoft achieved extremely good results due to a combination of their ability to block malicious URLs, handle exploits and correctly classify legitimate applications and websites.

- Which products were the most effective?

[Note: "The web browser used in this test was Google Chrome. When testing Microsoft products, Chrome was equipped with the Windows Defender Browser Protection browser extension."]
