- Dec 23, 2014

Y'all complain Leo isn't doing it correctly, while claiming that independent labs, which do the exact same thing as him, are somehow "correct".

It is not so hard to see the difference, for example:

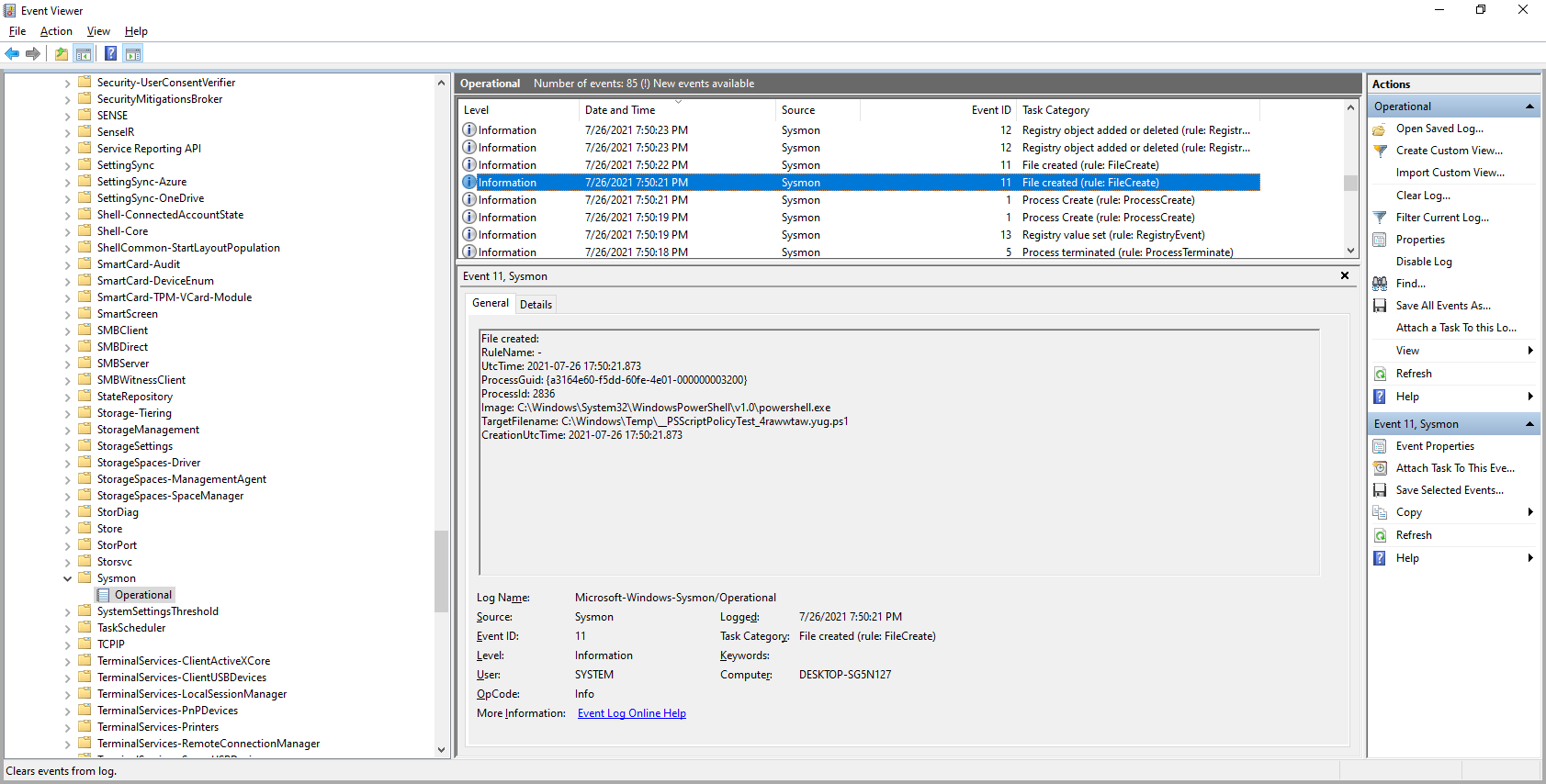

Analyzing System Logs » AVLab Cybersecurity Foundation

Question - What does the name of the "Advanced In-The-Wild Malware Test" mean?

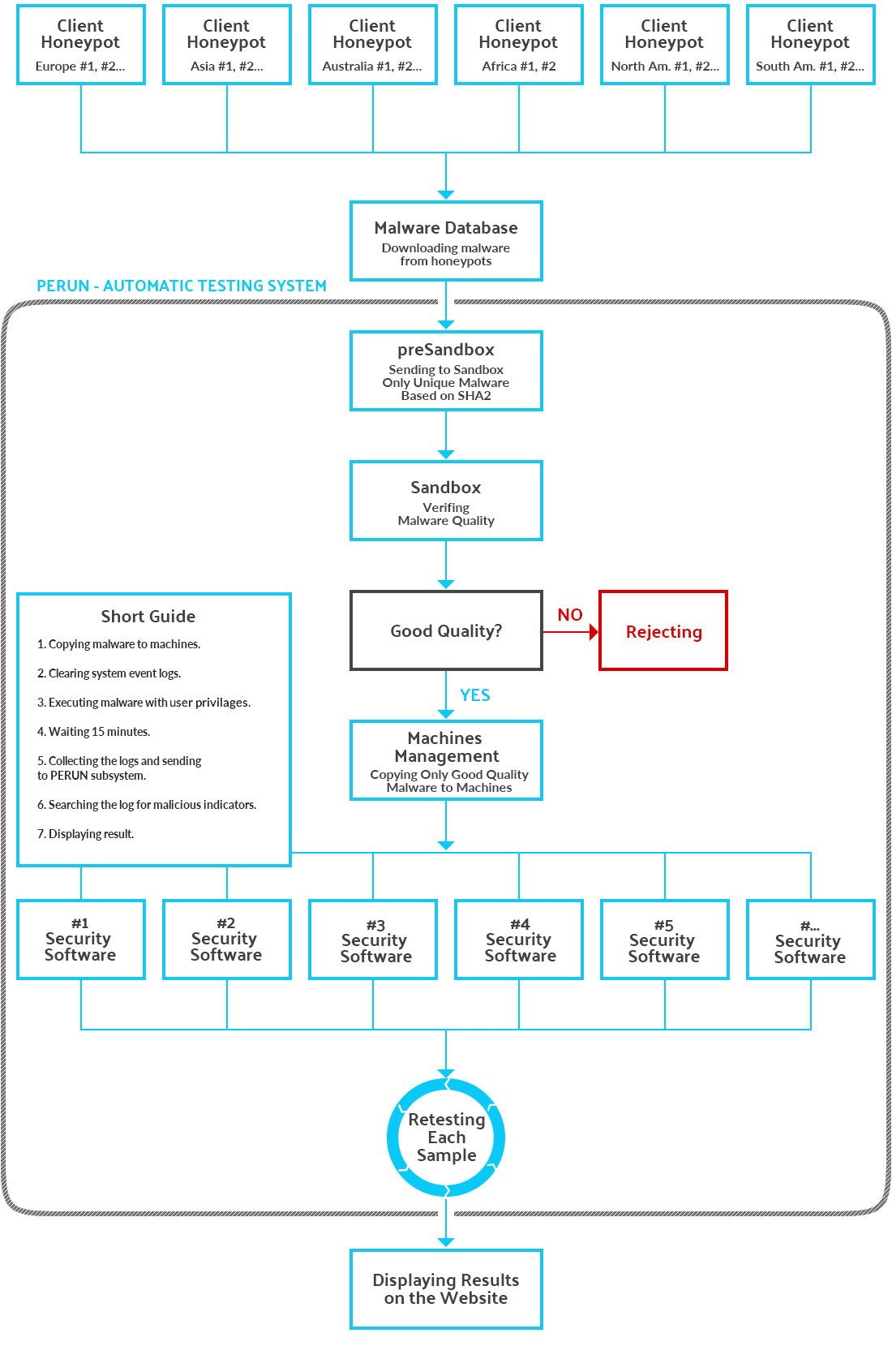

Methods Of Carrying Out Automatic Tests » AVLab Cybersecurity Foundation

Making professional tests requires several people, resources, and time.

Shrinking the people, resources, and time = Leo's video.
